
Introduction

Apache Airflow is an open-source platform whose primary purposes are authoring, scheduling, and monitoring workflows. It was launched in 2014 under the aegis of Airbnb and later entered the Apache Incubator. Since then, it has built a stellar reputation, with about 800 contributors and 13,000 stars on GitHub.

Airbnb created the workflow (data-pipeline) management technology known as Apache Airflow. More than 200 businesses use it, including Airbnb, Yahoo, PayPal, Intel, Stripe, and many more.

Airflow is built around workflows represented as directed acyclic graphs (DAGs). Such a workflow might, for instance, combine data from many sources before running an analysis script. Airflow orchestrates the systems involved and schedules the tasks while respecting the dependencies between them.


Directed Acyclic Graphs (DAGs)

All jobs and tasks in a pipeline are organized into DAGs, or directed acyclic graphs. The tasks are arranged according to their relationships and dependencies. For instance, if you want to query database one and then load the results into database two, the task that queries database one must run before the task that loads the results into database two. Because the graph is directed and acyclic, the pipeline can only move forward, never backward. A task can be retried, but once it has finished and a downstream task has started, it cannot be started again.
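The ordering rule above can be illustrated with a small sketch that uses only the Python standard library. It is not Airflow code: the task names and the helper functions `execution_order` and `is_valid_dag` are hypothetical, chosen to mirror the database example in the text.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical task names. Each key maps to the set of tasks it depends on.
dependencies = {
    "query_database_one": set(),
    "load_into_database_two": {"query_database_one"},
    "run_analysis": {"load_into_database_two"},
}


def execution_order(deps):
    """Return one valid run order in which every task follows its dependencies."""
    return list(TopologicalSorter(deps).static_order())


def is_valid_dag(deps):
    """Return False if the dependencies contain a cycle, True otherwise."""
    try:
        TopologicalSorter(deps).prepare()
    except CycleError:
        return False
    return True


order = execution_order(dependencies)
print(order)
print(is_valid_dag({"a": {"b"}, "b": {"a"}}))  # a cycle, so not a DAG
```

A dependency loop such as `a -> b -> a` is rejected, which is the "acyclic" part of the name: a pipeline with a loop has no order in which it can move only forward.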

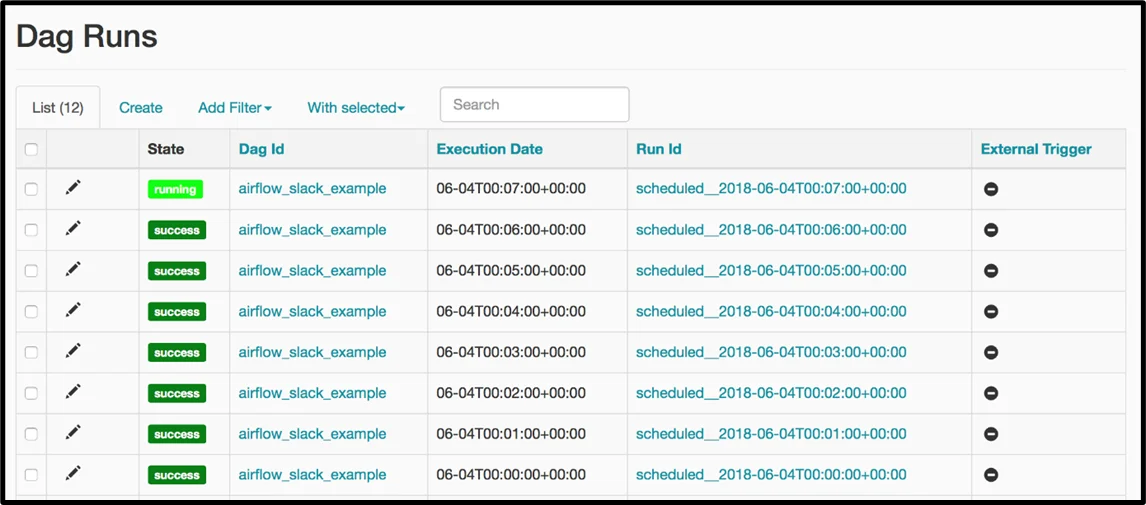

The home page of the Airflow UI is the DAGs page. It lists each DAG along with its schedule and an on/off switch.

The following screenshot of that page shows the DAGs with their DAG IDs and status.

Tasks/Operators: Ideally, tasks are independent units that do not rely on data from other tasks. An operator is a class that defines a unit of work. Instantiating an operator inside a DAG creates a task, and the operator code runs when that task is executed.
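The relationship between an operator class and its tasks can be sketched with a toy model. `ToyOperator` below is a hypothetical stand-in written for this illustration and is not the real Airflow operator base class; it only shows that one class can be instantiated several times, and that the work happens when the task is executed rather than when it is defined.

```python
class ToyOperator:
    """Hypothetical stand-in for an operator class (not Airflow's own)."""

    def __init__(self, task_id, message):
        # Creating the object only records what the task should do.
        self.task_id = task_id
        self.message = message

    def execute(self):
        # The operator code runs only when the task is executed.
        return f"{self.task_id}: {self.message}"


# Instantiating the same operator class twice yields two distinct tasks.
extract = ToyOperator(task_id="extract", message="pull rows")
load = ToyOperator(task_id="load", message="write rows")

results = [task.execute() for task in (extract, load)]
print(results)
```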

Below is the visual representation of the tasks and the order in which they need to be executed.

Four Major Parts

Scheduler: The scheduler monitors all DAGs and the tasks they contain. It regularly checks for tasks whose dependencies have been met and triggers them.

Web server: The web server provides the user interface for Airflow. It displays the status of the jobs, lets the user interact with the databases, and lets them read log files from remote file stores such as Amazon S3, Azure Blob Storage, and Google Cloud Storage.

Database: The state of the DAGs and their associated tasks is saved in the database so that the scheduler retains metadata information. Airflow connects to the metadata database using SQLAlchemy and Object Relational Mapping (ORM). The scheduler scans each DAG and stores important information such as schedule intervals, run-by-run statistics, and task instances.

Executors:

- SequentialExecutor: With this executor, only one task runs at a time. No parallel processing is possible. It is useful for testing or debugging.

- LocalExecutor: This executor runs tasks in parallel as separate processes on one machine. It is suitable for running Airflow on a single node or a local workstation.

- CeleryExecutor: This executor distributes tasks to a pool of Celery workers and is a common choice for running a distributed Airflow cluster.

- KubernetesExecutor: Using the Kubernetes API, the KubernetesExecutor creates a temporary pod for each task instance to run in.
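The difference between running one task at a time and running several in parallel can be sketched with the standard library. This is only an analogy for the executor types listed above, not how Airflow implements them: `run_task`, `run_sequential`, and `run_parallel` are hypothetical names, and a thread pool is used here purely to keep the example short.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def run_task(name):
    """Pretend to do some work, then report which task ran."""
    time.sleep(0.1)
    return name


def run_sequential(names):
    # One task at a time, in the spirit of a sequential executor.
    return [run_task(name) for name in names]


def run_parallel(names, max_workers=4):
    # Several tasks at once; map() still returns results in input order.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(run_task, names))


tasks = ["a", "b", "c", "d"]

start = time.perf_counter()
sequential_result = run_sequential(tasks)
sequential_seconds = time.perf_counter() - start

start = time.perf_counter()
parallel_result = run_parallel(tasks)
parallel_seconds = time.perf_counter() - start

print(f"sequential: {sequential_seconds:.2f}s, parallel: {parallel_seconds:.2f}s")
```

Both functions return the same results; only the elapsed time differs, which is why a sequential setup is fine for debugging while a parallel or distributed one suits real workloads.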

Working

Airflow reviews each DAG in the background at a regular interval. This interval is set by the processor_poll_interval configuration value, which is one second by default. Task instances are created for the tasks that need to run, and their state in the metadata database is set to SCHEDULED.

The scheduler retrieves tasks in the SCHEDULED state from the database and distributes them to the executor. The state of each task then becomes QUEUED. Workers pick those tasks up from the queue and execute them, at which point the state of the task changes to RUNNING.
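The state changes described above can be sketched as a small state machine. This is a simplified model covering only the three states named in the text; `State`, `NEXT_STATE`, `advance`, and `lifecycle` are hypothetical names for this illustration, and Airflow itself tracks additional states that are not shown here.

```python
from enum import Enum


class State(Enum):
    SCHEDULED = "scheduled"
    QUEUED = "queued"
    RUNNING = "running"


# Forward transitions described in the text (a simplification).
NEXT_STATE = {
    State.SCHEDULED: State.QUEUED,  # scheduler hands the task to the executor
    State.QUEUED: State.RUNNING,    # a worker picks the task up from the queue
}


def advance(state):
    """Return the next state, or raise if the text describes none."""
    if state not in NEXT_STATE:
        raise ValueError(f"no transition defined from {state.name}")
    return NEXT_STATE[state]


def lifecycle(start=State.SCHEDULED):
    """Follow the transitions from a starting state until none remain."""
    states = [start]
    while states[-1] in NEXT_STATE:
        states.append(advance(states[-1]))
    return states


print([state.name for state in lifecycle()])
```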

Features

- Simple to Use: If you know a little Python, you are ready to get started with Airflow.

- Open Source: It has many active users and is free and open-source.

- Robust Integrations: Ready-to-use operators let you work with Google Cloud Platform, Amazon Web Services, Microsoft Azure, and other platforms.

- Coding in Standard Python: Python offers total flexibility for creating workflows, from the simplest to the most complex.

- Amazing User Interface: You can manage and monitor your workflows and view the status of both finished and ongoing jobs.

Conclusion

Apache Airflow is open-source, flexible, and scalable, and it supports dependency management. Its primary purposes are to author, schedule, and monitor workflows, and it accomplishes this by representing them as directed acyclic graphs (DAGs).



FAQs

1. What is Airflow?

ANS: – Workflows can be created, scheduled, and monitored programmatically using Airflow. Data engineers use it to coordinate workflows or pipelines.

2. What is a DAG?

ANS: – A DAG (Directed Acyclic Graph) is the core concept of Airflow. It groups tasks and organizes them with dependencies and relationships that specify how they should run.

WRITTEN BY Vinayak Kalyanshetti


April 17, 2023
