|

Voiced by Amazon Polly |

Introduction

As businesses increasingly use generative AI in their operations, basic prompt engineering or generic foundation models often fall short of real-world needs. Many business workflows require systems that can think deeply, specialize in certain areas, manage behaviour, and make reliable decisions at different steps. This demand has led to multi-agent orchestration, where several AI agents collaborate, each handling a specific task within a larger workflow.

However, managing multiple agents effectively at scale is difficult. Without proper coordination, agents can produce inconsistent outputs, inefficient reasoning paths, or unsafe results. This is why advanced fine-tuning techniques are crucial. These methods enable businesses to build strong, scalable, high-performing agent systems that go beyond simple customization. This blog examines how fine-tuning methods have evolved, their role in multi-agent orchestration, their real-world applications, and best practices for system design.

Pioneers in Cloud Consulting & Migration Services

- Reduced infrastructural costs

- Accelerated application deployment

Why Advanced Fine-Tuning Still Matters?

Despite major advancements in foundation models, many enterprise AI applications still need targeted fine-tuning. High-stakes areas, such as healthcare validation, engineering decision support, or content governance, often require accuracy, reliability, and clarity that generic models cannot consistently provide.

Advanced fine-tuning helps models understand domain rules, align with organizational standards, and behave predictably in unique cases. It also reduces operational risks by minimizing inaccuracies and improving consistency across agents. In large-scale systems, these benefits are even more pronounced, as the output quality of one agent directly affects downstream agents.

Evolution of Fine-Tuning Techniques

Fine-tuning has improved from basic supervised methods to more sophisticated optimization strategies tailored for complex reasoning and multi-step workflows.

Supervised Fine-Tuning (SFT)

Supervised Fine-Tuning is the starting point for customizing models. It involves training a model on labelled input-output pairs suited to a specific domain or task. SFT effectively teaches structured responses, enforces formats, and incorporates domain-specific vocabulary.

However, SFT alone struggles with complex reasoning, long-term planning, and tasks that rely on context rather than fixed labels.

Reinforcement Learning with PPO

To tackle these challenges, reinforcement learning techniques like Proximal Policy Optimization (PPO) were developed. PPO optimizes model behavior using reward signals rather than explicit labels, enabling the model to learn from outcomes rather than just examples.

This approach is well-suited for tasks involving decision-making, tool use, or iterative refinement. PPO enables agents to balance exploration and reliability, but it can be costly and sensitive to the design of the reward system.

Direct Preference Optimization (DPO)

Direct Preference Optimization simplifies reinforcement learning by eliminating the need for a separate reward model. Instead, it directly trains the model using comparisons of better and worse outputs from humans.

DPO provides better training stability and alignment, making it particularly effective for improving response quality, tone, and safety in multi-agent systems where consistent behaviour is essential.

Advanced Reasoning Optimization Techniques

As agent workflows became more complex, new optimization strategies emerged:

- Group-based Reinforcement Policy Optimization (GRPO): Improves reasoning by comparing groups of outputs rather than individual samples, leading to higher-quality reasoning paths.

- Direct Advantage Policy Optimization (DAPO): Builds on GRPO with detailed sequence-level advantages, enhancing long-form and multi-step reasoning.

- Group Sequence Policy Optimization (GSPO): Focuses on optimizing entire reasoning sequences, making it suitable for orchestration tasks that involve planning, delegation, and execution across agents.

These methods are particularly helpful when agents need to reason over long sequences of actions or coordinate across multiple stages.

Real-World Applications

Advanced fine-tuning techniques have shown a significant impact in several fields:

- Healthcare Systems: Fine-tuned models used for checking medical instructions significantly reduced critical errors, improving patient safety.

- Engineering and Operations: Domain-specific agents significantly reduced expert review time by providing accurate, context-aware answers to complex technical queries.

- Content Quality and Compliance: Specialized classification models achieved substantial accuracy improvements at scale, enabling automatic quality control across millions of submissions.

These examples show how fine-tuning transforms foundation models into reliable enterprise tools.

Reference Architecture for Multi-Agent Systems

A scalable multi-agent architecture depends on clearly defined roles and continuous evaluation:

- Model Customization Layer: Models are fine-tuned using methods ranging from SFT to advanced reasoning optimization based on task complexity.

- Agent Layer: Individual agents are created with specific responsibilities, tool access, and state management.

- Orchestration Layer: A central orchestrator directs task routing, agent collaboration, and result aggregation.

- Deployment and Runtime: Secure and scalable execution environments ensure low latency and operational reliability.

- Evaluation and Monitoring: Ongoing testing, observability, and feedback loops help identify drift and maintain output quality over time.

This architecture enables organizations to gradually enhance their agent systems without disrupting ongoing work.

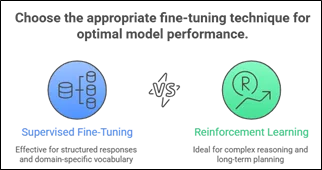

Choosing the Right Fine-Tuning Strategy

Selecting the right technique depends on workload readiness and business needs:

- Prompt engineering for quick prototyping

- Supervised fine-tuning for domain alignment

- Preference-based optimization for quality and safety

- Advanced reasoning optimization for complex orchestration

Not every use case requires the most advanced method. A staged approach helps manage cost, complexity, and risk while delivering real value.

Conclusion

Multi-agent orchestration represents a significant leap in enterprise AI capabilities, allowing systems to solve complex, multi-step problems together. However, the success of these systems heavily relies on advanced fine-tuning techniques that align models with domain knowledge, reasoning needs, and organizational standards.

Drop a query if you have any questions regarding Multi-agent orchestration and we will get back to you quickly.

Empowering organizations to become ‘data driven’ enterprises with our Cloud experts.

- Reduced infrastructure costs

- Timely data-driven decisions

About CloudThat

CloudThat is an award-winning company and the first in India to offer cloud training and consulting services worldwide. As a Microsoft Solutions Partner, AWS Advanced Tier Training Partner, and Google Cloud Platform Partner, CloudThat has empowered over 850,000 professionals through 600+ cloud certifications winning global recognition for its training excellence including 20 MCT Trainers in Microsoft’s Global Top 100 and an impressive 12 awards in the last 8 years. CloudThat specializes in Cloud Migration, Data Platforms, DevOps, IoT, and cutting-edge technologies like Gen AI & AI/ML. It has delivered over 500 consulting projects for 250+ organizations in 30+ countries as it continues to empower professionals and enterprises to thrive in the digital-first world.

FAQs

1. What is multi-agent orchestration?

ANS: – It is an AI design pattern in which multiple specialized agents collaborate to complete complex workflows under the guidance of an orchestrator.

2. Why isn't prompt engineering sufficient for enterprise use cases?

ANS: – Prompt engineering has lacked depth, consistency, and governance to perform high-stakes or domain-specific applications.

3. How do advanced optimization methods improve reasoning?

ANS: – They elicit the optimal whole reasoning paths or set of outputs, as opposed to individual responses, for overall more coherent and better results.

WRITTEN BY Akanksha Choudhary

Akanksha works as a Research Associate at CloudThat, specializing in data analysis and cloud-native solutions. She designs scalable data pipelines leveraging AWS services such as AWS Lambda, Amazon API Gateway, Amazon DynamoDB, and Amazon S3. She is skilled in Python and frontend technologies including React, HTML, CSS, and Tailwind CSS.

Login

Login

January 29, 2026

January 29, 2026 PREV

PREV

Comments