
Overview

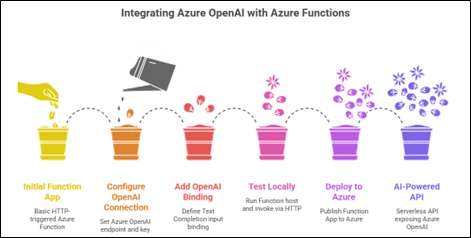

Azure Functions is a serverless compute platform well suited to exposing backend logic through HTTP or event triggers. By connecting it to Azure OpenAI, you can host AI capabilities (text completion, chat, etc.) behind serverless APIs without managing servers. For example, an HTTP-triggered function can use Azure OpenAI GPT models to answer queries or generate content on demand. This integration lets you build intelligent microservices (e.g., chatbots, summarizers, QA tools) that scale automatically. In this tutorial, we walk through building a Python-based Azure Function that calls Azure OpenAI's text completion capability through the Azure OpenAI extension's text completion input binding for Azure Functions.


Prerequisites

- An Azure subscription with permission to create resources.

- Azure CLI and Azure Functions Core Tools (v4) installed locally, plus a Python version that Azure Functions currently supports (for example, 3.11 or 3.12).

- An Azure OpenAI resource (created via the Azure portal or CLI) with a deployed model (e.g., gpt-35-turbo). Note the resource's endpoint URI and one of its API keys from the Azure portal or the Azure AI Foundry portal.
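To check the local tooling quickly before you start, you could run a small script like the one below. It is a sketch: it assumes the Azure CLI and Core Tools are on your PATH as az and func, and the minimum Python version (3.10) is our own assumption, so align it with the versions Azure Functions supports at the time you read this. The helper name missing_prerequisites is hypothetical.

```python
import shutil
import sys
from typing import Callable, List, Optional, Sequence, Tuple

REQUIRED_TOOLS = ("az", "func")  # Azure CLI and Azure Functions Core Tools
MIN_PYTHON = (3, 10)  # assumption: adjust to the versions Azure Functions supports


def missing_prerequisites(
    which: Callable[[str], Optional[str]] = shutil.which,
    version: Tuple[int, ...] = tuple(sys.version_info[:2]),
    tools: Sequence[str] = REQUIRED_TOOLS,
) -> List[str]:
    """Return a list of problems found (empty when everything looks fine)."""
    problems = []
    for tool in tools:
        # shutil.which returns None when the executable is not on PATH.
        if which(tool) is None:
            problems.append(f"'{tool}' not found on PATH")
    major, minor = version[0], version[1]
    if (major, minor) < MIN_PYTHON:
        problems.append(
            f"Python {MIN_PYTHON[0]}.{MIN_PYTHON[1]}+ expected, found {major}.{minor}"
        )
    return problems


if __name__ == "__main__":
    issues = missing_prerequisites()
    print("\n".join(issues) if issues else "All prerequisites found.")
```

The which and version parameters are injectable so the check can be exercised without touching your real PATH.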

Step-by-Step Guide

1. Create a Python Function App

First, create a new Functions project and an HTTP-triggered function:

- Open a terminal and initialize a new Function App project:

```bash
func init MyFuncApp --worker-runtime python
```

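This creates a folder MyFuncApp with a Python Functions project. The code in this tutorial uses the Python v2 programming model (decorators such as @app.route), so make sure the project uses it: recent Core Tools versions create v2 projects by default, and you can request it explicitly with --model V2.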

- Enter the project directory and add an HTTP-triggered function:

```bash
cd MyFuncApp
func new --template "HTTP trigger" --name ChatFunction
```

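This scaffolds an HTTP-triggered function named ChatFunction. In the v2 model, the template adds a decorated function to function_app.py; the older v1 model instead creates a ChatFunction folder containing __init__.py and function.json. By default, the HTTP trigger uses the function authorization level (a function key is required) and is served at a route like /api/ChatFunction.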

- Ensure the project’s host.json file uses the preview extension bundle so that the OpenAI binding is available. Add (or replace) the extensionBundle section with the preview bundle, e.g.:

```json
{
  "version": "2.0",
  "extensionBundle": {
    "id": "Microsoft.Azure.Functions.ExtensionBundle.Preview",
    "version": "[4.0.0, 5.0.0)"
  }
}
```

This enables the preview Azure OpenAI extension in Functions. Save and close host.json.
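If you prefer to script this change (for example, as part of a setup step), the sketch below loads host.json, sets the preview bundle from the example above, and writes the file back while keeping any other settings such as logging. The helper names with_preview_bundle and update_host_json are hypothetical.

```python
import json
from pathlib import Path

# Bundle values taken from the host.json example in this step.
PREVIEW_BUNDLE = {
    "id": "Microsoft.Azure.Functions.ExtensionBundle.Preview",
    "version": "[4.0.0, 5.0.0)",
}


def with_preview_bundle(host: dict) -> dict:
    """Return a copy of a host.json document with the preview extension bundle set."""
    updated = dict(host)  # shallow copy so the caller's dict is not mutated
    updated.setdefault("version", "2.0")
    updated["extensionBundle"] = dict(PREVIEW_BUNDLE)
    return updated


def update_host_json(path: str = "host.json") -> None:
    """Rewrite host.json in place with the preview bundle (creates it if missing)."""
    file = Path(path)
    host = json.loads(file.read_text(encoding="utf-8")) if file.exists() else {}
    file.write_text(json.dumps(with_preview_bundle(host), indent=2), encoding="utf-8")


if __name__ == "__main__":
    update_host_json()
```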

2. Configure the Azure OpenAI Connection

Your function needs to know which Azure OpenAI endpoint and credentials to use. Rather than hard-coding them, store them as application settings: in local.settings.json for local development, and in the Function App's application settings in Azure. For example, local.settings.json might contain:

```json
{
  "IsEncrypted": false,
  "Values": {
    "FUNCTIONS_WORKER_RUNTIME": "python",
    "AzureWebJobsStorage": "UseDevelopmentStorage=true",
    "AZURE_OPENAI_ENDPOINT": "<YOUR_OPENAI_ENDPOINT>",
    "AZURE_OPENAI_KEY": "<YOUR_OPENAI_KEY>",
    "CHAT_MODEL_DEPLOYMENT_NAME": "gpt35"
  }
}
```

Here:

- AZURE_OPENAI_ENDPOINT is the URI of your Azure OpenAI resource (e.g., https://YOUR-OPENAI-RESOURCE.openai.azure.com/).

- AZURE_OPENAI_KEY is one of the API keys for that resource.

- CHAT_MODEL_DEPLOYMENT_NAME holds the name you gave your model deployment in Azure (e.g., gpt-35-turbo deployed as gpt35). The function code references it as %CHAT_MODEL_DEPLOYMENT_NAME%, which the Functions host resolves from the app settings.

- If you use managed identities (the recommended, more secure approach), you would instead set the binding's AIConnectionName and grant the Function App's identity access to the Azure OpenAI resource, rather than storing keys. For simplicity, this tutorial uses key-based authentication.
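Because a missing or unreplaced setting is an easy mistake to make, it can help to check the values before starting the host. The sketch below reads local.settings.json and reports missing or placeholder values for the three settings used in this tutorial. The helper names and the "https://" check are our own assumptions, not part of the Azure OpenAI extension.

```python
import json
from pathlib import Path
from typing import Dict, List

# Setting names used in this tutorial's local.settings.json.
REQUIRED_SETTINGS = (
    "AZURE_OPENAI_ENDPOINT",
    "AZURE_OPENAI_KEY",
    "CHAT_MODEL_DEPLOYMENT_NAME",
)


def check_settings(values: Dict[str, str]) -> List[str]:
    """Return problems found in a 'Values' dictionary (empty list if none)."""
    problems = []
    for name in REQUIRED_SETTINGS:
        value = values.get(name, "").strip()
        if not value:
            problems.append(f"{name} is missing or empty")
        elif value.startswith("<") and value.endswith(">"):
            # Placeholders such as <YOUR_OPENAI_KEY> were never replaced.
            problems.append(f"{name} still contains a placeholder")
    endpoint = values.get("AZURE_OPENAI_ENDPOINT", "")
    if endpoint and not endpoint.startswith("<") and not endpoint.startswith("https://"):
        problems.append("AZURE_OPENAI_ENDPOINT should be an https:// URL")
    return problems


def check_local_settings(path: str = "local.settings.json") -> List[str]:
    """Load local.settings.json and validate its Values section."""
    data = json.loads(Path(path).read_text(encoding="utf-8"))
    return check_settings(data.get("Values", {}))


if __name__ == "__main__":
    print(check_local_settings() or "Settings look complete.")
```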

3. Add and Configure the OpenAI Binding

The Azure OpenAI extension comes from the preview extension bundle configured in host.json, so no separate extension install is needed. With that in place, you can define a Text Completion input binding on your function. In the Python v2 programming model, this is the @app.text_completion_input decorator. For example, open function_app.py (the file generated for a v2 project) and replace its contents with the following:

```python
import json
import logging

import azure.functions as func

app = func.FunctionApp()


@app.route(route="whois/{name}", methods=["GET"])
@app.text_completion_input(
    arg_name="response",
    prompt="Who is {name}?",
    chat_model="%CHAT_MODEL_DEPLOYMENT_NAME%",
    max_tokens="100",
)
def whois(req: func.HttpRequest, response: str) -> func.HttpResponse:
    """HTTP GET /whois/{name}: calls Azure OpenAI to get info about the name."""
    logging.info("Request for %s", req.route_params.get("name"))
    # The binding passes the completion result in as a JSON string.
    data = json.loads(response)
    content = data.get("content", "")
    return func.HttpResponse(content, status_code=200)
```

This example does the following:

- The HTTP trigger listens on the route /whois/{name}. A GET request to …/api/whois/Alice sets name="Alice".

- The @app.text_completion_input binding sends the prompt "Who is {name}?" to Azure OpenAI before your function runs, using the model deployment named by chat_model. The {name} placeholder in the prompt template is filled from the route parameter.

- The binding's output (a JSON string) is passed into the function as the response argument. The code parses it with json.loads(response) and reads its "content" field, which holds the generated text.

- The function returns that text in the HTTP response.
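To make the data flow concrete, the sketch below imitates, in plain Python, the two steps described above: filling the {name} placeholder from the route parameters, and reading the "content" field from the JSON string the binding provides. It is only an illustration with hypothetical helper names; the real substitution is performed by the Functions host, and the sample response is made up and contains only the field this tutorial reads.

```python
import json
import re
from typing import Dict

# Matches placeholders such as {name} in a prompt template.
_PLACEHOLDER = re.compile(r"\{(\w+)\}")


def render_prompt(template: str, route_params: Dict[str, str]) -> str:
    """Illustrative stand-in for binding-expression substitution."""
    def replace(match: "re.Match[str]") -> str:
        # Leave unknown placeholders untouched rather than failing.
        return route_params.get(match.group(1), match.group(0))

    return _PLACEHOLDER.sub(replace, template)


def extract_content(response: str) -> str:
    """Parse the binding's JSON string and return its 'content' field (or '')."""
    return json.loads(response).get("content", "")


if __name__ == "__main__":
    print(render_prompt("Who is {name}?", {"name": "Alice"}))  # Who is Alice?
    fake_response = json.dumps({"content": "Sample answer about Alice."})  # made up
    print(extract_content(fake_response))  # Sample answer about Alice.
```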

4. Test Locally

Before deploying, test the function on your machine:

- Start the Function host:

```bash
func start
```

- The console lists the functions it found and their local HTTP endpoints, for example the whois function at http://localhost:7071/api/whois/{name}.

- Invoke via HTTP: in another terminal or a REST client, call the function. For example, using curl:

```bash
curl "http://localhost:7071/api/whois/Azure"
```

- This should return model-generated text such as "Azure is …".

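(In Azure, the function authorization level is the default, so callers need a function key unless you set auth_level=func.AuthLevel.ANONYMOUS on the route or http_auth_level on the FunctionApp. When running locally with Core Tools, key checks are skipped.)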
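If you would rather call the endpoint from Python than from curl, the standard-library sketch below builds the /api/whois/{name} URL (percent-encoding the name) and sends a GET request. The base URL defaults to the local Core Tools address; the helper names are hypothetical, and an optional function key is appended as the code query parameter, the same form used by the Azure URL in the deployment step.

```python
import urllib.parse
import urllib.request
from typing import Optional

LOCAL_BASE_URL = "http://localhost:7071"  # default Core Tools address


def whois_url(name: str, base_url: str = LOCAL_BASE_URL, code: Optional[str] = None) -> str:
    """Build the whois URL; the name is percent-encoded as a single path segment."""
    url = f"{base_url.rstrip('/')}/api/whois/{urllib.parse.quote(name, safe='')}"
    if code:
        # Function keys can be passed as the 'code' query parameter.
        url += "?" + urllib.parse.urlencode({"code": code})
    return url


def ask_whois(name: str, base_url: str = LOCAL_BASE_URL, code: Optional[str] = None) -> str:
    """Send the GET request and return the response body as text."""
    with urllib.request.urlopen(whois_url(name, base_url, code), timeout=30) as resp:
        return resp.read().decode("utf-8")


if __name__ == "__main__":
    print(ask_whois("Azure"))  # requires `func start` to be running
```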

- Log output: Use Python's standard logging module to add debug/info messages to your function. The Functions Python worker forwards these logs to the console when running locally and to Application Insights in Azure (if it is enabled for the app). For example, logging.info("Request for %s", name) appears in the console or in the Azure logs, which helps when diagnosing issues.

- Using the request body (optional): You could also design a POST endpoint that takes JSON input. In that case, you'd use a binding prompt such as "{Prompt}" and call the function with a JSON body like {"Prompt": "Your question here"}. The {Prompt} expression is then filled from the body's Prompt property; the rest of the binding works the same way.
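For that POST variant, the client only needs to send a JSON body whose Prompt property matches the {Prompt} placeholder. The sketch below assumes a hypothetical route named genericcompletion (replace it with the route you actually define), uses only the standard library, and logs each call with the logging module.

```python
import json
import logging
import urllib.request

# Hypothetical route; replace with the route of your POST function.
DEFAULT_URL = "http://localhost:7071/api/genericcompletion"


def build_prompt_request(prompt: str, url: str = DEFAULT_URL) -> urllib.request.Request:
    """Create a POST request whose JSON body fills the {Prompt} binding expression."""
    body = json.dumps({"Prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_prompt(prompt: str, url: str = DEFAULT_URL) -> str:
    """Send the prompt and return the response body (the generated text)."""
    logging.info("Sending prompt of %d characters to %s", len(prompt), url)
    with urllib.request.urlopen(build_prompt_request(prompt, url), timeout=30) as resp:
        return resp.read().decode("utf-8")


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    print(send_prompt("Your question here"))
```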

5. Deploy to Azure

To publish your function app to Azure, you have several options. A simple way is to use the Functions Core Tools:

```bash
func azure functionapp publish <FUNCTION_APP_NAME>
```

This command packages your code and deploys it to the specified Function App in your Azure subscription. You must have already created a Function App resource (e.g., via az functionapp create or the Azure portal) and assigned it the Python runtime and a storage account.
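If you still need to create the Function App, one option is the Azure CLI. The Python sketch below only assembles an az functionapp create command and prints it for review; the resource names, region, and Python version are placeholders, and it assumes a Linux Consumption plan, so check the az functionapp create reference for the hosting plan you actually want. The helper name is hypothetical.

```python
import shlex
from typing import List


def functionapp_create_cmd(
    app_name: str,
    resource_group: str,
    storage_account: str,
    location: str = "eastus",
    python_version: str = "3.11",
) -> List[str]:
    """Assemble an `az functionapp create` command for a Linux Python app (sketch)."""
    return [
        "az", "functionapp", "create",
        "--name", app_name,
        "--resource-group", resource_group,
        "--storage-account", storage_account,
        "--consumption-plan-location", location,
        "--os-type", "Linux",
        "--runtime", "python",
        "--runtime-version", python_version,
        "--functions-version", "4",
    ]


if __name__ == "__main__":
    # Placeholder names; replace them before running.
    cmd = functionapp_create_cmd("my-func-app", "my-rg", "mystorageacct")
    # Print for review; to execute, pass the list to subprocess.run(cmd, check=True).
    print(shlex.join(cmd))
```

Building the command as a list (rather than one string) avoids shell-quoting problems when it is eventually passed to subprocess.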

After deployment, configure the same app settings (Azure OpenAI endpoint, key, and model deployment name) on the Function App in Azure, through the portal or the CLI, so the function knows where to connect. In the Azure portal, open your Function App and go to Settings → Environment variables → App settings (shown as Configuration → Application settings in older versions of the portal). Add AZURE_OPENAI_ENDPOINT, AZURE_OPENAI_KEY, and CHAT_MODEL_DEPLOYMENT_NAME there, making sure the setting names match exactly.
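The same settings can also be applied from the command line with az functionapp config appsettings set. The sketch below builds that command from a dictionary; the app name, resource group, and values are placeholders, and the helper name is hypothetical.

```python
import shlex
from typing import Dict, List


def appsettings_set_cmd(app_name: str, resource_group: str, settings: Dict[str, str]) -> List[str]:
    """Assemble an `az functionapp config appsettings set` command (sketch)."""
    # Each setting is passed as a separate NAME=VALUE argument after --settings.
    pairs = [f"{name}={value}" for name, value in settings.items()]
    return [
        "az", "functionapp", "config", "appsettings", "set",
        "--name", app_name,
        "--resource-group", resource_group,
        "--settings", *pairs,
    ]


if __name__ == "__main__":
    cmd = appsettings_set_cmd(
        "my-func-app",
        "my-rg",
        {
            "AZURE_OPENAI_ENDPOINT": "https://YOUR-OPENAI-RESOURCE.openai.azure.com/",
            "AZURE_OPENAI_KEY": "<YOUR_OPENAI_KEY>",
            "CHAT_MODEL_DEPLOYMENT_NAME": "gpt35",
        },
    )
    print(shlex.join(cmd))
```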

Finally, you can invoke the live function via its Azure URL. The function URL is typically:

https://<YOUR_APP_NAME>.azurewebsites.net/api/whois/{name}?code=<FUNCTION_KEY>
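As an alternative to putting the key in the URL, Azure Functions also accepts a function key in the x-functions-key request header, which keeps it out of URLs that may end up in logs. The sketch below builds such a request with the standard library; it assumes the <YOUR_APP_NAME>.azurewebsites.net host name pattern shown above, and the app name and key are placeholders.

```python
import urllib.parse
import urllib.request


def azure_whois_request(app_name: str, name: str, function_key: str) -> urllib.request.Request:
    """Build a GET request to the deployed whois function, sending the key as a header."""
    url = (
        f"https://{app_name}.azurewebsites.net/api/whois/"
        f"{urllib.parse.quote(name, safe='')}"
    )
    return urllib.request.Request(url, headers={"x-functions-key": function_key})


if __name__ == "__main__":
    # Placeholders: replace with your Function App name and a function key.
    req = azure_whois_request("<YOUR_APP_NAME>", "Azure", "<FUNCTION_KEY>")
    with urllib.request.urlopen(req, timeout=30) as resp:
        print(resp.read().decode("utf-8"))
```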

Conclusion

In this tutorial, we created a Python Azure Function that uses the Azure OpenAI input binding to call Azure OpenAI's text completion capability. We covered initializing the function project, configuring the OpenAI connection, writing the function code, and testing it locally and in Azure. We also touched on secure practices (keeping secrets in app settings rather than in code, and preferring managed identities) and on logging for monitoring. You can build on this setup, for example with chat completions, embeddings, or RAG patterns, to add AI functionality to your serverless applications.

Drop a query if you have any questions regarding Azure OpenAI and we will get back to you quickly.


About CloudThat

CloudThat is an award-winning company and the first in India to offer cloud training and consulting services worldwide. As a Microsoft Solutions Partner, AWS Advanced Tier Training Partner, and Google Cloud Platform Partner, CloudThat has empowered over 850,000 professionals through 600+ cloud certifications, winning global recognition for its training excellence, including 20 MCT Trainers in Microsoft's Global Top 100 and an impressive 12 awards in the last 8 years. CloudThat specializes in Cloud Migration, Data Platforms, DevOps, IoT, and cutting-edge technologies like Gen AI & AI/ML. It has delivered over 500 consulting projects for 250+ organizations in 30+ countries as it continues to empower professionals and enterprises to thrive in the digital-first world.

FAQs

1. What is the benefit of integrating Azure OpenAI with Azure Functions?

ANS: – Integrating Azure OpenAI with Azure Functions allows you to expose AI capabilities (chat, text generation, summarization, Q&A) as serverless APIs. You don't need to manage servers, scaling is automatic, and on consumption-based plans you pay only for execution time plus token usage. This makes it well suited to building lightweight, scalable AI microservices.

2. Do I need to use the OpenAI SDK in my Python code?

ANS: – No. When using the Azure OpenAI Functions binding, you do not need to call the OpenAI SDK directly. The binding handles:

- Request construction

- Authentication

- API calls to Azure OpenAI
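For comparison, here is roughly what the binding saves you from writing: a direct call to the Azure OpenAI chat completions REST endpoint with key-based authentication. This is a sketch, not how the extension is implemented internally; the api-version value is an assumption (use one your resource supports), the helper names are hypothetical, and the request is only sent when the script is run directly.

```python
import json
import os
import urllib.parse
import urllib.request

API_VERSION = "2024-02-01"  # assumption: pick a version your resource supports


def chat_completion_request(endpoint: str, key: str, deployment: str, prompt: str,
                            max_tokens: int = 100) -> urllib.request.Request:
    """Build a POST request for Azure OpenAI chat completions using an API key."""
    url = (
        f"{endpoint.rstrip('/')}/openai/deployments/{urllib.parse.quote(deployment)}"
        f"/chat/completions?api-version={API_VERSION}"
    )
    body = {"messages": [{"role": "user", "content": prompt}], "max_tokens": max_tokens}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": key},
        method="POST",
    )


def first_message(response_body: bytes) -> str:
    """Extract the generated text from a chat completions response."""
    return json.loads(response_body)["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = chat_completion_request(
        os.environ["AZURE_OPENAI_ENDPOINT"],
        os.environ["AZURE_OPENAI_KEY"],
        os.environ["CHAT_MODEL_DEPLOYMENT_NAME"],
        "Who is Alice?",
    )
    with urllib.request.urlopen(req, timeout=60) as resp:
        print(first_message(resp.read()))
```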

WRITTEN BY Shantanu Singh

Shantanu Singh is a Research Associate at CloudThat with expertise in Data Analytics and Generative AI applications. Driven by a passion for technology, he has chosen data science as his career path and is committed to continuous learning. Shantanu enjoys exploring emerging technologies to enhance both his technical knowledge and interpersonal skills. His dedication to work, eagerness to embrace new advancements, and love for innovation make him a valuable asset to any team.


January 28, 2026
