
Introduction

Building a successful generative AI SaaS platform requires striking the right balance between scalability and cost efficiency. This challenge becomes even more pronounced in multi-tenant environments, where customer usage patterns range from steady, predictable consumption to sudden, high-demand spikes. Traditional cost management methods often fall short, making it difficult to attribute expenses accurately across tenants and to respond proactively to anomalies. To address these complexities, organizations need a monitoring and alerting strategy that goes beyond basic binary notifications.

What are application inference profiles?

Application inference profiles in Amazon Bedrock allow you to organize and monitor model usage at a detailed level by associating metadata with inference requests. This feature creates clear boundaries between applications, teams, or customers even when they all use the same foundation models. By tagging requests with identifiers like TenantID, ApplicationID, or business unit details, you can track consumption, allocate costs accurately, and implement chargeback models based on actual usage. When combined with AWS tagging strategies, inference profiles provide valuable insights for performance optimization and cost control across multi-tenant environments.
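As a minimal sketch of how such a profile could be created with boto3, the snippet below builds the request for the Bedrock `create_inference_profile` call and keeps the actual AWS call in a separate function. The profile name, tag values, and model ARN are hypothetical placeholders, not values taken from this solution.

```python
from typing import Any


def build_profile_request(name: str, model_arn: str, tags: dict[str, str]) -> dict[str, Any]:
    """Build keyword arguments for the Bedrock CreateInferenceProfile call."""
    return {
        "inferenceProfileName": name,
        "description": f"Cost tracking profile for {name}",
        # copyFrom points at the foundation model (or system-defined profile) to wrap.
        "modelSource": {"copyFrom": model_arn},
        # Bedrock expects tags as a list of {"key": ..., "value": ...} pairs.
        "tags": [{"key": k, "value": v} for k, v in sorted(tags.items())],
    }


def create_profile(request: dict[str, Any]) -> str:
    """Create the profile and return its ARN (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the builder above works without AWS access

    client = boto3.client("bedrock")
    response = client.create_inference_profile(**request)
    return response["inferenceProfileArn"]


request = build_profile_request(
    name="tenant-a-chat",  # hypothetical profile name
    model_arn="arn:aws:bedrock:us-east-1::foundation-model/anthropic.claude-3-haiku-20240307-v1:0",
    tags={"TenantID": "tenant-a", "ApplicationID": "chat"},  # hypothetical tag values
)
print(request["tags"])
```

Passing `request` to `create_profile` would return the ARN of the new profile, which is then used as the `modelId` for inference calls so that usage is attributed to the tagged profile.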

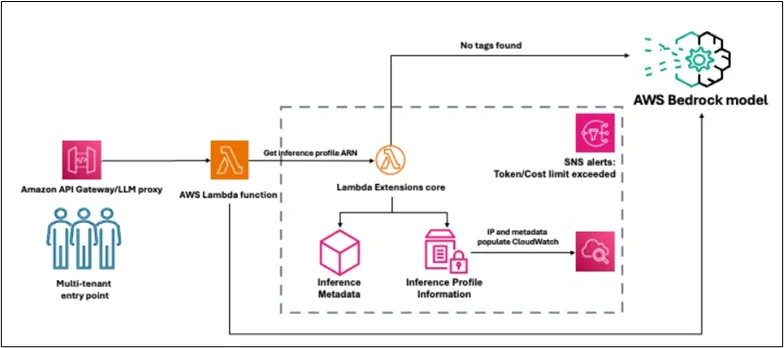

Solution overview

The solution handles the work of collecting and aggregating usage data across many tenants, retaining historical metrics for trend analysis, and presenting actionable insights in easy-to-read dashboards. Because you can modify its components to fit your organization's needs, it gives you the visibility and control required to manage your Amazon Bedrock costs.

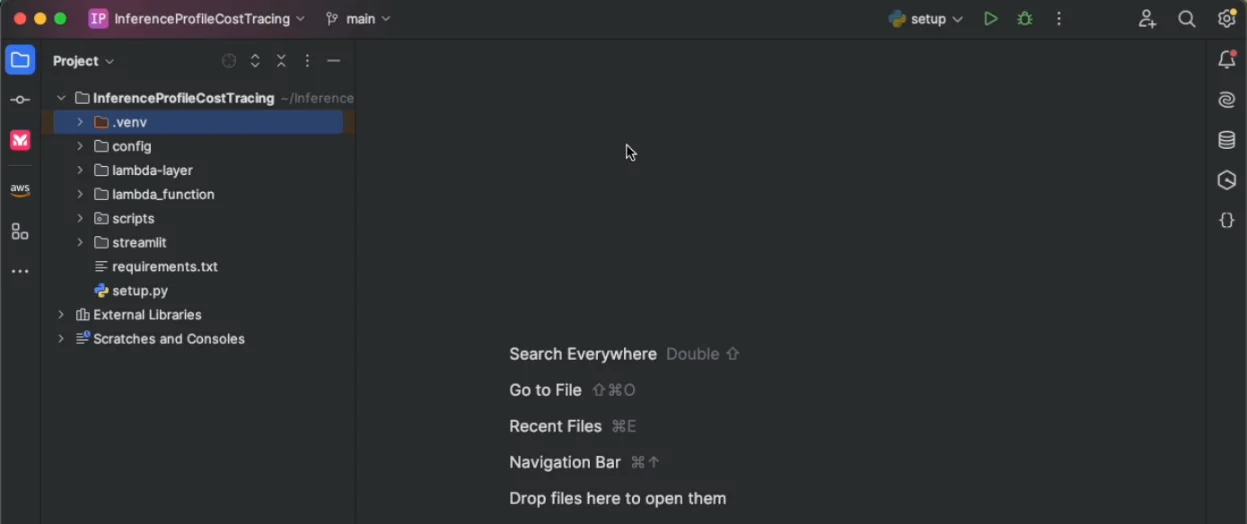

Deploy the solution

1. Establish the virtual environment:

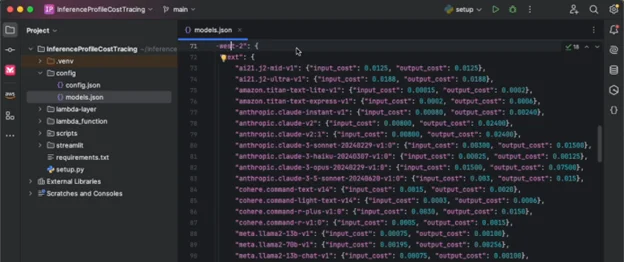

2. Update models.json: Review the models listed in the file. Keep the default settings, or edit the JSON file so that the input and output token prices match your organization’s contract.

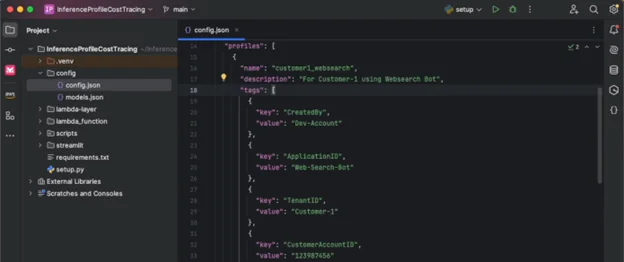
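To illustrate how per-token pricing turns into a cost figure, here is a small sketch. The JSON layout, the key names (`input_cost_per_1k`, `output_cost_per_1k`), the model name, and the prices are all assumptions made for the example; the real models.json in the solution may be structured differently.

```python
import json

# Hypothetical models.json content: USD prices per 1,000 tokens.
MODELS_JSON = """
{
  "example-model": {"input_cost_per_1k": 0.003, "output_cost_per_1k": 0.015}
}
"""


def token_cost(pricing: dict, model_id: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one request from its token counts."""
    rates = pricing[model_id]
    input_cost = input_tokens / 1000 * rates["input_cost_per_1k"]
    output_cost = output_tokens / 1000 * rates["output_cost_per_1k"]
    return round(input_cost + output_cost, 6)


pricing = json.loads(MODELS_JSON)
cost = token_cost(pricing, "example-model", input_tokens=2000, output_tokens=500)
print(cost)
```

Input and output tokens are priced separately because providers typically charge different rates for each, which is why the file needs both values per model.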

3. Update config.json: Edit config.json to specify the profiles you want to set up for cost tracking. A single profile can carry multiple key-value pairs as tags. At runtime, every incoming request should pass either these tags or the profile name as HTTP headers.

You also configure an admin email alias that is notified when a threshold is crossed, and a dedicated Amazon Simple Storage Service (Amazon S3) bucket for storing configuration artifacts.
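The header-based lookup described in this step could work roughly as follows. This is a sketch only: the profile list shape, the `inference-profile-name` header, and the tenant values are hypothetical and are not taken from the solution's config.json.

```python
from typing import Optional

# Hypothetical shape of the profiles section of config.json.
PROFILES = [
    {"name": "tenant-a-chat", "tags": {"TenantID": "tenant-a", "ApplicationID": "chat"}},
    {"name": "tenant-b-search", "tags": {"TenantID": "tenant-b", "ApplicationID": "search"}},
]


def resolve_profile(headers: dict, profiles: list) -> Optional[str]:
    """Return the profile name selected by the request headers, or None."""
    # HTTP header names are case-insensitive, so normalize before comparing.
    normalized = {key.lower(): value for key, value in headers.items()}

    # An explicit profile name takes priority (hypothetical header name).
    requested = normalized.get("inference-profile-name")
    for profile in profiles:
        if requested == profile["name"]:
            return profile["name"]

    # Otherwise every tag of a profile must appear as a header with the same value.
    for profile in profiles:
        if all(normalized.get(k.lower()) == v for k, v in profile["tags"].items()):
            return profile["name"]
    return None


print(resolve_profile({"TenantID": "tenant-b", "ApplicationID": "search"}, PROFILES))
```

Requiring all tags of a profile to match avoids attributing a request to the wrong tenant when only some of the headers are present.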

4. Deploy solution resources and establish user roles.

Run the following command in the terminal to generate the assets, including the user roles, after making changes to config.json and models.json:

    python setup.py --create-user-roles

The setup command performs the following steps:

- Creates the inference profiles

- Creates an Amazon CloudWatch dashboard that records the metrics for each profile

- Deploys the inference AWS Lambda function, which calls the Amazon Bedrock Converse API and retrieves the inference metadata and metrics associated with the inference profile

- Configures the Amazon SNS alerts

- Creates the Amazon API Gateway endpoint that invokes the AWS Lambda function
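To make the metrics step more concrete, the sketch below shows how a Lambda function might turn the `usage` and `metrics` fields of a Converse API response into CloudWatch metric entries. The metric names, the `InferenceProfile` dimension, and the `BedrockUsage` namespace are assumptions for illustration; the deployed function may record different ones.

```python
def usage_to_metric_data(response: dict, profile_name: str) -> list:
    """Turn the usage block of a Converse response into CloudWatch MetricData entries."""
    usage = response["usage"]
    # Hypothetical dimension used to separate metrics per inference profile.
    dimensions = [{"Name": "InferenceProfile", "Value": profile_name}]
    values = {
        "InputTokens": usage["inputTokens"],
        "OutputTokens": usage["outputTokens"],
        "LatencyMs": response["metrics"]["latencyMs"],
    }
    return [
        {"MetricName": name, "Dimensions": dimensions, "Value": float(value)}
        for name, value in values.items()
    ]


# Trimmed-down example of the fields a Converse response carries.
sample_response = {
    "usage": {"inputTokens": 120, "outputTokens": 45, "totalTokens": 165},
    "metrics": {"latencyMs": 830},
}
metric_data = usage_to_metric_data(sample_response, "tenant-a-chat")
print(metric_data)

# With boto3, this list could be published using:
# boto3.client("cloudwatch").put_metric_data(Namespace="BedrockUsage", MetricData=metric_data)
```

Publishing one data point per request with the profile as a dimension is what lets the dashboard and alarms aggregate tokens and requests per profile.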

Dashboards and Alarms

The solution produces the following dashboards and alerts:

- BedrockTokenCostAlarm-{profile_name} – Alert when total token cost for {profile_name} exceeds {cost_threshold} in 5 minutes

- BedrockTokensPerMinuteAlarm-{profile_name} – Alert when tokens per minute for {profile_name} exceed {tokens_per_min_threshold}

- BedrockRequestsPerMinuteAlarm-{profile_name} – Alert when requests per minute for {profile_name} exceed {requests_per_min_threshold}
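As a rough sketch, the cost alarm above could be defined with the CloudWatch `put_metric_alarm` parameters shown below. The alarm name pattern and the 5-minute window come from the list above; the namespace, metric name, dimension, and the sample topic ARN are assumptions, since the solution's actual values are not shown here.

```python
def build_cost_alarm(profile_name: str, cost_threshold: float, sns_topic_arn: str) -> dict:
    """Build put_metric_alarm keyword arguments for the per-profile token cost alarm."""
    return {
        "AlarmName": f"BedrockTokenCostAlarm-{profile_name}",
        "AlarmDescription": (
            f"Total token cost for {profile_name} exceeds {cost_threshold} in 5 minutes"
        ),
        "Namespace": "BedrockUsage",  # hypothetical namespace
        "MetricName": "TokenCost",  # hypothetical metric name
        "Dimensions": [{"Name": "InferenceProfile", "Value": profile_name}],
        "Statistic": "Sum",  # total cost over the evaluation window
        "Period": 300,  # 5 minutes, in seconds
        "EvaluationPeriods": 1,
        "Threshold": cost_threshold,
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "notBreaching",  # idle tenants should not raise alarms
        "AlarmActions": [sns_topic_arn],  # SNS topic that emails the admin
    }


alarm = build_cost_alarm(
    profile_name="tenant-a-chat",  # hypothetical profile
    cost_threshold=5.0,
    sns_topic_arn="arn:aws:sns:us-east-1:123456789012:bedrock-cost-alerts",  # placeholder ARN
)
print(alarm["AlarmName"])

# With boto3, the alarm could be created using:
# boto3.client("cloudwatch").put_metric_alarm(**alarm)
```

The tokens-per-minute and requests-per-minute alarms would follow the same pattern with a 60-second period and their own metric names and thresholds.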

The following states are available for a metric alarm:

- OK – The metric or expression is within the defined threshold

- ALARM – The metric or expression is outside of the defined threshold

- INSUFFICIENT_DATA – The alarm has just started, the metric is not available, or not enough data is available for the metric to determine the alarm state

When an alarm is added to a dashboard, it turns red in the ALARM state and gray in the INSUFFICIENT_DATA state. In the OK state, it is displayed without color.

An alarm invokes its actions only when it changes state. In this solution, a transition from OK to ALARM emails the admin listed in your config.json file through your SNS subscription. You can define further actions for transitions between the OK, ALARM, and INSUFFICIENT_DATA states.

Conclusion

By incorporating historical trends, customer tiers, and contextual factors into an alerting strategy, you can differentiate between normal growth and unexpected spikes, ensuring timely and appropriate responses. Whether through notifications, automated customer engagement, or rate-limiting actions, this framework empowers teams to maintain cost efficiency while supporting scalability and performance across a diverse customer base.

Drop a query if you have any questions regarding Amazon Bedrock and we will get back to you quickly.

FAQs

1. Why use application inference profiles in Amazon Bedrock?

ANS: – They enable tagging and tracking requests per tenant, allowing accurate cost allocation and usage monitoring.

2. Can alert thresholds be customized?

ANS: – Yes, thresholds for cost, token usage, and requests can be tailored to tenant SLAs and usage patterns.

3. How does this prevent overruns?

ANS: – Real-time alerts detect unusual spikes and trigger actions like notifications or rate limits to control costs.

WRITTEN BY Aayushi Khandelwal

Aayushi is a data and AIoT professional at CloudThat, specializing in generative AI technologies. She is passionate about building intelligent, data-driven solutions powered by advanced AI models. With a strong foundation in machine learning, natural language processing, and cloud services, Aayushi focuses on developing scalable systems that deliver meaningful insights and automation. Her expertise includes working with tools like Amazon Bedrock, AWS Lambda, and various open-source AI frameworks.

August 18, 2025
