Overview

The growth of generative AI, which spans large language models (LLMs), agents, and retrieval-augmented generation (RAG) systems, calls for stronger end-to-end machine learning lifecycle management. With MLflow 3.0 available as a fully managed service on Amazon SageMaker AI, organizations can speed up their generative AI workflows through experiment tracking, a model registry, and automated deployment. This blog discusses how MLflow 3.0 on Amazon SageMaker supports generative AI development, presents its key features, details best practices for setup, and covers common questions and current limitations.

Introduction

Generative AI models have transformed content generation, automated reasoning, and data generation. Nevertheless, developing, testing, and deploying these models at scale can be overwhelmingly complex. MLflow, an open-source platform for ML lifecycle management, has emerged as a standard for experiment tracking, reproducibility, and deployment.

Configure Your Environment to Use Amazon SageMaker Managed MLflow Tracking Server

Setting up your environment to leverage Amazon SageMaker’s managed MLflow tracking server involves several best practices:

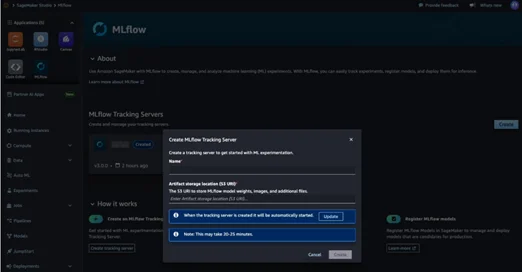

- Set Up the Managed MLflow Service

- Log in to your Amazon SageMaker console and enable MLflow features.

- The tracking server is provisioned and managed by AWS, which helps to reduce operational workload.

- Optimization tip: Choose a tracking server size that matches your team size and experiment volume. Start small to control costs and scale up later if needed.
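The provisioning step above can also be scripted. The following is a minimal sketch, assuming boto3 is installed and AWS credentials are configured; the server name, bucket, and role ARN are hypothetical placeholders, and the helper function names are ours rather than part of any AWS SDK.

```python
def build_tracking_server_request(name, artifact_bucket, role_arn, size="Small"):
    """Assemble keyword arguments for SageMaker's CreateMlflowTrackingServer call."""
    if size not in {"Small", "Medium", "Large"}:
        raise ValueError(f"unsupported tracking server size: {size}")
    return {
        "TrackingServerName": name,
        "ArtifactStoreUri": f"s3://{artifact_bucket}/mlflow",
        "TrackingServerSize": size,  # start small, scale up later
        "RoleArn": role_arn,
    }


def create_tracking_server(request):
    """Submit the request; needs boto3 and AWS credentials at call time."""
    import boto3  # imported lazily so the builder above works without AWS access

    return boto3.client("sagemaker").create_mlflow_tracking_server(**request)


# All values below are hypothetical placeholders.
request = build_tracking_server_request(
    name="genai-experiments",
    artifact_bucket="example-mlflow-artifacts",
    role_arn="arn:aws:iam::123456789012:role/ExampleMlflowRole",
)
print(request)
```

Calling `create_tracking_server(request)` would then start provisioning. Keeping the request builder separate from the AWS call makes the configuration easy to review and test before anything is created.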

- Configure Artifact Storage in Amazon S3

- In your MLflow tracking server settings, ensure that experiment artifacts and models are saved directly to Amazon S3.

- Amazon S3 provides durable, scalable storage that suits large generative AI model artifacts.

- Cost optimization: Apply Amazon S3 lifecycle rules to automatically move older artifacts to more cost-effective cold storage once they reach a set age. Lifecycle rules act on object age rather than on access patterns; S3 Intelligent-Tiering is the option for access-based tiering.
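A lifecycle rule like the one described above might look as follows. This is a sketch under assumptions: the bucket name, the `mlflow/` prefix, and the 90-day threshold are illustrative, and the helper names are hypothetical.

```python
def build_lifecycle_rule(prefix, days_to_cold, storage_class="GLACIER"):
    """Build an S3 lifecycle configuration that archives objects under a prefix."""
    rule_id = "archive-" + prefix.strip("/").replace("/", "-")
    return {
        "Rules": [
            {
                "ID": rule_id,
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                # Transition objects once they are `days_to_cold` days old.
                "Transitions": [{"Days": days_to_cold, "StorageClass": storage_class}],
            }
        ]
    }


def apply_lifecycle_rule(bucket, configuration):
    """Attach the configuration to a bucket; needs boto3 and AWS credentials."""
    import boto3  # lazy import keeps the builder usable offline

    boto3.client("s3").put_bucket_lifecycle_configuration(
        Bucket=bucket, LifecycleConfiguration=configuration
    )


# Hypothetical prefix and retention period.
lifecycle = build_lifecycle_rule(prefix="mlflow/", days_to_cold=90)
print(lifecycle["Rules"][0]["ID"])
```

Note that `put_bucket_lifecycle_configuration` replaces the bucket's existing lifecycle configuration, so any rules already in place need to be included in the same call.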

- Provide Secure Access Using AWS IAM Roles

- Control MLflow and Amazon SageMaker permissions using AWS Identity and Access Management (IAM) roles.

- Protect experiment data and model artifacts with least-privilege access.

- Security tip: Use dedicated roles for development, testing, and production to enforce stronger security boundaries.
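To illustrate least-privilege roles per environment, the sketch below builds two IAM policy documents: one that can read and write runs (development) and one that can only read them (production). The `sagemaker-mlflow:` action names are listed as we understand them and should be verified against the AWS service authorization reference; the tracking server ARN is a hypothetical placeholder.

```python
import json

# Assumed action names; confirm against the current AWS documentation.
READ_ACTIONS = [
    "sagemaker-mlflow:GetExperiment",
    "sagemaker-mlflow:SearchExperiments",
    "sagemaker-mlflow:GetRun",
    "sagemaker-mlflow:SearchRuns",
]
WRITE_ACTIONS = [
    "sagemaker-mlflow:CreateExperiment",
    "sagemaker-mlflow:CreateRun",
    "sagemaker-mlflow:UpdateRun",
    "sagemaker-mlflow:LogMetric",
    "sagemaker-mlflow:LogParam",
    "sagemaker-mlflow:LogBatch",
]


def build_mlflow_policy(tracking_server_arn, read_only):
    """Return an IAM policy document scoped to a single tracking server."""
    actions = list(READ_ACTIONS) if read_only else READ_ACTIONS + WRITE_ACTIONS
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": actions, "Resource": tracking_server_arn}
        ],
    }


# Hypothetical tracking server ARN.
server_arn = "arn:aws:sagemaker:us-east-1:123456789012:mlflow-tracking-server/genai-experiments"
dev_policy = build_mlflow_policy(server_arn, read_only=False)
prod_policy = build_mlflow_policy(server_arn, read_only=True)
print(json.dumps(prod_policy, indent=2))
```

Each document can then be attached to the matching role, so production principals cannot alter experiment history.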

- Experiment Tracking and Hyperparameter Optimization

- Use the MLflow Tracking APIs to log experiment runs, parameters, and model metrics.

- Use Amazon SageMaker hyperparameter tuning jobs with MLflow to optimize and track your hyperparameters automatically.

- Performance tip: For LLMs, track more than accuracy metrics. Logging inference latency, token throughput, and memory utilization during experimentation helps you identify production bottlenecks sooner rather than later.
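The performance tip above can be sketched as follows. The example assumes the `mlflow` package (plus, as we understand it, the `sagemaker-mlflow` plugin for ARN-based tracking URIs) is installed; the helper names and sample numbers are hypothetical.

```python
def summarize_generation(num_tokens, elapsed_seconds):
    """Derive latency and token throughput for one generation call."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return {
        "latency_seconds": elapsed_seconds,
        "tokens_per_second": num_tokens / elapsed_seconds,
    }


def log_generation_run(tracking_uri, params, metrics):
    """Log one run to MLflow; needs mlflow and access to the tracking server."""
    import mlflow  # lazy import so the metric helper works without mlflow

    # For the managed service, the URI is the tracking server ARN.
    mlflow.set_tracking_uri(tracking_uri)
    with mlflow.start_run():
        mlflow.log_params(params)
        mlflow.log_metrics(metrics)


# Hypothetical measurement: 200 tokens generated in 4 seconds.
metrics = summarize_generation(num_tokens=200, elapsed_seconds=4.0)
print(metrics)
```

A call such as `log_generation_run(server_arn, {"temperature": 0.2}, metrics)` would record these values alongside the run, where they can be compared across model variants.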

- Automated Deployment with MLflow

- Using the MLflow CLI or Python API, you can deploy your registered models as endpoints in Amazon SageMaker.

- MLflow handles the complexity of containerization through the mlflow.sagemaker module, which keeps deployment to Amazon SageMaker approachable even for newcomers.

- Pitfall to avoid: Always validate the deployment by testing it with representative input data before moving to production. This catches serialization and inference issues early.
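A deployment followed by a smoke test could be sketched like this, using MLflow's deployments client with the `sagemaker` target. The role ARN, bucket, region, and instance type are hypothetical placeholders, and the config shows only a subset of the supported keys.

```python
def build_sagemaker_deploy_config(role_arn, bucket, region, instance_type="ml.m5.xlarge"):
    """Assemble a config dict for MLflow's SageMaker deployment target."""
    return {
        "execution_role_arn": role_arn,
        "bucket_name": bucket,
        "region_name": region,
        "instance_type": instance_type,
        "instance_count": 1,
        "synchronous": True,  # wait until the endpoint is ready
    }


def deploy_and_smoke_test(name, model_uri, config, sample_inputs):
    """Deploy a registered model and send one representative request."""
    from mlflow.deployments import get_deploy_client  # needs mlflow and boto3

    client = get_deploy_client("sagemaker")
    client.create_deployment(name=name, model_uri=model_uri, config=config)
    # A failure here points to serialization or inference problems.
    return client.predict(name, sample_inputs)


# Hypothetical values for illustration only.
config = build_sagemaker_deploy_config(
    role_arn="arn:aws:iam::123456789012:role/ExampleSageMakerRole",
    bucket="example-mlflow-artifacts",
    region="us-east-1",
)
print(config["instance_type"])
```

Running `deploy_and_smoke_test` with a small batch of realistic prompts before routing production traffic is one way to act on the pitfall described above.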

- Observability and Monitoring

- MLflow’s tracing capabilities let you trace latency, token usage, and evaluation metrics for generative models.

- To build an observability layer, set up dashboards and alerts for model monitoring in production.

- To keep costs under control, set up Amazon CloudWatch alarms for unusual spikes in inference or token costs and for latency degradation.

Best Practices and Considerations for Managed MLflow with Amazon SageMaker
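As one concrete monitoring consideration, the latency alarm suggested in the observability step could be sketched as follows. The endpoint name and threshold are hypothetical; the `ModelLatency` metric in the `AWS/SageMaker` namespace is reported in microseconds, so the helper converts from milliseconds.

```python
def build_latency_alarm(endpoint_name, threshold_ms, variant_name="AllTraffic"):
    """Build CloudWatch PutMetricAlarm arguments for endpoint model latency."""
    return {
        "AlarmName": f"{endpoint_name}-high-latency",
        "Namespace": "AWS/SageMaker",
        "MetricName": "ModelLatency",
        "Dimensions": [
            {"Name": "EndpointName", "Value": endpoint_name},
            {"Name": "VariantName", "Value": variant_name},
        ],
        "Statistic": "Average",
        "Period": 300,            # evaluate in 5-minute windows
        "EvaluationPeriods": 3,   # alarm after 3 consecutive breaches
        "Threshold": threshold_ms * 1000,  # milliseconds to microseconds
        "ComparisonOperator": "GreaterThanThreshold",
    }


def create_alarm(alarm):
    """Create or update the alarm; needs boto3 and AWS credentials."""
    import boto3  # lazy import keeps the builder usable offline

    boto3.client("cloudwatch").put_metric_alarm(**alarm)


# Hypothetical endpoint and a 2-second latency threshold.
alarm = build_latency_alarm("genai-endpoint", threshold_ms=2000)
print(alarm["AlarmName"])
```

Token cost spikes are not a built-in endpoint metric, so tracking them this way would require publishing a custom metric first.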

Best Practices

- Scalability: Store all experiment data and artifacts in Amazon S3 so that storage scales with model development. For teams developing several LLMs, organize artifacts with a naming convention based on model family and version number.

- Security: Regularly audit AWS IAM roles and permissions to ensure compliance and data protection. Use Amazon VPC endpoints to keep traffic on the AWS network when working with private data.

- Automation: Leverage Amazon SageMaker Pipelines and CI/CD integrations to automate training, evaluation, and deployment. Create standard protocols for evaluating generative models to ensure consistent quality assessments.

- Reproducibility: Use a model registry and Git integration to version control code and experiments, assigning business-oriented tags to versions that clarify approval governance and business context.

- Cost Management: Implement auto-shutdown policies for development environments and right-size inference endpoints for actual traffic patterns. Monitor training and inference costs separately and set a budget for each.
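The naming and tagging practices above could be sketched as follows. The naming scheme, tag keys, and sample values are hypothetical conventions chosen for illustration, not something MLflow prescribes.

```python
def registered_model_name(family, task):
    """Build a consistent registry name from model family and task."""
    return f"{family}-{task}".lower().replace(" ", "-")


def governance_tags(approved_by, business_unit, status):
    """Business-oriented tags that record approval and context."""
    return {
        "approved_by": approved_by,
        "business_unit": business_unit,
        "approval_status": status,
    }


def tag_model_version(name, version, tags):
    """Attach tags to a registered model version; needs mlflow and a tracking server."""
    from mlflow.tracking import MlflowClient  # lazy import

    client = MlflowClient()
    for key, value in tags.items():
        client.set_model_version_tag(name=name, version=version, key=key, value=value)


# Hypothetical model family, task, and approval details.
name = registered_model_name("Llama Support", "summarization")
tags = governance_tags("ml-review-board", "customer-care", "approved")
print(name, tags)
```

With the tracking URI set, `tag_model_version(name, "3", tags)` would record the approval context on version 3 of that model.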

Current Limitations and Workarounds

While Amazon SageMaker’s managed MLflow streamlines and accelerates generative AI workflows, users should be mindful of the following limitations:

- OCI Manifest Support: Amazon SageMaker does not currently support OCI (Open Container Initiative) manifests for Docker containers, which may restrict some container deployment scenarios. Workaround: Use ECR-compatible Docker image formats rather than multi-architecture images.

- Service-specific limits: Service quotas can limit the scale of your workflows. Workaround: Request quota increases ahead of time for large-scale deployments.

- Customization: If your workflow requires custom pre-installed libraries or secret management, additional setup and testing may be necessary. Workaround: Use Amazon SageMaker script mode with lifecycle configurations to install the dependencies when your instance starts.

- Open-Source vs. Managed Differences: The managed version of MLflow may lag behind the faster-moving open-source version. Workaround: Check the AWS documentation for the currently supported MLflow version and confirm feature support for critical dependencies before you plan around them.

Despite these limitations, the managed service should meet the needs of most generative AI development and deployment workloads.
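For the version-lag limitation, a quick compatibility check between the installed MLflow client and the managed server might look like this. The major-version rule is a simplifying assumption of ours, and the `MlflowVersion` response field is stated as we understand the DescribeMlflowTrackingServer API; verify it against the current SDK documentation.

```python
from importlib import metadata


def major_version(version_string):
    """Extract the major version number from a string such as '3.0.1'."""
    return int(version_string.split(".")[0])


def client_matches_server(client_version, server_version):
    """Treat client and server as compatible when major versions agree."""
    return major_version(client_version) == major_version(server_version)


def installed_mlflow_version():
    """Version of the locally installed mlflow package."""
    return metadata.version("mlflow")


def managed_server_version(tracking_server_name):
    """Version reported by the managed server; needs boto3 and AWS credentials."""
    import boto3  # lazy import

    response = boto3.client("sagemaker").describe_mlflow_tracking_server(
        TrackingServerName=tracking_server_name
    )
    return response["MlflowVersion"]  # assumed response field


print(client_matches_server("3.0.1", "3.0"))
print(client_matches_server("2.16.2", "3.0"))
```

Running such a check in CI before training jobs start is one way to catch a client and server mismatch early.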

Conclusion

MLflow 3.0 combined with Amazon SageMaker AI provides a powerful, fully managed platform for accelerating generative AI development. With robust experiment tracking, automated deployment, and observability, teams can concentrate on innovating rather than worrying about infrastructure. As generative AI models proliferate and become more complex and mission-critical, managed MLflow on Amazon SageMaker will become increasingly important for improving development speed, governance, and time-to-market.

FAQs

1. What are the primary advantages of using managed MLflow 3.0 on Amazon SageMaker with respect to generative AI projects?

ANS: – Managed MLflow 3.0 on Amazon SageMaker allows teams to develop and productionize generative AI faster and more reliably by offering observability, automated deployment, model versioning, and simplified experiment tracking. Compared with self-hosted MLflow, the managed service removes infrastructure maintenance, provides enterprise-grade security and scalability from the start, and integrates directly with native AWS services.

2. Can I use MLflow models with Amazon SageMaker to deploy custom container images?

ANS: – Yes, but with restrictions. Standard MLflow model deployment workflows are fully supported. However, because Amazon SageMaker does not support OCI manifests (as of this writing), some custom container deployments may not work, depending on the MLflow version. For complex dependencies, consider building compatible images with Amazon SageMaker’s container building tools and referencing them in your MLflow deployment configuration.

3. How does one track and then compare generative AI experiment runs?

ANS: – While running generative AI experiments, record the parameters, metrics, and artifacts through MLflow’s experiment tracking user interface and APIs. Several models can then be compared side by side on performance, and the best ones deployed. For generative AI, you can also use MLflow’s GenAI evaluation metrics to track hallucination rate, semantic similarity, and other quality measures beyond standard accuracy metrics.
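Comparing runs programmatically could be sketched as follows. The experiment name, metric name, and sample scores are hypothetical; `fetch_top_runs` assumes the `mlflow` package and a configured tracking URI.

```python
def rank_runs(runs, metric, higher_is_better=True):
    """Order run records by a metric, skipping runs that did not log it."""
    scored = [run for run in runs if metric in run["metrics"]]
    return sorted(
        scored, key=lambda run: run["metrics"][metric], reverse=higher_is_better
    )


def fetch_top_runs(experiment_name, metric, max_results=5):
    """Query MLflow for the best runs of an experiment; returns a pandas DataFrame."""
    import mlflow  # lazy import so rank_runs works without mlflow

    return mlflow.search_runs(
        experiment_names=[experiment_name],
        order_by=[f"metrics.{metric} DESC"],
        max_results=max_results,
    )


# Hypothetical runs with a semantic similarity score.
sample_runs = [
    {"run_id": "a", "metrics": {"semantic_similarity": 0.81}},
    {"run_id": "b", "metrics": {"semantic_similarity": 0.88}},
    {"run_id": "c", "metrics": {}},
]
best = rank_runs(sample_runs, "semantic_similarity")
print([run["run_id"] for run in best])
```

For metrics where lower is better, such as hallucination rate, pass `higher_is_better=False` to `rank_runs`, or use `ASC` in the `order_by` clause when querying MLflow directly.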

WRITTEN BY Nekkanti Bindu

Nekkanti Bindu works as a Research Associate at CloudThat, where she channels her passion for cloud computing into meaningful work every day. Fascinated by the endless possibilities of the cloud, Bindu has established herself as an AWS consultant, helping organizations harness the full potential of AWS technologies. A firm believer in continuous learning, she stays at the forefront of industry trends and evolving cloud innovations. With a strong commitment to making a lasting impact, Bindu is driven to empower businesses to thrive in a cloud-first world.

August 18, 2025
