
Artificial Intelligence (AI) assistants such as ChatGPT, Bard, DeepSeek, and Microsoft Copilot are becoming integral to workplace productivity. They help employees generate content, automate workflows, and analyze large datasets quickly. But alongside these benefits comes a growing challenge: sensitive data exposure. Confidential details can unintentionally leak through prompts, responses, or stored conversations.

This is where Data Security Posture Management (DSPM) for AI in Microsoft Purview becomes critical. It provides a structured framework to discover, secure, govern, and respond to AI-related data risks, ensuring that innovation happens without compromising compliance or trust.

Understanding DSPM for AI in Microsoft Purview

Unlike standalone tools, DSPM for AI is a built-in framework within Microsoft Purview that strengthens AI security by:

- Discovering AI usage across enterprise systems and monitoring employee interactions with AI tools.

- Securing sensitive content before it is ingested or shared with AI models.

- Governing AI-generated outputs to meet compliance requirements.

- Assessing and mitigating risks dynamically, based on real-time AI activity.

Applied to activity in tools such as Microsoft Copilot, ChatGPT, Bard, or DeepSeek, DSPM for AI helps extend enterprise-grade data protection to AI interactions without stifling productivity.

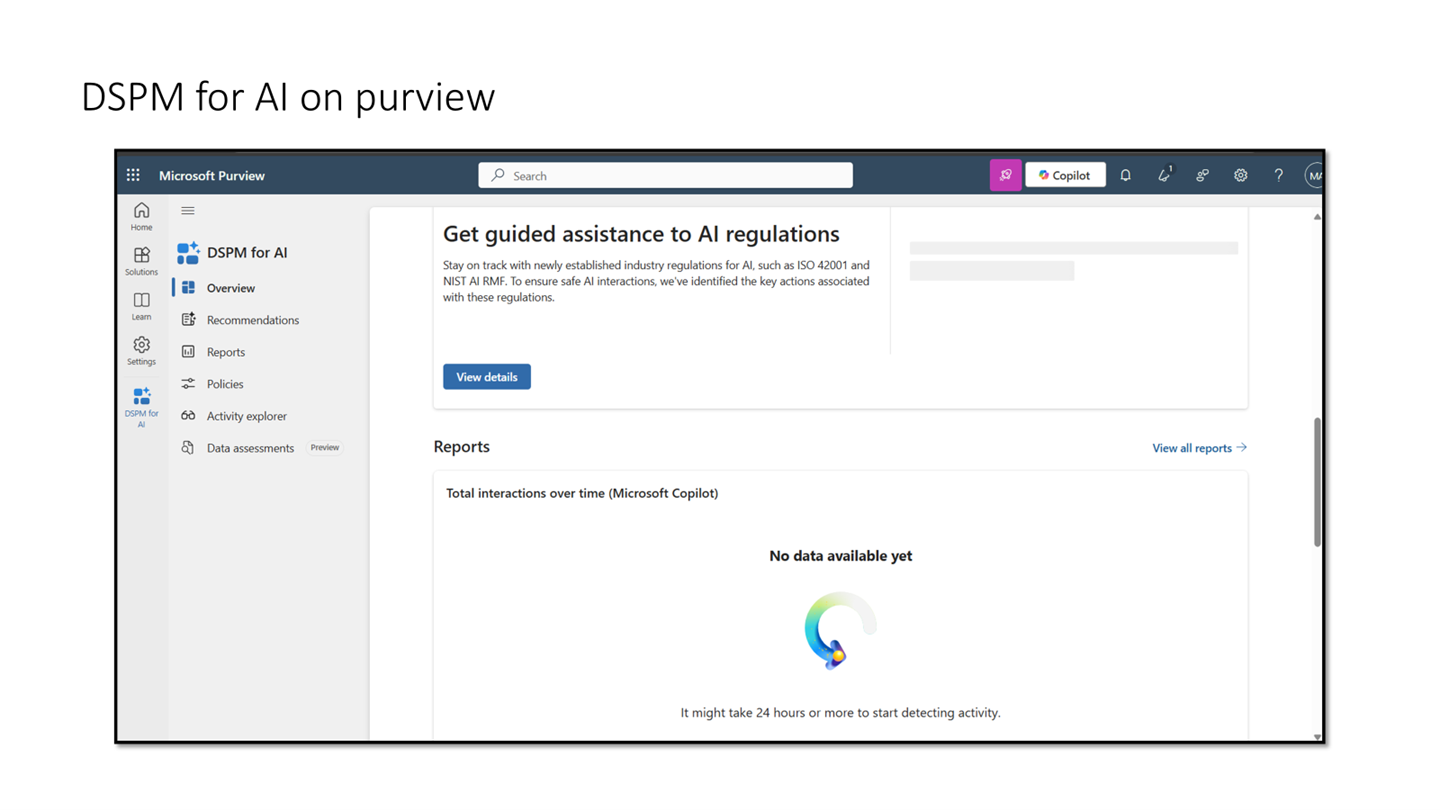

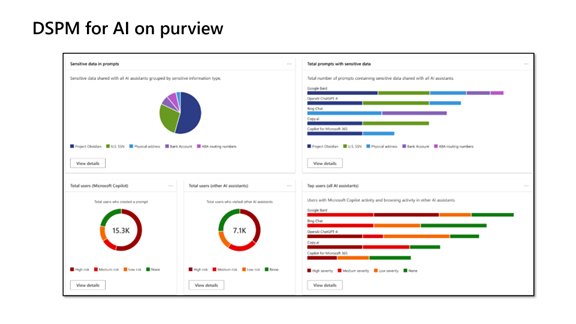

Figure 1: Microsoft Purview DSPM for AI dashboard showing regulatory guidance and activity reports.

A Real-World Use Case

Scenario: FinSecure Inc., a financial services firm, noticed employees using ChatGPT, Bard, and DeepSeek to prepare investment reports. Some prompts included sensitive details such as client account numbers and internal risk data.

How DSPM for AI addresses the challenge:

- Discovering AI Activity: DSPM draws on activity from devices onboarded to Microsoft Purview, including browser activity, to detect when AI tools are accessed. Purview Audit records Copilot interactions, which surfaces queries containing sensitive data.

- Securing Data Exposure: Sensitivity labels configured with encryption protect documents before they are uploaded, and Endpoint DLP prevents employees from pasting confidential text into AI interfaces.

- Governing AI-Generated Content: Retention policies archive generated reports for compliance audits, while Communication Compliance flags summaries with regulated terms.

- Responding to Risks: DSPM analytics reveal oversharing patterns, and Insider Risk Management, through Adaptive Protection, applies stricter policies to users who attempt to bypass restrictions.
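To make the "securing" step more concrete, here is a simplified Python sketch of the kind of check a DLP control performs before a prompt leaves the endpoint. Purview's sensitive information types pair patterns with validation such as checksums and supporting keywords; in this sketch a Luhn checksum validates card-style account numbers and a short keyword list stands in for account-number evidence. The pattern, the keyword list, and the function names (`find_sensitive`, `should_block`) are hypothetical illustrations, not Purview's detection logic or API.

```python
import re

# Hypothetical card-style number pattern: 13 to 19 digits, optionally
# separated by spaces or dashes. Not Purview's actual definition.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){13,19}\b")

# Hypothetical keywords standing in for "account number" evidence.
ACCOUNT_KEYWORDS = ("account number", "acct no", "iban")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_sensitive(prompt: str) -> list[str]:
    """Return labels for the kinds of sensitive data found in a prompt."""
    findings = []
    for match in CARD_CANDIDATE.finditer(prompt):
        digits = re.sub(r"\D", "", match.group())  # drop spaces and dashes
        if luhn_valid(digits):
            findings.append("card_number")
    lowered = prompt.lower()
    if any(keyword in lowered for keyword in ACCOUNT_KEYWORDS):
        findings.append("account_keyword")
    return findings


def should_block(prompt: str) -> bool:
    """Block the paste or submission if anything sensitive was found."""
    return bool(find_sensitive(prompt))
```

For example, `should_block("Summarize risk for card 4111 1111 1111 1111")` returns `True`, while a prompt with no matches returns `False`. The checksum matters: a random 16-digit string that fails Luhn is not reported, which reduces false positives compared with a pattern alone.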

Outcome: With DSPM for AI, FinSecure enables safe AI adoption while avoiding compliance penalties and protecting customer trust.

Best Practices for Implementing DSPM for AI

Classify Before You Share: Apply sensitivity labels to critical files before enabling AI access.
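One way to picture "classify before you share" is a gate that only exposes files at or below a chosen sensitivity to AI tools and holds back anything unlabeled. The Python sketch below assumes a hypothetical ordering of label names similar to common Purview defaults and a hypothetical threshold (`MAX_RANK_FOR_AI`); in Purview itself, labels carry a configurable priority order and enforcement happens through policies, not code like this.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical ordering from least to most sensitive. The names echo common
# Purview default labels; the ranks and the threshold are assumptions.
LABEL_RANK = {"Public": 0, "General": 1, "Confidential": 2, "Highly Confidential": 3}
MAX_RANK_FOR_AI = LABEL_RANK["General"]


@dataclass
class Document:
    name: str
    label: Optional[str]  # None means the file has not been classified yet


def ai_access_allowed(doc: Document) -> bool:
    """Allow AI access only to labeled files at or below the threshold."""
    if doc.label not in LABEL_RANK:  # unlabeled or unknown label: hold back
        return False
    return LABEL_RANK[doc.label] <= MAX_RANK_FOR_AI


docs = [
    Document("press-release.docx", "Public"),
    Document("q3-risk-model.xlsx", "Highly Confidential"),
    Document("meeting-notes.docx", None),
]
allowed = [d.name for d in docs if ai_access_allowed(d)]
print(allowed)
```

With the sample list, only `press-release.docx` passes the gate; the unlabeled notes file stays blocked until someone classifies it, which is the point of labeling before enabling AI access.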

Educate Employees: Make staff aware of what data can and cannot be entered into AI prompts.

Audit AI Tool Usage Regularly: Use Purview Audit logs to identify patterns and anomalies.
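As a rough illustration of spotting anomalies in exported audit data, the Python sketch below counts AI interactions per user and flags anyone whose count sits more than `k` population standard deviations above the mean. The record shape and the `ai_prompt` operation name are hypothetical; real Purview Audit exports contain many more fields, and a z-score is only a first-pass heuristic.

```python
from collections import Counter
from statistics import mean, pstdev


def flag_heavy_users(records, operation="ai_prompt", k=2.0):
    """Return users whose count of `operation` events is more than k
    population standard deviations above the mean across users."""
    counts = Counter(r["user"] for r in records if r["operation"] == operation)
    values = list(counts.values())
    if len(values) < 2:
        return []  # nothing to compare against
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return []  # every user has the same count
    return sorted(user for user, c in counts.items() if (c - mu) / sigma > k)


# Hypothetical, simplified audit records (field names are assumptions).
records = (
    [{"user": u, "operation": "ai_prompt"}
     for u in ("ana", "ben", "chen", "dev", "eli") for _ in range(3)]
    + [{"user": "fay", "operation": "ai_prompt"} for _ in range(30)]
    + [{"user": "ana", "operation": "file_open"}]
)
print(flag_heavy_users(records))
```

One design caveat: with n users, the largest z-score any single user can reach under the population standard deviation is sqrt(n - 1), so `k=2.0` can only flag someone when at least six users are present. Smaller teams need a lower threshold or a different method.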

Integrate with Insider Risk Management: Prevent intentional misuse while maintaining user trust.
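Adaptive Protection connects Insider Risk Management to DLP by assigning users an insider risk level (elevated, moderate, or minor) so that stricter controls apply only to riskier users. The Python sketch below expresses that idea as a lookup table; the enum names, the baseline behavior, and the action chosen for each level are hypothetical choices, not Purview defaults or settings.

```python
from enum import Enum


class RiskLevel(Enum):
    NONE = 0
    MINOR = 1
    MODERATE = 2
    ELEVATED = 3


class Action(Enum):
    ALLOW = "allow"
    WARN = "warn"
    BLOCK_WITH_OVERRIDE = "block_with_override"
    BLOCK = "block"


# Hypothetical policy: stricter actions for higher insider risk levels.
POLICY = {
    RiskLevel.NONE: Action.WARN,
    RiskLevel.MINOR: Action.WARN,
    RiskLevel.MODERATE: Action.BLOCK_WITH_OVERRIDE,
    RiskLevel.ELEVATED: Action.BLOCK,
}


def decide(risk: RiskLevel, prompt_has_sensitive_data: bool) -> Action:
    """Choose an action for a paste into an AI tool."""
    if not prompt_has_sensitive_data:
        return Action.ALLOW  # nothing sensitive, no reason to intervene
    return POLICY[risk]


print(decide(RiskLevel.ELEVATED, True).value)
```

Keeping low-risk users at a warning rather than a hard block is what lets this kind of policy curb intentional misuse without eroding trust for everyone else.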

Conclusion

AI assistants are here to stay, but so are compliance and data protection obligations. DSPM for AI in Microsoft Purview helps organizations benefit from AI’s capabilities without exposing sensitive information.

By adopting proactive strategies, businesses can strike the right balance between innovation and security, safeguarding both compliance requirements and customer trust.

Relevant Microsoft Learning Resources

Protect data in AI environments with Microsoft Purview – SC-401: https://learn.microsoft.com/en-us/training/modules/protect-data-ai-environments-purview/

Microsoft Purview Information Protection: https://learn.microsoft.com/en-us/microsoft-365/compliance/information-protection?view=o365-worldwide

About CloudThat

CloudThat is an award-winning company and the first in India to offer cloud training and consulting services worldwide. As a Microsoft Solutions Partner, AWS Advanced Tier Training Partner, and Google Cloud Platform Partner, CloudThat has empowered over 850,000 professionals through 600+ cloud certifications, winning global recognition for its training excellence, including 20 MCT Trainers in Microsoft’s Global Top 100 and an impressive 12 awards in the last 8 years. CloudThat specializes in Cloud Migration, Data Platforms, DevOps, IoT, and cutting-edge technologies like Gen AI & AI/ML. It has delivered over 500 consulting projects for 250+ organizations in 30+ countries as it continues to empower professionals and enterprises to thrive in the digital-first world.

WRITTEN BY Rajesh KVN

Dr. K. V. N. Rajesh is a Microsoft Certified Trainer & Senior Subject Matter Expert at CloudThat (Microsoft Gold Partner), specializing in Azure cloud security and AI. With over 20 years of experience in training, research, and development, he has trained thousands globally on Microsoft certifications and best practices. Known for simplifying complex security concepts and practical, hands‑on guidance, Dr. Rajesh brings deep technical insight. His passion for mentoring and writing fuels every learning journey. He is a Microsoft global award winner.

September 12, 2025