
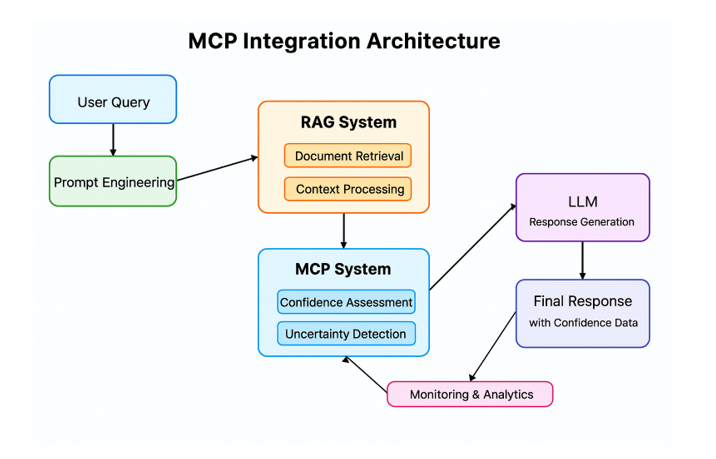

The rise of Large Language Models (LLMs) has been revolutionary, bringing remarkable capabilities in natural language understanding and generation. Yet, two persistent challenges remain: ensuring access to current, relevant knowledge, and providing transparency into the reliability of outputs. The MCP Integration Architecture addresses these issues by combining Retrieval Augmented Generation (RAG) with confidence assessment mechanisms. The result is more accurate, context-rich, and trustworthy AI interaction.


The Challenge: Context, Accuracy, and Trust

LLMs are trained on vast amounts of data, but that knowledge can be outdated or lack domain-specific detail. This leads to “hallucinations” (plausible-sounding but factually incorrect outputs) and gives users no indication of how certain a response is. In critical fields such as finance, healthcare, and governance, blind trust is risky.

The MCP Integration Architecture directly tackles these issues by grounding responses in external knowledge and embedding a confidence layer that quantifies reliability.

Breaking Down the MCP Integration Architecture

The architecture follows a step-by-step flow, transforming a simple query into a validated response enriched with confidence data.

1. User Query

The pipeline starts with the user’s natural language query.

2. Prompt Engineering

Before it is passed to the retrieval and generation components, the query is refined. This involves:

– Clarification: Rewording ambiguous inputs.

– Contextualization: Adding relevant background details.

– Guidance: Specifying format or style of output.

– Keyword Extraction & Expansion: Broadening retrieval scope.

Effective prompt engineering ensures both the RAG system and LLM get actionable input.
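The refinement steps above can be sketched in a few lines of Python. This is a minimal illustration, not the architecture's actual implementation: the stop-word list, the synonym table, and the names `refine_query` and `RefinedQuery` are all assumptions made for this example. It covers contextualization, guidance, and keyword extraction and expansion. Clarification of ambiguous inputs would typically need a model or a human in the loop and is left out.

```python
import re
from dataclasses import dataclass

# Hypothetical stop-word list and synonym table, used only for illustration.
STOP_WORDS = {"a", "an", "the", "is", "are", "what", "how", "of", "for", "in", "to"}
SYNONYMS = {"refund": ["reimbursement"], "policy": ["rules"]}


@dataclass
class RefinedQuery:
    original: str
    keywords: list[str]
    expanded_terms: list[str]
    prompt: str


def extract_keywords(query: str) -> list[str]:
    """Lowercase the query and keep unique non-stop-words in their original order."""
    keywords: list[str] = []
    for word in re.findall(r"[a-z0-9]+", query.lower()):
        if word not in STOP_WORDS and word not in keywords:
            keywords.append(word)
    return keywords


def refine_query(query: str, background: str, output_format: str) -> RefinedQuery:
    """Apply contextualization, guidance, and keyword expansion to a raw query."""
    keywords = extract_keywords(query)
    # Expansion: add known synonyms so retrieval can match more documents.
    expanded = [syn for kw in keywords for syn in SYNONYMS.get(kw, [])]
    prompt = (
        f"Background: {background}\n"      # contextualization
        f"Question: {query.strip()}\n"
        f"Answer format: {output_format}"  # guidance
    )
    return RefinedQuery(query, keywords, expanded, prompt)


refined = refine_query(
    "What is the refund policy?",
    background="Customer support for an online store.",
    output_format="Two short sentences.",
)
print(refined.keywords)
print(refined.expanded_terms)
```

The keywords and expanded terms would feed the retrieval step, while the assembled prompt text would go to the LLM.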

3. Retrieval Augmented Generation (RAG)

The refined query flows into the RAG System, which enhances context:

– Document Retrieval: Searches external databases, wikis, or knowledge bases for relevant snippets.

– Context Processing: Summarizes and ranks retrieved data, producing concise, relevant context for the LLM.

This step grounds answers in up-to-date, factual information, reducing hallucinations.
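As a rough sketch of document retrieval and context processing, the example below ranks a tiny in-memory corpus against a query and concatenates the best matches. It assumes bag-of-words cosine similarity as a stand-in for the embedding-based search a production RAG system would more likely use. The corpus, `retrieve`, and `build_context` are hypothetical names for this illustration.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[tuple[float, str]]:
    """Return up to top_k (score, document) pairs with non-zero similarity, best first."""
    q_vec = Counter(tokenize(query))
    scored = [(cosine_similarity(q_vec, Counter(tokenize(d))), d) for d in documents]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


def build_context(hits: list[tuple[float, str]], max_chars: int = 300) -> str:
    """Join ranked snippets in order, stopping before the character budget is exceeded."""
    parts: list[str] = []
    used = 0
    for _, doc in hits:
        if used + len(doc) > max_chars:
            break
        parts.append(doc)
        used += len(doc)
    return "\n".join(parts)


# Hypothetical knowledge base.
DOCS = [
    "A refund is issued within 14 days of a return request.",
    "Shipping takes 3 to 5 business days.",
    "The refund policy covers unused items only.",
]

hits = retrieve("refund policy", DOCS)
context = build_context(hits)
print(context)
```

The similarity scores returned alongside each snippet are worth keeping, since a downstream confidence check can reuse them.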

4. MCP Confidence Layer

The processed context then enters the MCP System, which validates reliability:

– Confidence Assessment: Uses semantic similarity, probabilistic checks, or LLM self-reflection to assign a confidence score.

– Uncertainty Detection: Identifies ambiguity or insufficient information and flags responses for review or additional retrieval.

This layer acts as a “quality guardian,” ensuring answers are not only generated but also evaluated for reliability.
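One simple way to picture the confidence layer is a function that turns retrieval similarity scores into a single score and a recommended action. The sketch below is an assumption for illustration only: the averaging rule, the two thresholds, and the action names are invented here and are not specified by the architecture. A real system might instead use probabilistic checks or LLM self-reflection, as noted above.

```python
from dataclasses import dataclass


@dataclass
class ConfidenceReport:
    score: float
    action: str  # "answer", "retrieve_more", or "human_review"


def assess_confidence(
    similarities: list[float],
    answer_threshold: float = 0.5,  # hypothetical cut-off
    retry_threshold: float = 0.2,   # hypothetical cut-off
) -> ConfidenceReport:
    """Average the top three similarity scores and map the result to an action."""
    if not similarities:
        # No supporting context at all: treat as insufficient information.
        return ConfidenceReport(0.0, "human_review")
    top = sorted(similarities, reverse=True)[:3]
    score = sum(top) / len(top)
    if score >= answer_threshold:
        action = "answer"
    elif score >= retry_threshold:
        action = "retrieve_more"
    else:
        action = "human_review"
    return ConfidenceReport(round(score, 3), action)


print(assess_confidence([0.9, 0.7, 0.8]))
print(assess_confidence([0.3]))
print(assess_confidence([]))
```

Keeping the thresholds as parameters lets each domain tune how cautious the layer should be. A healthcare deployment, for example, would likely set a higher bar than an internal FAQ bot.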

5. Response Generation (LLM)

Given the refined query and the enriched context, the Large Language Model generates a response that is grounded in the retrieved external data.
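To make the grounding step concrete, the sketch below assembles a prompt from the question and numbered context snippets, then hands it to a model. The model is passed in as a plain callable so the example stays runnable without any provider SDK. `echo_llm` is a placeholder, not a real API, and the instruction wording is an assumption.

```python
from typing import Callable


def build_grounded_prompt(question: str, snippets: list[str]) -> str:
    """Number each snippet so the model (and the reader) can refer back to sources."""
    numbered = "\n".join(f"[{i}] {s}" for i, s in enumerate(snippets, start=1))
    return (
        "Answer using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{numbered}\n\n"
        f"Question: {question}"
    )


def generate(question: str, snippets: list[str], llm: Callable[[str], str]) -> str:
    """Send the grounded prompt to whatever model callable is supplied."""
    return llm(build_grounded_prompt(question, snippets))


def echo_llm(prompt: str) -> str:
    # Placeholder standing in for a real model call.
    return f"(model output for a {len(prompt)}-character prompt)"


print(generate("What does the refund policy cover?",
               ["The refund policy covers unused items only."],
               echo_llm))
```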

6. Final Response with Confidence Data

The output is paired with confidence metrics. Users receive not only an answer but also insight into how reliable it is. This transparency is invaluable in enterprise and mission-critical use cases.
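A final response of this kind could be represented as a small structured payload. The field names, the review threshold, and the label boundaries in the sketch below are assumptions chosen for illustration. The point is only that the answer, its confidence score, and its sources travel together.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class FinalResponse:
    answer: str
    confidence: float   # expected range: 0.0 to 1.0
    sources: list[str]
    needs_review: bool


def confidence_label(confidence: float) -> str:
    """Map a numeric score to a label users can read at a glance (hypothetical bands)."""
    if confidence >= 0.8:
        return "high"
    if confidence >= 0.5:
        return "medium"
    return "low"


def package_response(
    answer: str,
    confidence: float,
    sources: list[str],
    review_threshold: float = 0.5,  # hypothetical cut-off for human review
) -> FinalResponse:
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0.0 and 1.0")
    return FinalResponse(answer, confidence, list(sources), confidence < review_threshold)


response = package_response("Unused items can be refunded.", 0.82, ["policy.md"])
payload = json.dumps(asdict(response), indent=2)
print(payload)
print(confidence_label(response.confidence))
```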

7. Monitoring & Analytics

Finally, a feedback loop tracks performance, user satisfaction, and confidence patterns. This enables:

– Continuous refinement of prompt engineering.

– Improved retrieval mechanisms.

– Domain-specific LLM fine-tuning.

– Ongoing performance optimization.
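The feedback loop can start as a simple aggregation over logged interactions. In the sketch below, the `Interaction` fields, the 0.8 cut-off for "overconfident", and the sample log are all hypothetical. The idea is to show how confidence patterns and user satisfaction might be summarized side by side.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Interaction:
    confidence: float
    flagged: bool     # sent for review or additional retrieval
    thumbs_up: bool   # user satisfaction signal


def summarize(log: list[Interaction]) -> dict:
    """Compute basic health metrics for a batch of logged interactions."""
    if not log:
        return {"count": 0}
    n = len(log)
    return {
        "count": n,
        "mean_confidence": round(mean([i.confidence for i in log]), 3),
        "flag_rate": sum(i.flagged for i in log) / n,
        "satisfaction_rate": sum(i.thumbs_up for i in log) / n,
        # High confidence paired with negative feedback suggests miscalibration.
        "overconfident": sum(1 for i in log if i.confidence >= 0.8 and not i.thumbs_up),
    }


log = [
    Interaction(0.9, False, True),
    Interaction(0.4, True, False),
    Interaction(0.8, False, False),
    Interaction(0.7, False, True),
]
print(summarize(log))
```

A rising count of overconfident interactions would be one signal that the retrieval step or the confidence thresholds need attention.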

Visualizing the MCP Integration Architecture

This diagram illustrates the flow from User Query → Prompt Engineering → RAG → MCP Confidence → LLM → Final Response.

Why This Matters

The MCP Integration Architecture offers clear benefits:

– Increased Accuracy: Reduces hallucinations with factual grounding.

– Transparency & Trust: Confidence metrics empower informed decisions.

– Contextual Richness: Enriched by external, domain-specific knowledge.

– Domain Relevance: Effective in niche sectors with specialized data.

– Actionable Safeguards: Flags uncertainty for human review.

– Continuous Learning: Built-in monitoring drives iterative improvement.

Real-World Impact

Industries can leverage the MCP Integration Architecture in transformative ways:

– Healthcare: Generate patient reports with confidence scores and reliable medical references.

– Finance: Produce market analysis backed by real-time datasets.

– Legal & Compliance: Deliver answers paired with confidence scores, so reviewers can see how reliable each answer is.

Learning Path with CloudThat

To master architectures like MCP Integration Architecture and tool-augmented AI, explore CloudThat’s specialized programs:

– Generative AI Solutions on Azure

– Azure AI Fundamental Certification Training

These courses offer hands-on labs to help you design scalable, trustworthy AI systems.

Conclusion

The MCP Integration Architecture represents a vital step forward in designing LLM-based systems that are confidence-aware, context-aware, and enterprise-ready. By combining Retrieval Augmented Generation with a confidence layer, it grounds responses in external knowledge and makes their reliability visible to users.

For professionals and enterprises, adopting this architecture paves the way for the next generation of responsible and intelligent AI solutions.



WRITTEN BY Abhishek Srivastava

Abhishek Srivastava is a Subject Matter Expert and Microsoft Certified Trainer (MCT), as well as a Google Cloud Authorized Instructor (GCI), with over 15 years of experience in academia and professional training. He has trained more than 7,000 participants worldwide and has been recognized among the Top 100 Global Microsoft Certified Trainers, receiving awards from Microsoft for his outstanding contributions. Abhishek is known for simplifying complex topics using practical examples and clear explanations. His areas of expertise include AI agents, Agentic AI, Generative AI, LangChain, Machine Learning, Deep Learning, NLP, Data Science, SQL, and cloud technologies such as Azure and Google Cloud. He also has hands-on experience with Snowflake, Python, and Image Processing. His in-depth technical knowledge has made him a sought-after trainer for clients in the USA, UK, Canada, Singapore, and Germany. In his free time, Abhishek enjoys exploring new technologies, sharing knowledge, and mentoring aspiring professionals.


September 12, 2025
