
Introduction

Cloud adoption has given enterprises unprecedented agility, global scale, and innovation potential. Yet managing costs on platforms like AWS remains a complex challenge. Industry estimates suggest that cloud waste can account for up to 30% of enterprise AWS bills, and traditional reporting tools often fail to surface inefficiencies in time. As organizations scale, controlling spend becomes less about reporting and more about balancing financial accountability with technical efficiency. This is where FinOps comes in – merging financial oversight with cloud operations to enable smarter, data-driven decisions. Increasingly, IT and finance leaders are asking whether Large Language Models (LLMs) can meaningfully optimize AWS costs.

The short answer: yes – when applied strategically alongside FinOps principles. LLMs won’t magically slash costs overnight, but they can analyze massive datasets, detect inefficiencies, and recommend smarter usage decisions. In practice, this enables enterprises to optimize spend at scale while aligning cloud usage with business objectives.

The FinOps Challenge on AWS

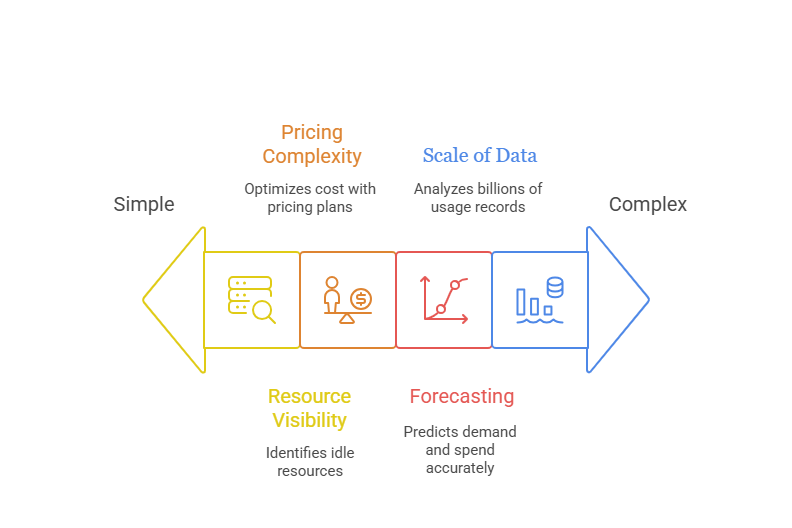

FinOps empowers teams to make data-driven decisions on cloud costs, yet AWS presents unique challenges:

- Resource Visibility: Thousands of accounts, services, and regions make it difficult to identify idle or underused resources.

- Pricing Complexity: Reserved Instances, Savings Plans, and Spot pricing can save substantial money – but selecting the optimal combination requires deep insight.

- Forecasting: Dynamic workloads, seasonal spikes, and product launches make predicting demand and spend highly uncertain.

- Scale of Data: AWS environments generate billions of cost and usage records across services every month, overwhelming traditional analysis tools.

Traditional rule-based optimization tools provide limited help, often missing context and nuance. LLMs offer a new dimension by interpreting complex datasets, recognizing patterns, and producing actionable insights that balance technical and financial objectives.

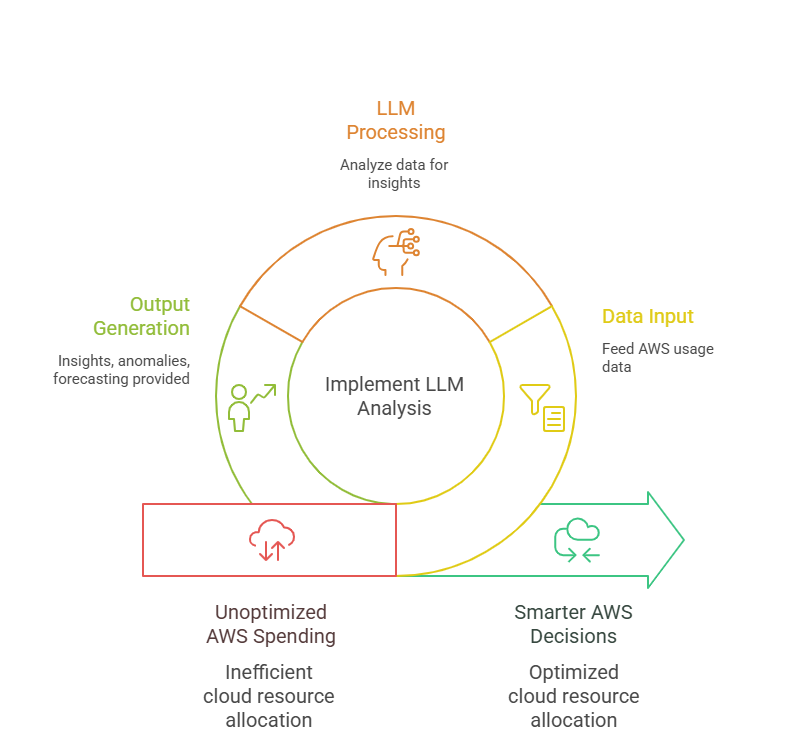

How LLMs Enhance FinOps

LLMs are not just text generators – they bridge raw data with decision-making intelligence. Applied in FinOps, they can:

- Enable Natural Language Queries: Teams can ask, for example, “Why did EC2 costs in us-east-1 spike last week?” The LLM translates the question into a structured query against cost and usage data, then summarizes the results in plain language, so no hand-written SQL or dashboard work is needed.

- Automated Anomaly Detection: LLMs can flag cost spikes caused by misconfigurations, such as idle EBS volumes, over-provisioned instances, or unnecessary cross-region transfers.

- Forecasting with Context: Combined with time-series models and external signals such as seasonal trends, product launches, or regional expansion, LLMs can support more accurate demand forecasts, which in turn inform Reserved Instance purchases and Spot usage.

- ChatOps-Style Cost Governance: LLM-powered assistants integrated with collaboration tools like Slack or Teams allow engineers and finance teams to query budgets, trigger optimizations, or validate scaling decisions seamlessly within their workflows.
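To make the natural-language query item more concrete, here is a minimal sketch of the step after the LLM has understood the question: turning the extracted intent into a request for the AWS Cost Explorer `GetCostAndUsage` API. The `intent` dictionary and the `build_cost_query` helper are hypothetical names chosen for illustration, and the service name string is an assumption about how EC2 compute appears in your billing data.

```python
from datetime import date, timedelta

# Hypothetical structured intent an LLM might extract from the question
# "Why did EC2 costs in us-east-1 spike last week?" (this schema is an assumption).
intent = {
    "service": "Amazon Elastic Compute Cloud - Compute",
    "region": "us-east-1",
    "days": 7,
}


def build_cost_query(intent, today):
    """Build keyword arguments for Cost Explorer's GetCostAndUsage call."""
    start = today - timedelta(days=intent["days"])
    return {
        # Cost Explorer treats Start as inclusive and End as exclusive.
        "TimePeriod": {"Start": start.isoformat(), "End": today.isoformat()},
        "Granularity": "DAILY",
        "Metrics": ["UnblendedCost"],
        "Filter": {
            "And": [
                {"Dimensions": {"Key": "SERVICE", "Values": [intent["service"]]}},
                {"Dimensions": {"Key": "REGION", "Values": [intent["region"]]}},
            ]
        },
        # Grouping by usage type helps show which line items drove the change.
        "GroupBy": [{"Type": "DIMENSION", "Key": "USAGE_TYPE"}],
    }


params = build_cost_query(intent, date(2025, 9, 24))

# With AWS credentials configured, the request could then be sent with boto3:
#   import boto3
#   response = boto3.client("ce").get_cost_and_usage(**params)
```

The daily results would then be handed back to the LLM to summarize. Keeping the query construction in ordinary code, rather than letting the model call AWS directly, makes the request easy to review and log.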

This interactivity democratizes FinOps insights, empowering cross-functional teams to collaborate effectively and make timely, informed decisions.
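The anomaly detection item can be approximated with a simple statistical pre-filter that runs before any LLM is involved. The sketch below is not an LLM technique: it is a plain trailing-window z-score check, with `flag_cost_spikes`, the 7-day window, and the threshold of 3 all chosen as illustrative assumptions. In a setup like the one described above, the flagged days would be passed to the LLM along with resource metadata so it can suggest a likely cause.

```python
from statistics import mean, stdev


def flag_cost_spikes(daily_costs, window=7, threshold=3.0):
    """Return indices of days whose cost sits more than `threshold`
    standard deviations above the mean of the preceding `window` days."""
    spikes = []
    for i in range(window, len(daily_costs)):
        history = daily_costs[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # Skip perfectly flat history to avoid dividing by zero.
        if sigma > 0 and (daily_costs[i] - mu) / sigma > threshold:
            spikes.append(i)
    return spikes


# Made-up daily spend figures in dollars, with one obvious spike on day index 7.
daily_costs = [100, 102, 98, 101, 99, 103, 100, 250, 101]
spikes = flag_cost_spikes(daily_costs)
print(spikes)
```

Note that a large spike inflates the variance of the following window, so this check is deliberately conservative for the days right after an anomaly.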

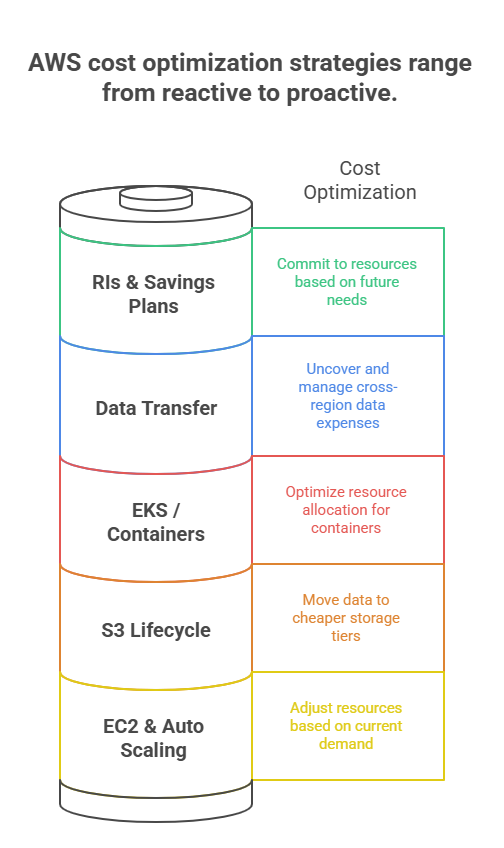

Practical Applications in AWS

LLMs, when combined with FinOps strategies, can unlock tangible cost savings across AWS services:

- EC2 & Auto Scaling: Right-size instances and optimize Auto Scaling policies based on predictive workload analysis.

- S3 Lifecycle Policies: Automate the transition of infrequently accessed data to lower-cost storage classes, such as the S3 Glacier classes.

- EKS & Container Workloads: Reduce over-provisioning in Kubernetes clusters by analyzing resource utilization and optimizing deployments.

- Data Transfer Costs: Identify hidden charges from cross-region or cross-AZ transfers.

- Reserved Instance & Savings Plan Optimization: Tailor purchases using predictive modeling of future workloads and usage patterns.
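As an illustration of the S3 lifecycle item, the sketch below builds a lifecycle configuration that moves objects under a prefix to the `GLACIER` storage class after a given number of days. The helper name `glacier_lifecycle_rule`, the `logs/` prefix, the 90-day cutoff, and the bucket name in the comment are all placeholders; an LLM-assisted workflow might propose these values from access patterns, but a human should review them before they are applied.

```python
def glacier_lifecycle_rule(prefix, days):
    """Build an S3 lifecycle configuration that archives objects under
    `prefix` to the GLACIER storage class once they are `days` old."""
    return {
        "Rules": [
            {
                "ID": f"archive-{prefix.strip('/')}-after-{days}d",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [{"Days": days, "StorageClass": "GLACIER"}],
            }
        ]
    }


lifecycle = glacier_lifecycle_rule("logs/", 90)

# With AWS credentials configured, the rule could be applied with boto3
# (the bucket name here is a placeholder):
#   import boto3
#   boto3.client("s3").put_bucket_lifecycle_configuration(
#       Bucket="example-bucket", LifecycleConfiguration=lifecycle
#   )
```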

Illustrative Example: One enterprise running a multi-region e-commerce platform used LLM-assisted FinOps to analyze EC2 and S3 usage patterns. Within three months, they reduced idle EC2 costs by 35% and automated Glacier archiving for 60% of historical S3 data – delivering immediate savings while improving operational efficiency.

These optimizations not only reduce spend but also align cloud consumption with operational goals, driving efficiency and business value simultaneously.
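The commitment-sizing idea behind Reserved Instance and Savings Plan optimization can be shown with a deliberately simplified model. It assumes a single flat discount on committed usage and on-demand pricing for anything above the commitment; real Savings Plans vary by term, payment option, and instance family, so the 30% discount and the usage figures below are placeholders, not AWS prices.

```python
def total_cost(hourly_usage, commitment, discount):
    """Cost of a usage series when `commitment` (in on-demand dollars per hour)
    is billed every hour at a discount and any overflow is billed on demand."""
    committed = commitment * (1 - discount) * len(hourly_usage)
    overflow = sum(max(0.0, usage - commitment) for usage in hourly_usage)
    return committed + overflow


def best_commitment(hourly_usage, discount):
    """Pick the cheapest commitment level. The cost curve is piecewise linear,
    so it is enough to test zero and each observed usage level."""
    candidates = sorted({0.0, *hourly_usage})
    return min(candidates, key=lambda c: total_cost(hourly_usage, c, discount))


# Made-up hourly on-demand spend: steady at $10 with one quiet hour at $4.
usage = [10, 10, 10, 4]
commitment = best_commitment(usage, discount=0.30)
cost = total_cost(usage, commitment, discount=0.30)
print(commitment, round(cost, 2))
```

With a forecasted usage series in place of the historical one, the same comparison shows how sensitive the best commitment is to the forecast, which is where context-aware predictions matter most.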

Security and Governance Considerations

AI-driven insights must be delivered securely. Enterprises should follow best practices:

- Controlled Environment: Run LLM-powered FinOps tooling inside a secure Amazon VPC so that cost and usage data is not exposed to external APIs.

- Role-Based Access: Limit access to cost insights to authorized stakeholders only.

- Audit Integration: Combine AI-driven insights with CloudTrail logging and IAM policies to maintain transparency and compliance.

Secure deployment ensures LLMs enhance financial governance without exposing operational or architectural details.
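For the role-based access item, one possible starting point is an IAM policy that lets the FinOps assistant read cost data and nothing else. The sketch below builds such a policy document in Python; the variable names and the `Sid` are illustrative, and the action list is an assumption about what a read-only assistant would need, so it should be adjusted to your own governance requirements.

```python
import json

# Hypothetical read-only policy for a FinOps assistant role.
# It grants Cost Explorer read access only: no billing changes, no resource access.
finops_readonly_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "ReadCostDataOnly",
            "Effect": "Allow",
            "Action": ["ce:GetCostAndUsage", "ce:GetCostForecast"],
            "Resource": "*",
        }
    ],
}

policy_json = json.dumps(finops_readonly_policy, indent=2)
print(policy_json)
```

Because the assistant's calls are made under this role, they also appear in CloudTrail, which supports the audit integration described above.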

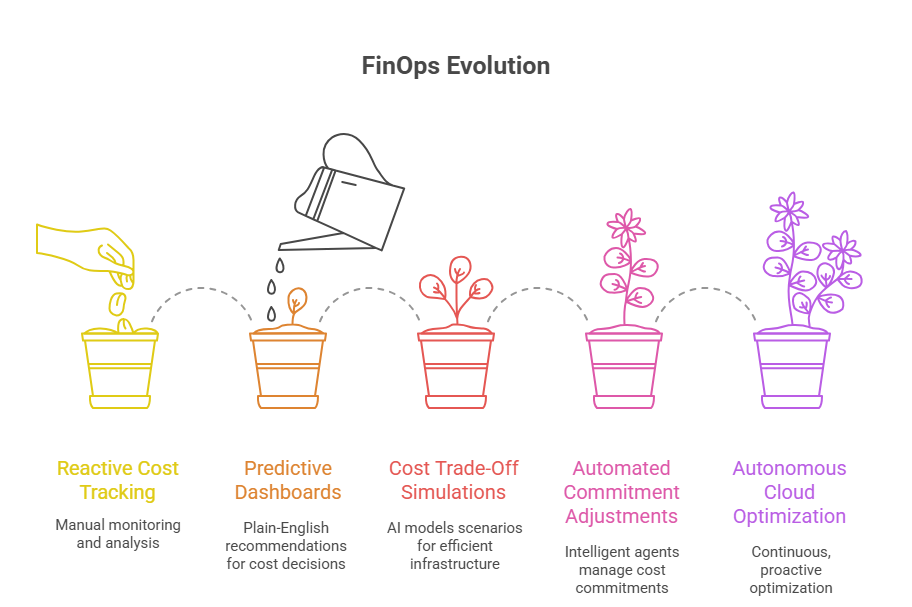

The Future of AI-Driven FinOps

The next frontier of FinOps moves beyond reactive cost tracking toward autonomous cloud optimization. Future capabilities include:

- Predictive Dashboards: CFOs and architects receive plain-English recommendations for actionable cost decisions.

- Cost Trade-Off Simulations: AI can model scenarios such as EC2 versus Lambda versus containerized workloads to identify the most cost-efficient infrastructure.

- Automated Commitment Adjustments: Intelligent agents can dynamically manage Reserved Instances, Savings Plans, or migration strategies based on evolving workloads.

This intelligence-driven approach transforms cloud financial management from reactive monitoring into continuous, proactive optimization – delivering measurable business value and operational agility.
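The arithmetic behind a cost trade-off simulation such as EC2 versus Lambda is simple enough to sketch. Every price below is a placeholder rather than a current AWS rate, and the function names are hypothetical; the point is only to show how the comparison flips as request volume grows.

```python
def lambda_monthly_cost(requests, avg_ms, memory_gb,
                        price_per_gb_second, price_per_million_requests):
    """Estimate monthly Lambda cost from compute time plus request charges."""
    gb_seconds = requests * (avg_ms / 1000) * memory_gb
    request_charge = (requests / 1_000_000) * price_per_million_requests
    return gb_seconds * price_per_gb_second + request_charge


def ec2_monthly_cost(instances, hourly_rate, hours=730):
    """Estimate monthly cost of always-on EC2 instances (about 730 hours per month)."""
    return instances * hourly_rate * hours


def cheaper_option(requests):
    """Compare both options for a workload, using placeholder prices."""
    lam = lambda_monthly_cost(requests, avg_ms=200, memory_gb=0.5,
                              price_per_gb_second=0.00002,
                              price_per_million_requests=0.20)
    ec2 = ec2_monthly_cost(instances=2, hourly_rate=0.10)
    return "lambda" if lam < ec2 else "ec2"


low_volume = cheaper_option(10_000_000)    # 10 million requests per month
high_volume = cheaper_option(100_000_000)  # 100 million requests per month
print(low_volume, high_volume)
```

Under these placeholder prices the pay-per-request model wins at low volume and the always-on instances win at high volume. A real simulation would also need current pricing, traffic shape, and operational overhead.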

Conclusion: Strategic Edge with Expertise

Large Language Models, when paired with disciplined FinOps practices, empower enterprises to move beyond simple cost tracking toward actionable business intelligence. Organizations can align AWS spend with performance, innovation, and financial accountability, unlocking operational efficiency and strategic value.

Success, however, depends on expertise. That’s where CloudThat comes in. With deep experience in cloud migration, FinOps, and managed services, CloudThat helps enterprises implement AI-driven cost optimization securely and effectively. By combining technical insights with financial governance, CloudThat ensures your AWS journey is efficient, cost-optimized, and aligned with business priorities – empowering organizations to scale in the cloud without compromising financial control.

About CloudThat

CloudThat is an award-winning company and the first in India to offer cloud training and consulting services worldwide. As a Microsoft Solutions Partner, AWS Advanced Tier Training Partner, and Google Cloud Platform Partner, CloudThat has empowered over 850,000 professionals through 600+ cloud certifications, winning global recognition for its training excellence, including 20 MCT Trainers in Microsoft’s Global Top 100 and 12 awards in the last 8 years. CloudThat specializes in Cloud Migration, Data Platforms, DevOps, IoT, and cutting-edge technologies like Gen AI & AI/ML. It has delivered over 500 consulting projects for 250+ organizations in 30+ countries as it continues to empower professionals and enterprises to thrive in the digital-first world.

FAQs

1. What is FinOps and how does it help with AWS cost management?

ANS: – FinOps is the practice of merging financial oversight with cloud operations to enable smarter, data-driven decisions. It helps organizations balance financial accountability with technical efficiency, making it easier to manage and optimize AWS costs.

2. How can Large Language Models (LLMs) optimize AWS spend?

ANS: – LLMs can analyze massive datasets, detect inefficiencies, and recommend smarter usage decisions. They enable natural language queries, automate anomaly detection, provide context-aware forecasting, and facilitate cost governance through tools like Slack or Teams.

WRITTEN BY Sana Pathan

Sana Pathan is the Head of Infra, Security & Migrations at CloudThat and also leads the Managed Services and FinOps verticals. She holds 7x AWS and Azure certifications, spanning professional and specialty levels, demonstrating deep expertise across multiple cloud domains. With extensive experience delivering solutions for customers in diverse industries, Sana has been instrumental in driving successful cloud migrations, implementing advanced security frameworks, and optimizing cloud costs through FinOps practices. By combining technical excellence with transparent communication and a customer-centric approach, she ensures organizations achieve secure, efficient, and cost-effective cloud adoption and operations.

September 24, 2025
