|

Voiced by Amazon Polly |

Overview

If you are new to data or just starting your journey in data analytics or data science, you might have heard the word data warehouse. But what does it really mean? And how can we use it with Python, one of the most popular programming languages today?

In this blog, we will understand the basic concepts of data warehousing. We will also look at how to use Python to work with data warehouses.

Pioneers in Cloud Consulting & Migration Services

- Reduced infrastructural costs

- Accelerated application deployment

Data Warehouse

In simple terms, a data warehouse is a central storage system where we keep data from different sources. These sources include Excel files, databases, web applications, APIs, or any company software.

A data warehouse is used mainly for reporting, analysis, and decision-making.

You can imagine it like a store room or godown where a shopkeeper keeps items from different suppliers. Similarly, a company stores different data types in one place for easy access and better understanding.

Why Do We Need a Data Warehouse?

Here are a few important reasons why data warehouses are used:

- Single source of truth – All data from different departments is stored in one place.

- Easy reporting – Reports and dashboards can be created easily.

- Better analysis – Helps identify trends, customer behaviour, and performance.

- Faster results – Data is organised to make it quicker to get results.

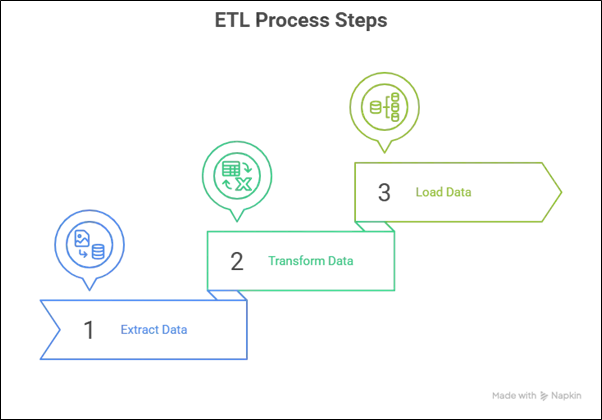

ETL – The Heart of Data Warehousing

One of the most important parts of data warehousing is the ETL process. ETL stands for:

- Extract – Getting data from different sources (Excel, databases, APIs, etc.)

- Transform – Cleaning the data, formatting it, fixing errors, etc.

- Load – Storing the final, cleaned data into the data warehouse

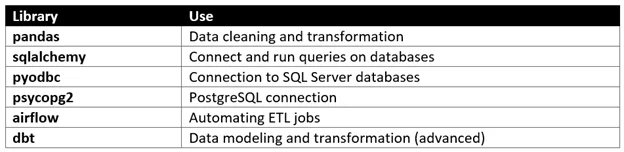

Python is a very good language for writing ETL processes. Some useful Python libraries are:

- pandas – For working with data tables

- sqlalchemy – For connecting to databases

- pyodbc or psycopg2 – For connecting to SQL Server or PostgreSQL

- airflow – For automating and scheduling ETL tasks

Example: ETL in Python

Let us look at a simple ETL example in Python.

Step 1: Extract data

|

1 2 3 4 |

import pandas as pd # Read Excel file data = pd.read_excel("sales_data.xlsx") |

Step 2: Transform data

|

1 2 3 4 5 |

# Remove missing values data = data.dropna() # Convert the date column to datetime data['Date'] = pd.to_datetime(data['Date']) |

Step 3: Load data into SQL database

|

1 2 3 4 5 6 7 |

from sqlalchemy import create_engine # Connect to PostgreSQL database engine = create_engine('postgresql://user:password@localhost/mydatabase') # Load data into table data.to_sql('sales', engine, if_exists='replace', index=False) |

With these three steps, we completed a simple ETL process using Python.

Star Schema and Snowflake Schema

To organise data in a data warehouse, we use schemas.

- Star Schema

- Has one central table called the fact table.

- Other surrounding tables are called dimension tables.

- Easy to understand and good for fast reporting.

- Snowflake Schema

- A more complex version of the star schema.

- Dimension tables are further divided.

- Saves space but can be slower to query.

Most companies use a star schema for business reports because it is simple and efficient.

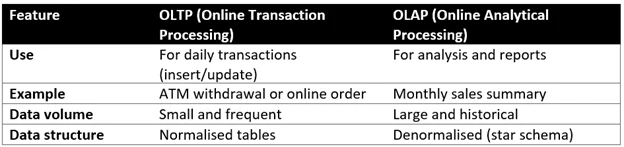

OLTP vs OLAP

Two common terms in databases are OLTP and OLAP. Let us understand the difference.

A data warehouse is built for OLAP, not OLTP.

Useful Python Libraries for Data Warehousing

Here are some important Python tools that help in data warehousing tasks:

Cloud Data Warehouses

Many companies now prefer to use cloud-based data warehouses instead of setting up physical servers. These are faster, scalable, and easier to manage.

Some popular cloud data warehouses are:

- Snowflake

- Google BigQuery

- Amazon Redshift

- Azure Synapse

Python can also connect to these platforms using specific libraries.

Example – Connecting to Snowflake:

|

1 2 3 4 5 6 7 8 9 10 11 12 |

import snowflake.connector conn = snowflake.connector.connect( user='your_user', password='your_password', account='your_account' ) cursor = conn.cursor() cursor.execute("SELECT * FROM my_table") for row in cursor: print(row) |

Real-Life Use Case

Suppose a company like Flipkart wants to know how many items were sold in Maharashtra in the last 3 months.

- First, they extract data from their sales, customer, and product databases.

- Next, they clean and filter the data to show only Maharashtra and recent orders.

- Finally, they load this data into the data warehouse to create a report or dashboard.

Data engineers handle this entire process using Python and ETL pipelines.

Conclusion

To sum up, data warehousing is a key component in modern data management, helping organisations store, manage, and analyse large volumes of data effectively. Python, with its powerful libraries and simplicity, is widely used to build and automate ETL processes, making it a valuable tool for anyone working in data engineering or analytics.

Understanding concepts like ETL, data schemas, OLAP vs OLTP, and cloud data warehouses lays a strong foundation for working on real-world data projects. As the demand for data-driven decision-making grows, these skills will continue to be in high demand across industries.

Drop a query if you have any questions regarding Data Warehouse and we will get back to you quickly.

Empowering organizations to become ‘data driven’ enterprises with our Cloud experts.

- Reduced infrastructure costs

- Timely data-driven decisions

About CloudThat

CloudThat is an award-winning company and the first in India to offer cloud training and consulting services worldwide. As a Microsoft Solutions Partner, AWS Advanced Tier Training Partner, and Google Cloud Platform Partner, CloudThat has empowered over 850,000 professionals through 600+ cloud certifications winning global recognition for its training excellence including 20 MCT Trainers in Microsoft’s Global Top 100 and an impressive 12 awards in the last 8 years. CloudThat specializes in Cloud Migration, Data Platforms, DevOps, IoT, and cutting-edge technologies like Gen AI & AI/ML. It has delivered over 500 consulting projects for 250+ organizations in 30+ countries as it continues to empower professionals and enterprises to thrive in the digital-first world.

FAQs

1. What is the role of Python in data warehouse automation?

ANS: – Python is often used to automate the ETL process. Instead of manually extracting, cleaning, and loading data daily, you can write a Python script that does it automatically. You can also schedule this script using tools like Apache Airflow or even basic schedulers like cron jobs (Linux) or Task Scheduler (Windows). This reduces human error and saves time in data operations.

2. Is Python enough to become a data warehouse developer?

ANS: – Python is very useful for writing ETL pipelines and automating data tasks, but to become a complete data warehouse developer, you also need to know:

- SQL (for querying and managing data)

- Data modeling (star schema, fact, and dimension tables)

- Database design and performance tuning

- ETL tools (like Apache Airflow, dbt, Talend)

- Cloud platforms (like AWS, GCP, Azure)

WRITTEN BY Hridya Hari

Hridya Hari is a Subject Matter Expert in Data and AIoT at CloudThat. She is a passionate data science enthusiast with expertise in Python, SQL, AWS, and exploratory data analysis.

Login

Login

September 1, 2025

September 1, 2025 PREV

PREV

Comments