
Introduction

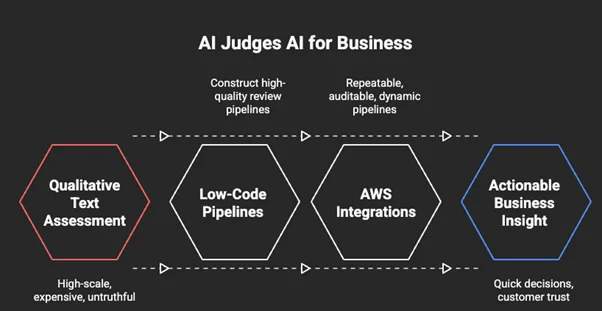

Today’s organizations need to draw reliable conclusions from large volumes of unstructured text, such as questionnaires, customer feedback, and product reviews. Manual verification does not scale: checking even a few thousand records can take weeks. Large language models (LLMs) have made summarization easier, but ensuring that the summaries are accurate and reliable remains a concern.

This is where LLMs reviewing LLMs, known as LLM jury systems, come in. Companies can have multiple LLMs review the output of another model. This lets them build scalable pipelines that approximate human-level review while keeping speed and efficiency. Amazon Bedrock and the Amazon Nova family of models provide the foundation for building such systems with minimal custom code.

Understanding LLM Jury Systems

An LLM jury system works in three steps:

- Summarization – A single LLM processes the raw input text (for example, thousands of questionnaire answers) in one pass and generates thematic summaries.

- Evaluation – A jury of different LLMs independently grades how well each summary matches the source data.

- Aggregation – The scores are combined into a consensus, reducing the impact of any single model’s bias.

This approach helps catch hallucinations and reduces confirmation bias, producing more robust results than a single-model pipeline. Keyword-based assessments ignore context. Jury systems account for contextual meaning, which makes them well suited to nuanced text such as sentiment-laden comments or open-ended survey responses.
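The three steps can be sketched as a small, model-agnostic pipeline. This is a minimal sketch with several assumptions:

- The summarizer and the judges are plain Python callables. The toy functions below are hypothetical stand-ins, not real Bedrock calls.
- Each judge returns an integer score from 1 to 5.
- Aggregation uses the median. This is one choice among several; it limits the pull of a single outlier judge.

```python
from statistics import median
from typing import Callable, Dict, List

# Hypothetical signatures: a summarizer maps text -> summary,
# and a judge maps (summary, text) -> integer score from 1 to 5.
Summarizer = Callable[[str], str]
Judge = Callable[[str, str], int]


def run_jury(text: str, summarize: Summarizer, jury: Dict[str, Judge]) -> dict:
    # Step 1: summarization.
    summary = summarize(text)
    # Step 2: each judge scores the summary independently.
    scores = {name: judge(summary, text) for name, judge in jury.items()}
    # Step 3: aggregation; the median resists a single outlier judge.
    values: List[int] = list(scores.values())
    return {
        "summary": summary,
        "scores": scores,
        "consensus": median(values),
        "spread": max(values) - min(values),  # large spread = jury disagrees
    }


# Stand-in components for illustration only (no model calls).
def toy_summarizer(text: str) -> str:
    return "Good product, late delivery"

def lenient_judge(summary: str, text: str) -> int:
    return 5

def strict_judge(summary: str, text: str) -> int:
    return 4

def outlier_judge(summary: str, text: str) -> int:
    return 1


result = run_jury(
    "The product is good but delivery was late.",
    toy_summarizer,
    {"lenient": lenient_judge, "strict": strict_judge, "outlier": outlier_judge},
)
print(result["consensus"], result["spread"])  # 4 4
```

With a mean, the outlier would drag the consensus down to about 3.33. The median stays at 4. The `spread` field is a natural signal for routing a record to human review when the jurors disagree strongly.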

Why Should AI Judge AI?

- Scalability: Thousands of text records can be processed in minutes rather than weeks.

- Objectivity: Multiple assessors reduce individual model biases.

- Low-Code Deployment: Amazon Bedrock abstracts away infrastructure and enables orchestration with little engineering effort.

- Reliability: Agreement between the LLM jury and human reviewers can be measured with metrics such as Cohen’s kappa, which quantifies agreement beyond what chance alone would produce.

- Adaptability: Jury systems can be readily calibrated for different industries (retail, healthcare, finance) by adjusting the evaluation prompts.
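Cohen’s kappa compares the observed agreement p_o between two raters with the agreement p_e expected by chance, using kappa = (p_o - p_e) / (1 - p_e). Below is a minimal pure-Python sketch. It assumes human and jury labels are paired per record. The example labels are made up for illustration; they are not measured results.

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(rater_a: Sequence, rater_b: Sequence) -> float:
    """Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Ratings must be non-empty and the same length")
    n = len(rater_a)
    # Observed agreement: fraction of items where both raters chose the same label.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: probability that two independent raters with these
    # label frequencies pick the same label.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if p_e == 1:
        return 1.0  # convention here: both raters used one identical label throughout
    return (p_o - p_e) / (1 - p_e)


# Illustrative labels only: a human reviewer vs. the jury's pass/fail verdicts.
human = ["pass", "pass", "fail", "pass", "fail", "pass"]
jury = ["pass", "fail", "fail", "pass", "fail", "pass"]
print(round(cohens_kappa(human, jury), 3))  # 0.667
```

In this example the raters agree on 5 of 6 items (p_o ≈ 0.833). Their label frequencies imply a chance agreement of 0.5, so kappa is 0.667. Raw agreement alone would overstate how well the jury matches the human.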

Amazon Nova and Amazon Bedrock Integration

The Amazon Nova model family is well suited to jury-based workflows:

- Amazon Nova Micro: Low-cost, low-latency, text-only model for fast text analysis.

- Amazon Nova Lite: Low-cost multimodal model that balances speed and capability.

- Amazon Nova Pro: High-accuracy model for advanced evaluation.
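A jury built from these models can be described with a small configuration. Here Nova Pro is the summarizer and Nova Lite and Nova Micro are the judges. A few points are assumptions:

- The model IDs follow Amazon Bedrock’s Nova naming (`amazon.nova-*-v1:0`). Availability varies by region, and some regions require an inference profile ID such as `us.amazon.nova-pro-v1:0`.
- The `JuryConfig` class is hypothetical.
- The two validation rules are illustrative design choices, not Bedrock requirements.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class JuryConfig:
    # Hypothetical configuration object; only the model IDs are Bedrock names.
    summarizer: str
    judges: Tuple[str, ...]

    def validate(self) -> None:
        # Keep the summarizer off the jury so no model grades its own output.
        if self.summarizer in self.judges:
            raise ValueError("The summarizer should not also sit on the jury")
        # Illustrative rule: a single judge would not be a jury.
        if len(self.judges) < 2:
            raise ValueError("A jury needs at least two judges")


DEFAULT_JURY = JuryConfig(
    summarizer="amazon.nova-pro-v1:0",
    judges=("amazon.nova-lite-v1:0", "amazon.nova-micro-v1:0"),
)
DEFAULT_JURY.validate()
```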

Through Amazon Bedrock, organizations can orchestrate these models into jury pipelines without managing infrastructure. Amazon Bedrock works with AWS services such as AWS Lambda, AWS Step Functions, and Amazon DynamoDB. Together they let organizations automate evaluation workflows, store results, and feed insights into dashboards or reporting applications. The result is an end-to-end pipeline from ingestion to decision-making.
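Storing jury results in DynamoDB can be sketched as follows. This sketch makes three assumptions:

- The table is named `jury-evaluations` with a string partition key `record_id`. Both names are hypothetical.
- The client is passed in as a parameter. In production it would be `boto3.client("dynamodb")`; a stand-in works for local testing.
- The consensus is the mean of the jury scores, matching the example below.

The code uses the low-level `put_item` call, in which every number is sent as a string under the `"N"` type.

```python
from datetime import datetime, timezone
from typing import Any, Dict


def to_dynamodb_item(record_id: str, summary: str, scores: Dict[str, int]) -> dict:
    # Low-level DynamoDB attribute-value format: "S" for strings,
    # "N" for numbers (always sent as strings), "M" for nested maps.
    consensus = sum(scores.values()) / len(scores)
    return {
        "record_id": {"S": record_id},
        "summary": {"S": summary},
        "scores": {"M": {judge: {"N": str(score)} for judge, score in scores.items()}},
        "consensus": {"N": str(consensus)},
        "evaluated_at": {"S": datetime.now(timezone.utc).isoformat()},
    }


def store_result(dynamodb_client: Any, table_name: str, item: dict) -> None:
    # dynamodb_client is expected to be boto3.client("dynamodb").
    dynamodb_client.put_item(TableName=table_name, Item=item)
```

Keeping each juror's score alongside the consensus makes later audits possible. For example, you can check whether one model consistently rates lower than the others.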

Example: Jury Evaluation Workflow with Amazon Bedrock

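```python
import json
import re
import boto3

# Bedrock Runtime client. Some regions require inference profile IDs
# such as "us.amazon.nova-pro-v1:0" instead of the base model IDs below.
client = boto3.client("bedrock-runtime", region_name="us-east-1")

def invoke_model(model_id, prompt):
    # Amazon Nova expects the "messages-v1" schema, not Titan's {"inputText": ...}.
    body = {
        "schemaVersion": "messages-v1",
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": 300, "temperature": 0.0},
    }
    response = client.invoke_model(modelId=model_id, body=json.dumps(body))
    result = json.loads(response["body"].read())
    return result["output"]["message"]["content"][0]["text"]

def parse_rating(text):
    # Judges may wrap the score in words, so take the first digit 1-5 (simple heuristic).
    match = re.search(r"[1-5]", text)
    if match is None:
        raise ValueError(f"No 1-5 rating in response: {text!r}")
    return int(match.group())

raw_text = "Customer feedback: The product is good but delivery was late. Support was responsive."

# Summarization by the higher-capacity model.
summary = invoke_model("amazon.nova-pro-v1:0", f"Summarize the following text into one theme: {raw_text}")

# Evaluation by a jury of lighter models.
eval_prompt = ("Rate the alignment of this summary with the text (1-5). Reply with a single digit.\n"
               f"Summary: {summary}\nText: {raw_text}")
ratings = [parse_rating(invoke_model(m, eval_prompt))
           for m in ("amazon.nova-lite-v1:0", "amazon.nova-micro-v1:0")]

# Aggregation into a consensus score.
final_score = sum(ratings) / len(ratings)
print("Generated Summary:", summary)
print("Final Jury Score:", final_score)
```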

This demonstrates:

- Summarization by a higher-capacity model (Amazon Nova Pro).

- Assessment by multiple lighter models.

- A consensus score obtained by averaging the jury’s ratings.
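As an alternative to `invoke_model`, the boto3 `bedrock-runtime` client also provides the Converse API. Converse uses one request and response shape across Bedrock models, so a single helper can call every juror. This sketch assumes the client comes from `boto3.client("bedrock-runtime")`. The client is passed in as a parameter so the helper can be exercised without AWS credentials. The helper name `converse_text` is hypothetical.

```python
from typing import Any


def converse_text(client: Any, model_id: str, prompt: str, max_tokens: int = 300) -> str:
    # Converse uses the same message format for every Bedrock model,
    # so jurors can be swapped by changing model_id only.
    response = client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": max_tokens, "temperature": 0.0},
    )
    # The reply text sits in the first content block of the output message.
    return response["output"]["message"]["content"][0]["text"]
```

Setting the temperature to 0 makes repeated judgments more consistent. It does not strictly guarantee identical outputs.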

At production volume, this pattern can scale to thousands of records. AWS Lambda can handle parallel execution, and AWS Step Functions can handle orchestration. Summaries and scores can be stored in Amazon DynamoDB for auditing and later comparison.
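Before moving to Lambda or Step Functions, the same fan-out can be prototyped locally with a thread pool, since model calls spend most of their time waiting on the network. This sketch assumes a hypothetical `evaluate_record` callable that runs the summarize-and-judge steps for one record and returns its consensus score. A failure on one record is captured instead of aborting the batch.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable, Dict


def evaluate_batch(records: Dict[str, str],
                   evaluate_record: Callable[[str], float],
                   max_workers: int = 8) -> Dict[str, dict]:
    results: Dict[str, dict] = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # One task per record; I/O-bound model calls overlap across threads.
        futures = {pool.submit(evaluate_record, text): rid for rid, text in records.items()}
        for future in as_completed(futures):
            rid = futures[future]
            try:
                results[rid] = {"score": future.result()}
            except Exception as exc:  # keep the batch going; record the failure
                results[rid] = {"error": str(exc)}
    return results
```

Amazon Bedrock enforces per-model request quotas, so `max_workers` should stay within your account’s limits. Throttled calls raise exceptions, which this sketch records as error entries for later retry.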

Conclusion

Using AI to judge AI is no longer confined to research. It is a practical business strategy for organizations that need large-scale qualitative text assessment without sacrificing accuracy.

As the volume of unstructured text data grows, these systems will increasingly turn noise into actionable insight. That helps businesses respond more quickly, make better decisions, and keep their customers’ trust.

Drop a query if you have any questions regarding Amazon Bedrock or Amazon Nova and we will get back to you quickly.

About CloudThat

CloudThat is an award-winning company and the first in India to offer cloud training and consulting services worldwide. As a Microsoft Solutions Partner, AWS Advanced Tier Training Partner, and Google Cloud Platform Partner, CloudThat has empowered over 850,000 professionals through 600+ cloud certifications. Its training excellence has won global recognition, including 20 MCT Trainers in Microsoft’s Global Top 100 and an impressive 12 awards in the last 8 years. CloudThat specializes in Cloud Migration, Data Platforms, DevOps, IoT, and cutting-edge technologies like Gen AI & AI/ML. It has delivered over 500 consulting projects for 250+ organizations in 30+ countries as it continues to empower professionals and enterprises to thrive in the digital-first world.

FAQs

1. What is an LLM jury system?

ANS: – A process in which multiple LLMs evaluate the output of another model and submit scores. The scores are combined into a consensus that establishes how credible that output is.

2. Why use more than one AI model instead of one?

ANS: – Combining several models reduces the bias, hallucinations, and errors that can go unchecked in a single-model system.

3. How does Amazon Bedrock make governance possible?

ANS: – Amazon Bedrock offers governed access to models such as Amazon Nova and enables low-code orchestration of jury systems without requiring custom infrastructure.

WRITTEN BY Daniya Muzammil

Daniya works as a Research Associate at CloudThat, specializing in backend development and cloud-native architectures. She designs scalable solutions leveraging AWS services with expertise in Amazon CloudWatch for monitoring and AWS CloudFormation for automation. Skilled in Python, React, HTML, and CSS, Daniya also experiments with IoT and Raspberry Pi projects, integrating edge devices with modern cloud systems.

September 4, 2025
