

In a significant development for the AI community, OpenAI has released its first open-weight models since GPT-2: GPT-OSS-120b and GPT-OSS-20b. Both models are now available on Amazon Web Services (AWS) through Amazon Bedrock and Amazon SageMaker JumpStart. The release broadens developer access to capable AI models, and the AWS integration gives enterprises a scalable, secure and cost-effective way to run them.


What Are GPT-OSS Models?

The GPT-OSS models are large language models (LLMs) designed for a variety of tasks, including text generation, reasoning and coding. GPT-OSS-120b has roughly 117 billion parameters and GPT-OSS-20b roughly 21 billion. Both use a mixture-of-experts architecture that activates only a fraction of those parameters for each token (about 5.1 billion and 3.6 billion, respectively), which keeps them efficient enough to run on hardware ranging from consumer-grade machines to enterprise-level infrastructure.

Released under the permissive Apache 2.0 license, these models are freely available for download and can be customized for specific use cases. The GPT-OSS-120b model has been shown to achieve near-parity with OpenAI’s proprietary o4-mini model on core reasoning benchmarks, while running efficiently on a single 80 GB GPU. The GPT-OSS-20b model delivers similar results to OpenAI’s o3-mini on common benchmarks and can run on edge devices with just 16 GB of memory, making it ideal for on-device use cases, local inference or rapid iteration without costly infrastructure.
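The single-GPU and 16 GB figures follow from simple arithmetic. The sketch below is a back-of-the-envelope estimate only. It assumes the approximate total parameter counts given above (117B and 21B) and, as a simplification, that every weight is stored in MXFP4, the 4-bit format OpenAI uses for the models' mixture-of-experts weights (4-bit values plus one shared 8-bit scale per block of 32, about 4.25 bits per weight). Real checkpoints keep some layers at higher precision, and the KV cache and activations need extra memory, so treat the results as lower bounds.

```python
# Back-of-the-envelope weight-memory estimate (weights only: excludes the KV cache,
# activations and runtime overhead). Parameter counts are approximate.
MODELS_BILLIONS = {"gpt-oss-120b": 117, "gpt-oss-20b": 21}

# Bits per weight for a few storage formats. MXFP4 stores 4-bit values plus one
# shared 8-bit scale per block of 32 values: 4 + 8/32 = 4.25 bits per weight.
BITS_PER_WEIGHT = {"bf16": 16.0, "int8": 8.0, "mxfp4": 4.25}


def weight_memory_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate weight footprint in GB (1 GB = 1e9 bytes).

    Billions of parameters x bits per weight / 8 bits per byte = billions of bytes.
    """
    return params_billions * bits_per_weight / 8


def fits(params_billions: float, fmt: str, budget_gb: float) -> bool:
    """True if the weights alone fit within budget_gb in the given format."""
    return weight_memory_gb(params_billions, BITS_PER_WEIGHT[fmt]) <= budget_gb


def report() -> None:
    """Print the estimate for every model/format pair."""
    for name, size in MODELS_BILLIONS.items():
        for fmt, bits in BITS_PER_WEIGHT.items():
            print(f"{name:13s} {fmt:6s} {weight_memory_gb(size, bits):7.1f} GB")
```

Under these simplifying assumptions, GPT-OSS-120b needs roughly 62 GB for its weights in MXFP4 (inside an 80 GB budget) versus about 234 GB in BF16, and GPT-OSS-20b needs roughly 11 GB (inside 16 GB).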

Integration with AWS: Amazon Bedrock and SageMaker JumpStart

Because the models are part of the AWS ecosystem, developers can combine them with the tools and services AWS already provides for building, securing and scaling AI applications.

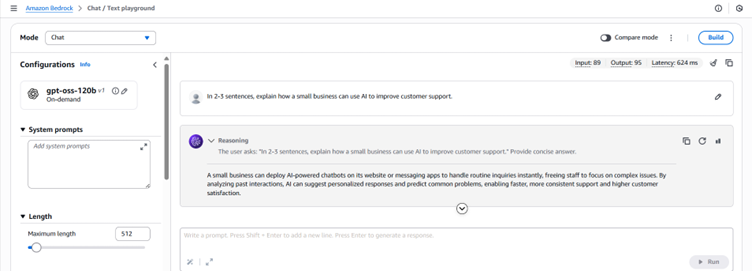

Amazon Bedrock provides a fully managed environment for building and scaling generative AI applications. With GPT-OSS models in Amazon Bedrock, developers can use OpenAI’s models alongside other leading AI models through a single, unified API, so they can choose the best model for each use case with minimal changes to application code. Developers can also tailor how the models are used for their particular business needs while keeping control over their data.

Fig 1: Amazon Bedrock Text Playground interface showing configuration of the GPT-OSS-120B model with a prompt example for AI-powered customer support.
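To make the "single, unified API" concrete, here is a minimal sketch that calls a GPT-OSS model through the Amazon Bedrock Converse API with boto3. It assumes boto3 is installed, AWS credentials are configured and the model is available to your account. The model ID `openai.gpt-oss-120b-1:0` and the Region `us-west-2` are assumptions; check the Bedrock console for the values that apply to you. Because these are reasoning models, a response may contain a reasoning block before the answer, so the helper keeps only blocks that carry text.

```python
"""Minimal sketch: call a GPT-OSS model through the Amazon Bedrock Converse API."""
from typing import Any, Dict, Optional

MODEL_ID = "openai.gpt-oss-120b-1:0"  # assumed model ID; verify in the Bedrock console
REGION = "us-west-2"  # assumed Region; use one where the model is offered


def build_converse_request(prompt: str, system: Optional[str] = None,
                           max_tokens: int = 512,
                           temperature: float = 0.5) -> Dict[str, Any]:
    """Build keyword arguments for the bedrock-runtime converse() call."""
    request: Dict[str, Any] = {
        "modelId": MODEL_ID,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": temperature},
    }
    if system:
        request["system"] = [{"text": system}]
    return request


def extract_text(response: Dict[str, Any]) -> str:
    """Join the text blocks of a Converse response.

    Reasoning models may return a reasoningContent block before the final
    answer; only blocks that carry a "text" key are kept here.
    """
    blocks = response["output"]["message"]["content"]
    return "".join(block["text"] for block in blocks if "text" in block)


def ask(prompt: str, system: Optional[str] = None) -> str:
    """Send one prompt and return the model's text answer (needs AWS access)."""
    import boto3  # imported lazily so the helpers above work without boto3

    client = boto3.client("bedrock-runtime", region_name=REGION)
    response = client.converse(**build_converse_request(prompt, system))
    return extract_text(response)


# Example (requires credentials and model access):
# print(ask("Summarize our refund policy in two sentences.",
#           system="You are a concise customer-support assistant."))
```

Switching to GPT-OSS-20b, or to another Bedrock model, only means changing `MODEL_ID`; the request and response shapes stay the same, which is the practical benefit of the Converse API.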

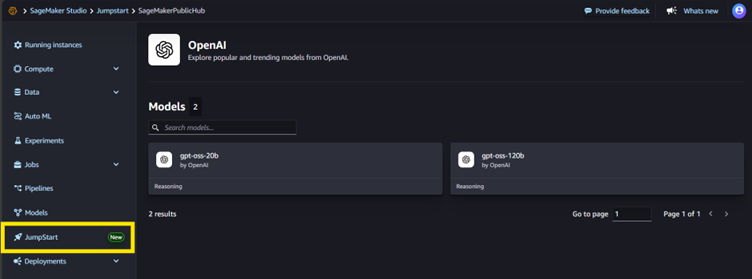

Amazon SageMaker JumpStart offers a comprehensive suite of tools for building, training and deploying machine learning models. Developers can quickly evaluate, compare and customize GPT-OSS models for their use cases. Once customized, these models can be deployed in production using the SageMaker AI console or the SageMaker Python SDK. This streamlined process accelerates the development cycle and simplifies the deployment of AI applications.

Fig 2: Amazon SageMaker AI Studio JumpStart interface highlighting the availability of OpenAI models (GPT-OSS-20b and GPT-OSS-120b) for deployment and experimentation.
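The SageMaker Python SDK route can be sketched as follows. This assumes the `sagemaker` package is installed and that you run with an IAM role allowed to create endpoints. The JumpStart model ID below is hypothetical (copy the real ID from SageMaker Studio), and the chat-style request body with `messages` and `max_tokens` is an assumption about the serving container, so confirm it against the model card before relying on it.

```python
"""Sketch: deploy a GPT-OSS model from SageMaker JumpStart and send one request."""
from typing import Any, Dict, List

MODEL_ID = "openai-reasoning-gpt-oss-20b"  # hypothetical; copy the real ID from JumpStart


def build_payload(messages: List[Dict[str, str]], max_tokens: int = 1024,
                  temperature: float = 0.2) -> Dict[str, Any]:
    """Chat-style request body (assumed schema; check the model card)."""
    return {"messages": messages, "max_tokens": max_tokens, "temperature": temperature}


def deploy_and_query(prompt: str) -> Any:
    """Deploy an endpoint, send one prompt, then clean up (needs AWS access)."""
    # Imported lazily so build_payload() can be used without the SDK installed.
    from sagemaker.jumpstart.model import JumpStartModel

    model = JumpStartModel(model_id=MODEL_ID)
    # With no arguments, deploy() uses the default instance type JumpStart lists.
    predictor = model.deploy()
    try:
        return predictor.predict(build_payload([{"role": "user", "content": prompt}]))
    finally:
        # Delete the model and endpoint so a test deployment does not keep billing.
        predictor.delete_model()
        predictor.delete_endpoint()
```

Deploying and deleting inside one function suits a quick evaluation; for production you would keep the endpoint running and call `predictor.predict()` (or the SageMaker runtime API) from your application.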

Key Features and Benefits

- Extended Context Windows: Both models support a 128K context window, allowing them to handle longer documents and more complex tasks without losing coherence.

- Adjustable Reasoning Levels: Developers can set the models’ reasoning effort to low, medium or high, trading response latency for depth of reasoning to suit each task.

- Tool Integration: The models support external tools to enhance their capabilities and can be used in agentic workflows, such as those built with frameworks like Strands Agents.

- Enterprise-Grade Security: Amazon Bedrock offers enterprise-grade security features, including Amazon Bedrock Guardrails, which AWS states can block up to 88% of harmful content using configurable safeguards. These controls help AI applications built with GPT-OSS models meet security and safety standards.

- Cost Efficiency: According to AWS, GPT-OSS-120b on Amazon Bedrock is three times more price-efficient than a comparable Gemini model and five times more price-efficient than DeepSeek-R1, making it a cost-effective choice for enterprises.
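Three of these features (reasoning levels, tool use and Guardrails) come together in a single Converse request. The sketch below is illustrative only. It assumes the model ID shown, assumes the model honors a `Reasoning: low|medium|high` line in the system prompt (the convention OpenAI describes for GPT-OSS; verify how Bedrock exposes it), and assumes you have already created a guardrail if you pass its ID. `get_order_status` is a hypothetical tool defined only for this example.

```python
"""Sketch: Converse loop with a reasoning level, one tool and an optional guardrail."""
from typing import Any, Callable, Dict, List, Optional

MODEL_ID = "openai.gpt-oss-20b-1:0"  # assumed model ID; verify in the Bedrock console
REASONING_LEVELS = ("low", "medium", "high")

# Tool definition in the Converse toolSpec format (JSON Schema for the input).
ORDER_TOOL = {
    "toolSpec": {
        "name": "get_order_status",
        "description": "Look up the shipping status of an order by its ID.",
        "inputSchema": {"json": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        }},
    }
}


def get_order_status(order_id: str) -> Dict[str, Any]:
    """Hypothetical local tool; a real one would query your order system."""
    return {"order_id": order_id, "status": "shipped"}


TOOLS: Dict[str, Callable[..., Dict[str, Any]]] = {"get_order_status": get_order_status}


def build_request(messages: List[Dict[str, Any]], reasoning: str = "medium",
                  guardrail_id: Optional[str] = None,
                  guardrail_version: str = "DRAFT") -> Dict[str, Any]:
    """Build converse() keyword arguments with tools and an optional guardrail."""
    if reasoning not in REASONING_LEVELS:
        raise ValueError(f"reasoning must be one of {REASONING_LEVELS}")
    request: Dict[str, Any] = {
        "modelId": MODEL_ID,
        "messages": messages,
        "system": [{"text": f"Reasoning: {reasoning}"}],  # assumed convention
        "toolConfig": {"tools": [ORDER_TOOL]},
    }
    if guardrail_id:
        request["guardrailConfig"] = {
            "guardrailIdentifier": guardrail_id,
            "guardrailVersion": guardrail_version,
        }
    return request


def run_tool_calls(assistant_message: Dict[str, Any]) -> Optional[Dict[str, Any]]:
    """Run every toolUse block; return the user message carrying the results.

    Returns None when the model did not request a tool.
    """
    results = []
    for block in assistant_message["content"]:
        if "toolUse" in block:
            call = block["toolUse"]
            output = TOOLS[call["name"]](**call["input"])
            results.append({"toolResult": {
                "toolUseId": call["toolUseId"],
                "content": [{"json": output}],
            }})
    return {"role": "user", "content": results} if results else None


def chat(prompt: str, reasoning: str = "medium", max_turns: int = 5) -> str:
    """Ask a question, executing tool calls until the model answers (needs AWS access)."""
    import boto3  # lazy import: the helpers above run without boto3

    client = boto3.client("bedrock-runtime")
    messages: List[Dict[str, Any]] = [{"role": "user", "content": [{"text": prompt}]}]
    for _ in range(max_turns):
        response = client.converse(**build_request(messages, reasoning))
        message = response["output"]["message"]
        messages.append(message)
        tool_reply = run_tool_calls(message)
        if response["stopReason"] != "tool_use" or tool_reply is None:
            return "".join(b["text"] for b in message["content"] if "text" in b)
        messages.append(tool_reply)
    raise RuntimeError("model kept requesting tools; giving up")
```

Capping the loop with `max_turns` is a design choice that keeps a misbehaving tool exchange from running up cost; agent frameworks such as Strands Agents manage this loop for you.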

Real-World Applications

The GPT-OSS models are versatile and can be applied across various industries:

- Healthcare: Analyze medical literature, assist in diagnostics and support personalized patient care.

- Finance: Automate financial reporting, perform risk assessments and provide investment insights.

- Education: Develop intelligent tutoring systems, create personalized learning experiences and assist in content creation.

- Customer Service: Enhance chatbots, automate support tickets and provide real-time assistance to customers.

By integrating GPT-OSS models into their workflows, organizations can streamline operations, improve decision-making and deliver better services to their clients.

Getting Started

To begin using the GPT-OSS models on AWS:

- Access Amazon Bedrock or SageMaker JumpStart: Log in to your AWS account and navigate to the respective service.

- Select the GPT-OSS Model: Choose either the GPT-OSS-120b or GPT-OSS-20b model based on your requirements.

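- Deploy the Model: In Amazon Bedrock the models are serverless, so there is no infrastructure to deploy; enable model access if your account requires it. In SageMaker JumpStart, deploy the model to an endpoint from SageMaker Studio or with the SageMaker Python SDK.

- Integrate into Your Application: Call the model from your existing applications through the Amazon Bedrock API or your SageMaker endpoint.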
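Before wiring a model ID into your application, it helps to confirm which GPT-OSS models your Region actually offers. This small sketch lists Bedrock foundation models with boto3 and filters them client-side. It assumes boto3 is installed, credentials are configured, and that the relevant model IDs contain the substring `gpt-oss`, which is an assumption to verify against the console.

```python
"""Sketch: find the GPT-OSS model IDs offered in a Region."""
from typing import Any, Dict, List


def gpt_oss_model_ids(summaries: List[Dict[str, Any]]) -> List[str]:
    """Return sorted model IDs that mention gpt-oss."""
    return sorted(s["modelId"] for s in summaries if "gpt-oss" in s["modelId"])


def list_gpt_oss_models(region: str) -> List[str]:
    """Query Bedrock for available foundation models (needs AWS access)."""
    import boto3  # lazy import so gpt_oss_model_ids() is testable offline

    # "bedrock" is the control-plane client; "bedrock-runtime" is for inference.
    bedrock = boto3.client("bedrock", region_name=region)
    response = bedrock.list_foundation_models()
    return gpt_oss_model_ids(response["modelSummaries"])
```

Filtering on the client keeps the sketch independent of the exact provider-name spelling the API expects.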

Detailed documentation and tutorials are available on the AWS website to assist you in the setup process. If you are interested in diving deeper into the world of AI, check out courses like Generative AI Essentials to explore advanced concepts and use cases.

Shaping the Future of Open-Weight AI on AWS

The availability of OpenAI’s GPT-OSS models on AWS marks a significant milestone in the evolution of generative AI. Combining open-weight models with AWS’s infrastructure lets developers and enterprises build AI solutions that are scalable, secure and cost-effective. As AI continues to transform industries, the collaboration between OpenAI and AWS sets the stage for the next generation of intelligent applications.


About CloudThat

CloudThat is an award-winning company and the first in India to offer cloud training and consulting services worldwide. As a Microsoft Solutions Partner, AWS Advanced Tier Training Partner, and Google Cloud Platform Partner, CloudThat has empowered over 850,000 professionals through 600+ cloud certifications, winning global recognition for its training excellence, including 20 MCT Trainers in Microsoft’s Global Top 100 and 12 awards in the last 8 years. CloudThat specializes in Cloud Migration, Data Platforms, DevOps, IoT, and cutting-edge technologies like Gen AI & AI/ML. It has delivered over 500 consulting projects for 250+ organizations in 30+ countries as it continues to empower professionals and enterprises to thrive in the digital-first world.

WRITTEN BY Nehal Verma

Nehal is a seasoned Cloud Technology Expert and Subject Matter Expert at CloudThat, specializing in AWS with a proven track record across Generative AI, Machine Learning, Data Analytics, DevOps, Developer Tools, Databases and Solutions Architecture. With over 12 years of industry experience, she has established herself as a trusted advisor and trainer in the cloud ecosystem. As a Champion AWS Authorized Instructor (AAI) and Microsoft Certified Trainer (MCT), Nehal has empowered more than 15,000 professionals worldwide to adopt and excel in cloud technologies. She holds premium certifications across AWS, Azure, and Databricks, showcasing her breadth and depth of technical expertise. Her ability to simplify complex cloud concepts into practical, hands-on learning experiences has consistently earned her praise from learners and organizations alike. Nehal’s engaging training style bridges the gap between theory and real-world application, enabling professionals to gain skills they can immediately apply. Beyond training, Nehal actively contributes to CloudThat’s consulting practice, designing, implementing and optimizing cutting-edge cloud solutions for enterprise clients. She also leads experiential learning initiatives and capstone programs, ensuring clients achieve measurable business outcomes through project-based, real-world engagements. Driven by her passion for cloud education and innovation, Nehal continues to champion technical excellence and empower the next generation of cloud professionals across the globe.


December 16, 2025
