Overview

Large-scale data migrations, moving terabytes or petabytes of files between on-premises systems and the cloud, are complex, time-sensitive, and risk-prone. AWS DataSync addresses these challenges with a purpose-built, parallel, multi-threaded architecture and automates scheduling, monitoring, encryption, and data integrity checks. This guide presents a structured workflow for executing efficient, secure, and cost-optimized large data migrations using AWS DataSync.

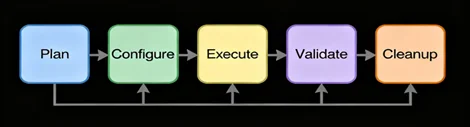

A Structured Five-Step Migration Workflow

- Planning Your Data Migration

A thorough planning phase lays the foundation for success. Key activities include:

- Define Migration Scope & Objectives

Establish measurable success criteria, such as “Transfer 200 TB within three weeks with zero data loss.” Clear objectives help prioritize data sets and allocate resources effectively.
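A quick calculation turns an objective like this into a sustained throughput target. The helper below is a minimal sketch (the function name is hypothetical) that assumes decimal units (1 TB = 10^12 bytes) and round-the-clock transfer:

```python
def required_throughput(terabytes: float, days: float) -> dict:
    """Average rate needed to move `terabytes` (decimal TB) in `days`."""
    total_bytes = terabytes * 10**12
    seconds = days * 24 * 60 * 60
    bytes_per_second = total_bytes / seconds
    return {
        "MB_per_s": bytes_per_second / 10**6,  # megabytes per second
        "Gbps": bytes_per_second * 8 / 10**9,  # gigabits per second
    }

# The example objective: 200 TB within three weeks.
rate = required_throughput(200, 21)
print(f"{rate['MB_per_s']:.1f} MB/s ({rate['Gbps']:.2f} Gbps)")
```

For the example objective this works out to roughly 110 MB/s (about 0.88 Gbps) sustained 24 hours a day, before any allowance for retries, verification, or throttling during business hours.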

- Inventory Data Sources

Catalog all data locations, file formats, directory structures, and total volume. Understanding data distribution, such as the difference between large multimedia files and millions of small logs, informs performance tuning decisions.
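To see how volume is distributed across file sizes, a short script can walk a mounted share and bucket files by size. This is a minimal sketch; the function name and bucket thresholds are illustrative assumptions, and on very large shares you may prefer to run it per top-level directory:

```python
import os
from collections import Counter

# Illustrative size buckets as (upper bound in bytes, label); adjust to your data.
BUCKETS = [
    (1024**2, "< 1 MiB"),
    (100 * 1024**2, "1-100 MiB"),
    (float("inf"), ">= 100 MiB"),
]

def inventory(root: str) -> dict:
    """Walk `root` and total the file count and bytes in each size bucket."""
    counts, sizes = Counter(), Counter()
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            try:
                size = os.lstat(os.path.join(dirpath, name)).st_size
            except OSError:
                continue  # file vanished or is unreadable; skip it
            label = next(lbl for limit, lbl in BUCKETS if size < limit)
            counts[label] += 1
            sizes[label] += size
    return {
        "files": sum(counts.values()),
        "bytes": sum(sizes.values()),
        "by_bucket": {lbl: (counts[lbl], sizes[lbl]) for _, lbl in BUCKETS},
    }

# Example (hypothetical mount point): print(inventory("/mnt/projects"))
```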

- Assess Network & Infrastructure

Evaluate available bandwidth, latency, and on-premises hardware capacity. Determine whether existing network links can support peak transfer rates without impacting production services.
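To check whether a link can meet the target, estimate the capacity left after production traffic at peak and project the migration duration. A minimal sketch, in which the link speed, peak usage, and the 0.8 `efficiency` factor (an assumed allowance for protocol overhead and retries) are all placeholders to replace with measured values:

```python
def projected_days(terabytes: float, link_gbps: float, peak_prod_gbps: float,
                   efficiency: float = 0.8) -> float:
    """Days to move `terabytes` using only the headroom left by production traffic."""
    usable_gbps = (link_gbps - peak_prod_gbps) * efficiency  # assumed overhead factor
    if usable_gbps <= 0:
        raise ValueError("no headroom left on the link for migration traffic")
    bytes_per_second = usable_gbps * 10**9 / 8
    return terabytes * 10**12 / bytes_per_second / 86_400

# Hypothetical link: 2 Gbps total, 0.6 Gbps used by production at peak.
days = projected_days(200, link_gbps=2.0, peak_prod_gbps=0.6)
print(f"{days:.1f} days")
```

With these hypothetical numbers, 200 TB takes about 16.5 days, which fits a three-week window with a few days of margin.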

- Proof-of-Concept (POC)

Run a POC using AWS DataSync on a representative subset of data. Benchmark transfer speeds, refine task configurations, and validate security controls. Document results in a migration runbook to guide full-scale execution.
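When extrapolating POC results, scale by both data volume and file count, since small-file-heavy datasets are often limited by per-file overhead rather than bandwidth. The sketch below is a conservative heuristic, not a DataSync feature; its inputs could come from the POC task execution's transferred bytes, file count, and duration (for example, as reported by the DescribeTaskExecution API), and the function name is hypothetical:

```python
def extrapolate_days(poc_bytes: int, poc_files: int, poc_seconds: float,
                     total_bytes: int, total_files: int) -> float:
    """Project full-migration duration in days from one POC run.

    Scales the POC duration by data volume and by file count separately and
    returns the larger estimate, a conservative heuristic for datasets where
    per-file overhead, not bandwidth, may be the bottleneck.
    """
    by_bytes = poc_seconds * total_bytes / poc_bytes
    by_files = poc_seconds * total_files / poc_files
    return max(by_bytes, by_files) / 86_400

# Hypothetical POC: 2 TB and 1 million files in 6 hours.
days = extrapolate_days(2 * 10**12, 1_000_000, 6 * 3600,
                        total_bytes=200 * 10**12, total_files=300_000_000)
print(f"{days:.0f} days")
```

In this hypothetical example, byte-based scaling alone suggests 25 days for 200 TB, but 300 million files push the estimate to 75 days, a sign that file count rather than bandwidth would drive the schedule.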

- Configuring AWS DataSync

Proper configuration maximizes throughput and security for large migrations.

- Deploy Source & Destination Locations

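- On-Premises Sources: Deploy the AWS DataSync agent as a virtual machine on a supported hypervisor (VMware ESXi, Linux KVM, or Microsoft Hyper-V) in your data center, then give it network access to the NFS or SMB shares (or other storage systems) you are migrating.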

- AWS Destinations: Define Amazon S3 buckets, Amazon EFS file systems, or Amazon FSx file systems (for example, FSx for Windows File Server) as targets.
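Locations can also be created programmatically. The sketch below only assembles keyword arguments for the boto3 DataSync client's `create_location_nfs` and `create_location_s3` calls, so it runs without AWS access; the hostnames, bucket, agent ARN, and IAM role are placeholders, and the helper names are hypothetical:

```python
def nfs_location_args(hostname: str, export_path: str, agent_arns: list) -> dict:
    """Keyword arguments for create_location_nfs (an on-premises NFS source)."""
    return {
        "ServerHostname": hostname,
        "Subdirectory": export_path,              # NFS export to read from
        "OnPremConfig": {"AgentArns": agent_arns},
    }

def s3_location_args(bucket: str, prefix: str, access_role_arn: str,
                     storage_class: str = "STANDARD") -> dict:
    """Keyword arguments for create_location_s3 (an S3 destination)."""
    return {
        "S3BucketArn": f"arn:aws:s3:::{bucket}",
        "Subdirectory": prefix,
        "S3StorageClass": storage_class,
        "S3Config": {"BucketAccessRoleArn": access_role_arn},  # role DataSync assumes
    }

src = nfs_location_args("nas01.example.internal", "/exports/projects",
                        ["arn:aws:datasync:us-east-1:111122223333:agent/agent-EXAMPLE"])
dst = s3_location_args("example-migration-bucket", "/projects",
                       "arn:aws:iam::111122223333:role/ExampleDataSyncS3Role")

# With credentials configured (not run here):
#   import boto3
#   ds = boto3.client("datasync")
#   src_arn = ds.create_location_nfs(**src)["LocationArn"]
#   dst_arn = ds.create_location_s3(**dst)["LocationArn"]
```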

- Configure Task Settings

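- Compression & Encryption: DataSync handles in-transit optimization and encryption itself; there is no compression switch in the task options, and all data in transit is encrypted with TLS. For encryption at rest, configure the destination, for example default encryption with an AWS KMS key on the target S3 bucket.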

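- Bandwidth Limits: DataSync manages its own parallelism, so there is no thread count to set. Instead, set a bandwidth limit on the task (it can also be adjusted while an execution is running) so transfers use the link fully without saturating it. Balance transfer speed against other critical traffic.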

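- Verification & Transfer Mode: DataSync always checks data integrity during the transfer. In addition, choose a verification mode: verify only the files transferred, verify the entire source and destination at the end of the task, or skip final verification. The transfer mode separately controls whether DataSync copies only data that differs between source and destination (compared using metadata such as size and modification time) or copies everything.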
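Bandwidth limits and verification choices map onto the `Options` structure of the DataSync `CreateTask` and `UpdateTask` APIs. A minimal sketch, assuming a hypothetical 2 Gbps link capped at 70% for DataSync; the helper name is illustrative:

```python
from typing import Optional

def task_options(link_gbps: Optional[float] = None, share: float = 1.0) -> dict:
    """Build a DataSync task Options dict; the bandwidth cap is derived from the link."""
    if link_gbps is None:
        bytes_per_second = -1  # -1 tells DataSync to use all available bandwidth
    else:
        bytes_per_second = int(link_gbps * share * 10**9 / 8)
    return {
        "VerifyMode": "ONLY_FILES_TRANSFERRED",  # alternatives: POINT_IN_TIME_CONSISTENT, NONE
        "TransferMode": "CHANGED",               # copy only data that differs; ALL copies everything
        "BytesPerSecond": bytes_per_second,
    }

# Cap DataSync at 70% of a hypothetical 2 Gbps link.
opts = task_options(link_gbps=2.0, share=0.7)

# With boto3 (not run here):
#   boto3.client("datasync").create_task(SourceLocationArn=src_arn,
#       DestinationLocationArn=dst_arn, Options=opts)
```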

- Optimize Task Performance

- Enhanced Mode: For supported location types, create tasks in Enhanced mode to parallelize data listing, preparation, transfer, and verification. Enhanced mode can boost throughput by 2–5× compared to Basic mode.

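- Agent Sizing & Task Partitioning: DataSync manages buffers and threads internally, so there are no buffer sizes or thread counts to tune. Instead, give each agent VM at least the vCPUs and memory listed in the AWS agent requirements (more memory for tasks with tens of millions of files), split very large datasets across multiple tasks with include filters, and add agents to increase parallelism. Monitor performance metrics to fine-tune.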
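For very large datasets, one way to add parallelism is to split the data across several tasks, balancing top-level directories by size and expressing each group as a DataSync include filter (`SIMPLE_PATTERN` filters separate multiple patterns with `|`). This is a minimal sketch using greedy largest-first bin packing; the directory names and sizes are hypothetical and would come from your inventory. Each group's `Includes` list could be passed to `create_task`, which in recent SDK versions also accepts `TaskMode="ENHANCED"` for supported locations (check your SDK version):

```python
import heapq

def partition_dirs(dir_sizes: dict, n_tasks: int) -> list:
    """Greedily assign directories (largest first) to the least-loaded group.

    Returns one entry per non-empty group with its total size and a DataSync
    include filter covering the group's directories.
    """
    groups = [(0, i, []) for i in range(n_tasks)]  # (total size, index, dirs)
    heapq.heapify(groups)
    for name, size in sorted(dir_sizes.items(), key=lambda kv: kv[1], reverse=True):
        total, idx, dirs = heapq.heappop(groups)  # least-loaded group so far
        dirs.append(name)
        heapq.heappush(groups, (total + size, idx, dirs))
    return [
        {"size": total,
         "Includes": [{"FilterType": "SIMPLE_PATTERN", "Value": "|".join(dirs)}]}
        for total, _idx, dirs in sorted(groups, key=lambda g: g[1])
        if dirs
    ]

# Hypothetical top-level directory sizes in TB from an inventory run.
plan = partition_dirs({"/media": 120, "/logs": 40, "/home": 35, "/archive": 30}, 2)
for task in plan:
    print(task["size"], task["Includes"][0]["Value"])
```

Greedy largest-first packing is not optimal in every case, but it is simple and usually keeps task sizes close enough for scheduling purposes.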

- Executing and Monitoring Transfers

With configurations in place, launch and closely monitor migrations in real time.

- Task Execution:

Use DataSync task schedules to run transfers during off-peak hours, or start on-demand runs for urgent transfers. You can start tasks from the console, or programmatically with the AWS CLI or SDKs.
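Starting a task returns a task execution ARN that can be polled until the run finishes. The sketch below injects the describe function so it can be tested without AWS; in practice you would pass the boto3 DataSync client's `describe_task_execution` method. The helper name and 60-second poll interval are assumptions:

```python
import time

# DataSync task execution statuses that end a run.
TERMINAL = {"SUCCESS", "ERROR"}

def wait_for_execution(describe, execution_arn: str, poll_seconds: float = 60,
                       sleep=time.sleep) -> str:
    """Poll until the execution reaches a terminal status and return it.

    `describe` should behave like DataSync's describe_task_execution; it is
    injected so the loop can be exercised without AWS credentials.
    """
    while True:
        status = describe(TaskExecutionArn=execution_arn)["Status"]
        if status in TERMINAL:
            return status
        sleep(poll_seconds)

# With boto3 (not run here):
#   ds = boto3.client("datasync")
#   arn = ds.start_task_execution(TaskArn=task_arn)["TaskExecutionArn"]
#   print(wait_for_execution(ds.describe_task_execution, arn))
```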

- CloudWatch Metrics:

Track metrics such as bytes transferred per second, files processed, error counts, and task duration. Create dashboards that surface throughput trends and error spikes.
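Because DataSync reports `BytesTransferred` to CloudWatch as a byte count rather than a rate, a throughput trend is each period's `Sum` divided by the period length. A minimal sketch, assuming datapoints have already been fetched (for example with `GetMetricData` at a 300-second period) as `(timestamp, bytes)` pairs; the helper names and the 50 MB/s floor are hypothetical:

```python
def throughput_series(datapoints, period_seconds: int = 300):
    """Convert (timestamp, bytes-per-period Sum) pairs into (timestamp, MB/s), sorted by time."""
    return [(ts, total / period_seconds / 10**6) for ts, total in sorted(datapoints)]

def throughput_drops(series, floor_mb_s: float):
    """Timestamps where throughput fell below `floor_mb_s`."""
    return [ts for ts, mb_s in series if mb_s < floor_mb_s]

# Hypothetical 5-minute Sums; timestamps shown as seconds for brevity.
series = throughput_series([(0, 33_000_000_000), (300, 31_500_000_000), (600, 6_000_000_000)])
print(throughput_drops(series, floor_mb_s=50))
```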

- Alarms & Notifications:

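Set Amazon CloudWatch alarms on critical conditions such as task failures, high error rates, or throughput drops, and route them to Amazon SNS topics for immediate alerts. For automated responses, Amazon EventBridge rules on DataSync task execution state changes can invoke AWS Lambda functions.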
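A throughput-drop alarm can be built on the `BytesTransferred` metric. The sketch assembles arguments for CloudWatch's `put_metric_alarm`; the alarm name, SNS topic, and floor are placeholders, and the `TaskId` dimension name is an assumption to confirm against the metrics your tasks actually publish:

```python
def throughput_alarm_args(task_id: str, sns_topic_arn: str, floor_mb_s: float,
                          period_seconds: int = 300, periods: int = 3) -> dict:
    """Arguments for put_metric_alarm: alert when a task's bytes per period stay
    below the floor for `periods` consecutive periods."""
    return {
        "AlarmName": f"datasync-{task_id}-low-throughput",
        "Namespace": "AWS/DataSync",
        "MetricName": "BytesTransferred",
        "Dimensions": [{"Name": "TaskId", "Value": task_id}],  # assumed dimension name
        "Statistic": "Sum",
        "Period": period_seconds,
        "EvaluationPeriods": periods,
        "Threshold": floor_mb_s * 10**6 * period_seconds,  # floor expressed as bytes per period
        "ComparisonOperator": "LessThanThreshold",
        # Missing data (e.g. between scheduled runs) does not trigger the alarm,
        # so pair it with failure notifications to catch a stalled task.
        "TreatMissingData": "notBreaching",
        "AlarmActions": [sns_topic_arn],
    }

args = throughput_alarm_args("task-EXAMPLE",
                             "arn:aws:sns:us-east-1:111122223333:migration-alerts", 50)
# boto3.client("cloudwatch").put_metric_alarm(**args)  # not run here
```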

- Incremental Syncs:

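Because DataSync's default transfer mode copies only new or changed data, scheduling repeat runs of the same task after the initial bulk copy turns it into an incremental sync. Frequent incremental syncs keep the final delta small, which can shrink the cutover window to minutes and minimize downtime.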
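The size of the final delta, and therefore the cutover window, depends on how much data changes between syncs. A rough sketch, assuming a steady daily change rate and a fixed allowance (`scan_hours`, a hypothetical input) for DataSync to list and compare the dataset, which takes time even when little has changed:

```python
def final_sync_hours(daily_change_gb: float, hours_since_last_sync: float,
                     throughput_mb_s: float, scan_hours: float = 0.0) -> float:
    """Rough duration of the final incremental sync in hours.

    Assumes data changes at a steady `daily_change_gb` per day and that
    `scan_hours` covers listing and comparing the dataset.
    """
    changed_bytes = daily_change_gb * 10**9 * hours_since_last_sync / 24
    transfer_seconds = changed_bytes / (throughput_mb_s * 10**6)
    return scan_hours + transfer_seconds / 3600

# Hypothetical: 500 GB/day of change, 110 MB/s, 30-minute scan.
print(round(final_sync_hours(500, 4, 110, scan_hours=0.5), 2))   # last sync 4 h before cutover
print(round(final_sync_hours(500, 24, 110, scan_hours=0.5), 2))  # last sync a day before
```

With these hypothetical numbers, syncing 4 hours before cutover leaves a final run of about 43 minutes, while a last sync a full day earlier leaves about 1.8 hours.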

- Validation and Cutover Strategy

A robust validation and cutover plan ensures data integrity and seamless switchover.

- Checksum Verification:

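Rely on AWS DataSync's built-in integrity checks and verification to confirm that every file arrived intact. Review verification results for each incremental run, and consider enabling task reports to keep an auditable record of what was transferred and verified.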
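DataSync's verification runs inside the service. For an additional, independent audit, you can hash a random sample of files on both sides. This sketch assumes both source and destination are readable as local paths (for example, an NFS mount and an Amazon EFS or FSx mount); objects in S3 would need to be downloaded first. Function names are hypothetical:

```python
import hashlib
import os
import random

def sha256_of(path: str, chunk: int = 1024 * 1024) -> str:
    """SHA-256 of a file, read in chunks to bound memory use."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for block in iter(lambda: f.read(chunk), b""):
            h.update(block)
    return h.hexdigest()

def spot_check(src_root: str, dst_root: str, sample_size: int = 100, seed: int = 0) -> list:
    """Hash a random sample of files under src_root, compare them with the files at
    the same relative paths under dst_root, and return mismatched or missing paths."""
    rel_paths = []
    for dirpath, _dirs, files in os.walk(src_root):
        for name in files:
            rel_paths.append(os.path.relpath(os.path.join(dirpath, name), src_root))
    rel_paths.sort()  # deterministic order before sampling
    sample = random.Random(seed).sample(rel_paths, min(sample_size, len(rel_paths)))
    bad = []
    for rel in sample:
        dst = os.path.join(dst_root, rel)
        if not os.path.isfile(dst) or sha256_of(os.path.join(src_root, rel)) != sha256_of(dst):
            bad.append(rel)
    return sorted(bad)
```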

- Staging Environment Testing:

Point applications to a staging bucket or file system in AWS. Execute functional and performance tests to verify application behavior before production cutover.

- Phased Cutover Windows:

Migrate non-critical datasets first to validate the process. For business-critical data, schedule cutover during planned maintenance windows. This phased approach mitigates risk and disruption.

- Final Sync & Switchover:

Stop writes to the source, then perform a final incremental sync to capture any remaining changes. Switch application reads and writes to the AWS destinations, monitor application performance, and decommission on-premises sources only after successful confirmation.

- Post-Migration Cleanup and Optimization

After migration, finalize cleanup tasks and implement cost-optimization measures.

- Resource Decommissioning:

Delete DataSync agents in AWS and shut down their on-premises VMs, release any reserved IP addresses and VPC endpoints created for agent traffic, and tear down test environments to avoid unnecessary costs.

- Storage Tiering:

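Apply Amazon S3 lifecycle policies to transition infrequently accessed objects to S3 Standard-IA or S3 Glacier storage classes, or use S3 Intelligent-Tiering for data whose access patterns are unknown or changing. For Amazon EFS, use lifecycle management to move cold files to the Infrequent Access storage class, and review FSx storage capacity and throughput settings against observed usage.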
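A lifecycle rule like this can be applied with the S3 `put_bucket_lifecycle_configuration` API. A minimal sketch; the prefix, bucket, and 30/90-day thresholds are assumptions to tune to your access patterns (S3 requires objects to be at least 30 days old before a lifecycle transition to `STANDARD_IA`):

```python
def lifecycle_config(prefix: str, ia_days: int = 30, glacier_days: int = 90) -> dict:
    """S3 lifecycle configuration that tiers objects under `prefix` to
    STANDARD_IA and then to GLACIER (S3 Glacier Flexible Retrieval)."""
    if ia_days < 30:
        raise ValueError("transitions to STANDARD_IA require objects at least 30 days old")
    if glacier_days <= ia_days:
        raise ValueError("glacier_days must be later than ia_days")
    return {
        "Rules": [{
            "ID": f"tier-{prefix.strip('/') or 'all'}",
            "Filter": {"Prefix": prefix},
            "Status": "Enabled",
            "Transitions": [
                {"Days": ia_days, "StorageClass": "STANDARD_IA"},
                {"Days": glacier_days, "StorageClass": "GLACIER"},
            ],
        }]
    }

cfg = lifecycle_config("projects/")
# boto3.client("s3").put_bucket_lifecycle_configuration(
#     Bucket="example-migration-bucket", LifecycleConfiguration=cfg)  # not run here
```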

- Performance Tuning:

Review transfer logs and Amazon CloudWatch metrics to adjust bandwidth limits, task filters, schedules, and agent sizing for future syncs or ongoing replication tasks.

- Cost Monitoring:

Use AWS Cost Explorer and Amazon S3 Storage Lens to track data transfer and storage costs. Identify anomalies and refine lifecycle policies and storage classes to maintain cost efficiency over time.
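As a simple illustration of anomaly spotting, the sketch below flags days whose cost exceeds the trailing week's mean by three standard deviations. The daily figures are assumed to come from Cost Explorer (for example, `get_cost_and_usage` with daily granularity); the window and threshold are arbitrary starting points, and AWS Cost Anomaly Detection offers a managed alternative:

```python
from statistics import mean, stdev

def cost_anomalies(daily_costs, window: int = 7, k: float = 3.0) -> list:
    """Indexes of days whose cost exceeds the trailing-window mean by k standard deviations."""
    flagged = []
    for i in range(window, len(daily_costs)):
        history = daily_costs[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # Small floor on sigma so a perfectly flat history still flags any increase.
        if daily_costs[i] > mu + k * max(sigma, 1e-9):
            flagged.append(i)
    return flagged

# Hypothetical daily costs in USD.
print(cost_anomalies([10, 11, 10, 12, 11, 10, 11, 11, 30, 11]))
```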

Conclusion

AWS DataSync’s automated, parallel architecture and comprehensive monitoring capabilities make it an ideal solution for large-scale data migrations. By following this five-step workflow (Plan, Configure, Execute, Validate, and Clean Up), organizations can achieve faster transfers, maintain data integrity, minimize downtime, and optimize costs. Applying these best practices empowers enterprises to tackle even the most complex migration projects with confidence and agility.

Drop a query if you have any questions regarding AWS DataSync and we will get back to you quickly.

About CloudThat

CloudThat is an award-winning company and the first in India to offer cloud training and consulting services worldwide. As a Microsoft Solutions Partner, AWS Advanced Tier Training Partner, and Google Cloud Platform Partner, CloudThat has empowered over 850,000 professionals through 600+ cloud certifications, winning global recognition for its training excellence, including 20 MCT Trainers in Microsoft’s Global Top 100 and an impressive 12 awards in the last 8 years. CloudThat specializes in Cloud Migration, Data Platforms, DevOps, IoT, and cutting-edge technologies like Gen AI & AI/ML. It has delivered over 500 consulting projects for 250+ organizations in 30+ countries as it continues to empower professionals and enterprises to thrive in the digital-first world.

FAQs

1. Can AWS DataSync handle millions of small files efficiently?

ANS: – Yes. For supported location types, Enhanced mode batches file listings and transfers them in parallel, optimizing throughput for both small and large files.

2. How do I minimize application downtime during cutover?

ANS: – Use phased cutover windows with incremental syncs that transfer only changed data after the bulk copy, which can reduce the final switchover to minutes.

3. What security features does AWS DataSync offer?

ANS: – AWS DataSync encrypts all data in transit with TLS, works with destinations encrypted at rest using AWS KMS keys, and validates end-to-end data integrity with checksums.

WRITTEN BY Anusha

Anusha works as a Subject Matter Expert at CloudThat. She handles AWS-based data engineering tasks such as building data pipelines, automating workflows, and creating dashboards. She focuses on developing efficient and reliable cloud solutions.

October 30, 2025
