
AWS Batch is a useful service for heavy batch-computing workloads. It removes the complexity of setting up and maintaining a complete infrastructure, reduces the cost of the environment, and supports several types of automation for running batch jobs.

AWS Batch is a fully managed AWS service for running batch computing workloads on the AWS Cloud. It helps users make more effective and efficient use of AWS resources, which makes the AWS Cloud more convenient for them. Once a job is submitted, the service provisions the underlying compute resources for it. This helps eliminate capacity constraints, reduce compute costs, and deliver results quickly.

It is used to run high-volume, repetitive data jobs. Users can process data as soon as the required computing resources are available, with minimal user interaction.

AWS Batch makes the required resources available only when a user needs to process data, that is, when a computing job has to run. Once the job completes, it automatically releases the resources, which saves the user money.
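In practice, this on-demand behavior comes from a managed compute environment whose minimum capacity is zero. The sketch below assumes the boto3 SDK and an EC2-based managed compute environment; it only builds the keyword arguments for the Batch `create_compute_environment` call, with `minvCpus` set to 0 so AWS Batch can scale the environment down to no instances when no jobs are waiting. The environment name, subnet and security group IDs, and instance role are hypothetical placeholders.

```python
from typing import Any, Dict, List


def build_compute_environment_request(
    name: str,
    subnets: List[str],
    security_group_ids: List[str],
    instance_role: str,
    max_vcpus: int = 64,
) -> Dict[str, Any]:
    """Return keyword arguments for boto3's Batch create_compute_environment call."""
    if max_vcpus <= 0:
        raise ValueError("max_vcpus must be positive")
    return {
        "computeEnvironmentName": name,
        "type": "MANAGED",  # AWS Batch launches and terminates the instances itself
        "state": "ENABLED",
        "computeResources": {
            "type": "EC2",
            "minvCpus": 0,  # lets Batch scale down to zero instances when no jobs are waiting
            "maxvCpus": max_vcpus,  # upper bound on capacity, and therefore on cost
            "instanceTypes": ["optimal"],  # let Batch choose instance types that fit the jobs
            "subnets": subnets,
            "securityGroupIds": security_group_ids,
            "instanceRole": instance_role,  # ECS instance profile for the EC2 hosts
        },
    }


# Hypothetical placeholder values; replace them with resources from your own account.
request = build_compute_environment_request(
    name="demo-batch-ce",
    subnets=["subnet-0123456789abcdef0"],
    security_group_ids=["sg-0123456789abcdef0"],
    instance_role="ecsInstanceRole",
)
```

With boto3 installed and AWS credentials configured, `boto3.client("batch").create_compute_environment(**request)` would create the environment. Keeping the request builder separate from the API call makes the configuration easy to review before anything is provisioned.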

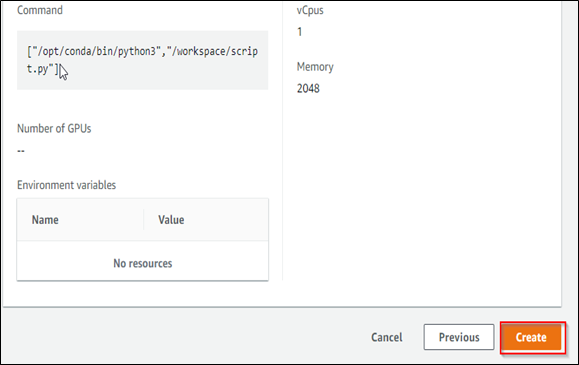

To run a batch process, we need to create and run a job. A job can be created and run directly from the AWS Batch console, but in most use cases users want to automate this process so that they don't have to create and run every batch job manually.
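Outside the console, a job is submitted with the Batch `SubmitJob` API. The following is a minimal sketch assuming the boto3 SDK: `build_submit_job_request` assembles the arguments (optionally overriding the container command and environment variables), and `submit_batch_job` passes them to an existing Batch client. The job, queue, and definition names used with it are hypothetical.

```python
from typing import Any, Dict, List, Optional


def build_submit_job_request(
    job_name: str,
    job_queue: str,
    job_definition: str,
    command: Optional[List[str]] = None,
    environment: Optional[Dict[str, str]] = None,
) -> Dict[str, Any]:
    """Return keyword arguments for boto3's Batch submit_job call."""
    request: Dict[str, Any] = {
        "jobName": job_name,
        "jobQueue": job_queue,  # queue name or ARN
        "jobDefinition": job_definition,  # "name", "name:revision", or ARN
    }
    overrides: Dict[str, Any] = {}
    if command:
        overrides["command"] = command  # replaces the command from the job definition
    if environment:
        # Batch expects environment variables as a list of name/value pairs.
        overrides["environment"] = [
            {"name": key, "value": value} for key, value in environment.items()
        ]
    if overrides:
        request["containerOverrides"] = overrides
    return request


def submit_batch_job(batch_client: Any, **kwargs: Any) -> str:
    """Submit one job through an existing Batch client and return the new job ID."""
    response = batch_client.submit_job(**build_submit_job_request(**kwargs))
    return response["jobId"]
```

For example, `submit_batch_job(boto3.client("batch"), job_name="nightly-report", job_queue="my-job-queue", job_definition="my-job-definition", command=["python", "report.py"])` would submit one job, assuming those hypothetical resources exist in your account.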

Two effective ways to automate this process are Amazon CloudWatch Events (now part of Amazon EventBridge) and AWS Lambda. Either one can be triggered to create and run a job on AWS Batch.
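Both automation paths can be sketched with boto3 under a few assumptions. For CloudWatch Events, a rule can target the job queue directly: `build_batch_rule_target` builds one entry for the EventBridge `put_targets` call, where the queue ARN, IAM role (which must allow `batch:SubmitJob`), job definition, and job name are hypothetical. For Lambda, `lambda_handler` submits one job per invocation; the `JOB_QUEUE` and `JOB_DEFINITION` environment variable names are assumptions of this sketch, not something AWS Batch defines.

```python
import os
import re
from typing import Any, Dict, Optional


def build_batch_rule_target(
    job_queue_arn: str, role_arn: str, job_definition: str, job_name: str
) -> Dict[str, Any]:
    """One target entry for events.put_targets that submits a Batch job when the rule fires."""
    return {
        "Id": "batch-job-target",
        "Arn": job_queue_arn,  # the target is the job queue itself
        "RoleArn": role_arn,  # role assumed by CloudWatch Events to call SubmitJob
        "BatchParameters": {"JobDefinition": job_definition, "JobName": job_name},
    }


def job_name_for_event(event: Dict[str, Any], prefix: str = "scheduled-job") -> str:
    """Derive a job name from the event time, keeping only letters, digits, '-' and '_'."""
    raw = f"{prefix}-{event.get('time', 'manual')}"
    return re.sub(r"[^A-Za-z0-9_-]", "-", raw)[:128]


_batch_client: Optional[Any] = None


def _default_batch_client() -> Any:
    """Create the boto3 client lazily; boto3 is included in the Lambda Python runtime."""
    global _batch_client
    if _batch_client is None:
        import boto3

        _batch_client = boto3.client("batch")
    return _batch_client


def lambda_handler(
    event: Dict[str, Any], context: Any, batch_client: Optional[Any] = None
) -> Dict[str, str]:
    """Lambda entry point: submit one AWS Batch job per invocation."""
    client = batch_client if batch_client is not None else _default_batch_client()
    response = client.submit_job(
        jobName=job_name_for_event(event),
        jobQueue=os.environ["JOB_QUEUE"],
        jobDefinition=os.environ["JOB_DEFINITION"],
    )
    return {"jobId": response["jobId"], "jobName": response["jobName"]}
```

A scheduled rule created with `events.put_rule(Name=..., ScheduleExpression="rate(1 day)")` can then point at either the job queue (via `events.put_targets(Rule=..., Targets=[...])`) or the Lambda function; a Lambda target additionally needs a resource-based permission that allows `events.amazonaws.com` to invoke it. Targeting the queue directly avoids extra code, while Lambda leaves room for custom logic before submission.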

AWS Batch multi-node parallel jobs let users run a single job across multiple Amazon EC2 instances. Nodes in a multi-node parallel job are single-tenant, which means that only one job container runs on each Amazon EC2 instance.
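A multi-node parallel job is described by a job definition of type `multinode`. The sketch below, assuming boto3, builds arguments for `register_job_definition` in which every node from index 0 to `num_nodes - 1` runs the same container and node 0 is the main node. The definition name, image, command, and resource sizes are hypothetical.

```python
from typing import Any, Dict, List


def build_multinode_job_definition(
    name: str,
    image: str,
    command: List[str],
    num_nodes: int,
    vcpus: int = 4,
    memory_mib: int = 8192,
) -> Dict[str, Any]:
    """Return keyword arguments for boto3's Batch register_job_definition call."""
    if num_nodes < 1:
        raise ValueError("num_nodes must be at least 1")
    container = {
        "image": image,
        "command": command,
        "resourceRequirements": [
            {"type": "VCPU", "value": str(vcpus)},
            {"type": "MEMORY", "value": str(memory_mib)},  # in MiB
        ],
    }
    return {
        "jobDefinitionName": name,
        "type": "multinode",
        "nodeProperties": {
            "numNodes": num_nodes,
            "mainNode": 0,  # index of the main node; must be less than numNodes
            # One range covering every node; each node runs on its own EC2 instance.
            "nodeRangeProperties": [
                {"targetNodes": f"0:{num_nodes - 1}", "container": container}
            ],
        },
    }


definition = build_multinode_job_definition(
    name="demo-mnp-job",
    image="public.ecr.aws/docker/library/python:3.12",
    command=["python", "-c", "import os; print(os.environ['AWS_BATCH_JOB_NODE_INDEX'])"],
    num_nodes=4,
)
```

`boto3.client("batch").register_job_definition(**definition)` would register it, after which jobs are submitted against the definition as usual. Inside each container, the `AWS_BATCH_JOB_NODE_INDEX` environment variable tells the process which node it is running on.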

CloudThat provides end-to-end support for all AWS services. As a pioneer in the cloud computing consulting realm, we are an AWS (Amazon Web Services) Advanced Consulting Partner and Training Partner. We are on a mission to build a robust cloud computing ecosystem by sharing knowledge of the technological intricacies of the cloud space.


Nishant Ranjan is a Sr. Research Associate (Migration, Infra, and Security) at CloudThat. He holds a Bachelor of Engineering degree in Computer Science and has completed various multi-cloud certifications across AWS, Azure, and GCP. His areas of interest include cloud architecture and security, application security, application migration, CI/CD, and disaster recovery. Outside work, he enjoys learning the latest technologies and tools, reading books, and traveling.

