|

Voiced by Amazon Polly |

In today’s cloud-first world, data lives everywhere: in data lakes, warehouses, streaming sources, SaaS platforms and more. The ability to connect, clean and transform that data efficiently is critical. That’s where Power Query comes in.

Power Query offers a powerful, visual, code-light interface for extracting, transforming and loading (ETL) data. And when paired with cloud services from Azure and AWS, it becomes a key tool in modern data architecture.

Start Learning In-Demand Tech Skills with Expert-Led Training

- Industry-Authorized Curriculum

- Expert-led Training

What is Power Query?

Power Query is Microsoft’s data-preparation engine. It is embedded in Excel, Power BI, Dataflows and other tools. It uses a functional language called “M” under the hood.

The typical workflow:

- Connect to a data source

- Transform/clean data (filter, split, rename, etc)

- Load the result into your target (Excel table, Power BI model, etc)

Because each step in Power Query is logged (in the “Applied Steps” pane), it’s easy to maintain and refresh automatically.

Power Query + Azure

Azure offers a rich ecosystem that works very well with Power Query.

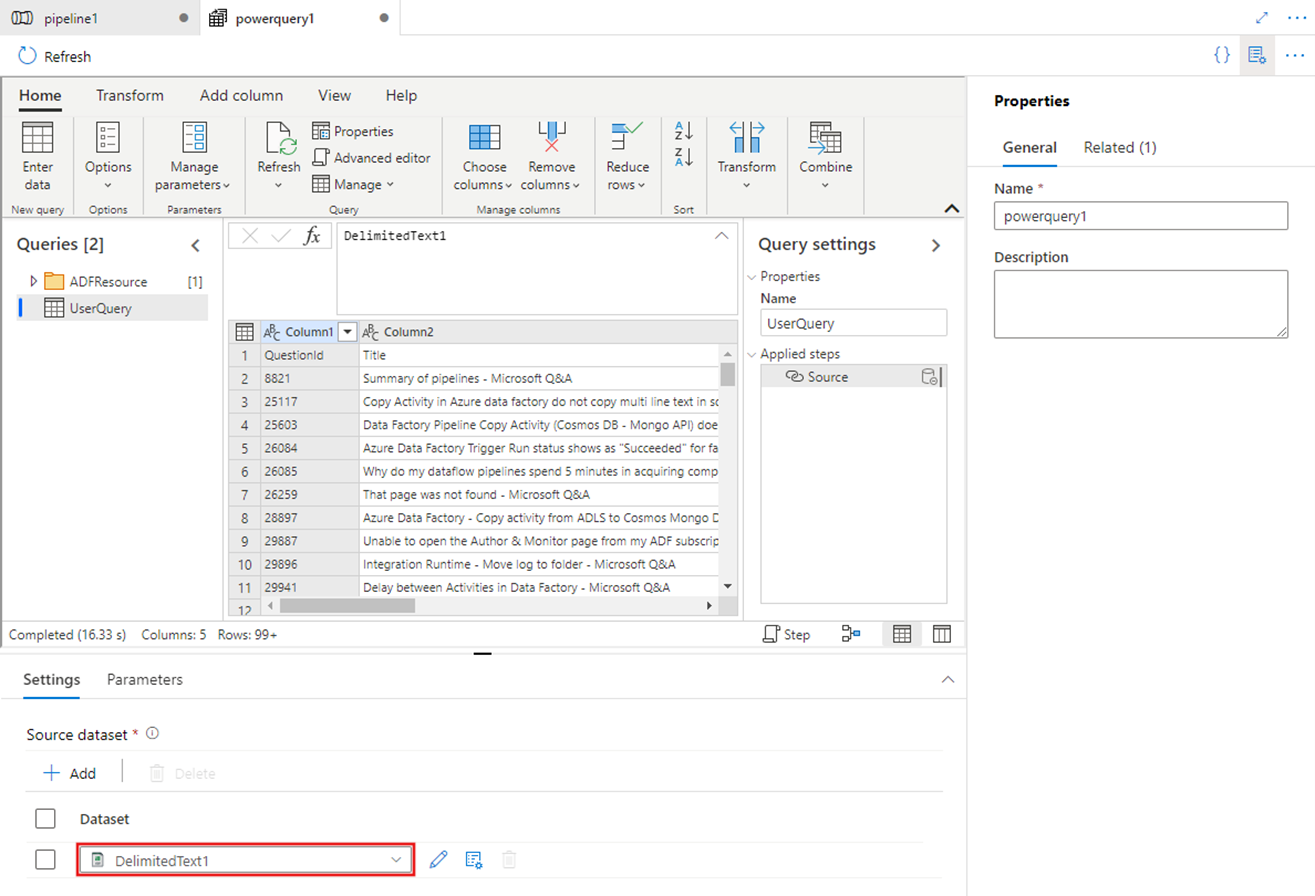

Power Query in ADF enables scalable Azure-based data transformation.

Here are some key integration points and possibilities:

Key Azure Connectors & Capabilities

- You can connect to Azure SQL Database using the built-in connector.

- You can connect to Azure Data Explorer (Kusto) via Power Query.

- In the context of data pipelines, the Azure Data Factory (ADF) “Power Query activity” allows Power Query scripts to run at scale in Azure’s Spark integration runtime.

- The connector between Azure Synapse Analytics and Power Query (via Power BI) was announced to simplify access to Synapse data from PQ.

Why Use Azure + Power Query

- Scale: With ADF or Synapse, your Power Query logic can scale out across a Spark environment.

- Managed infrastructure: Azure handles compute, storage and scaling so you can focus on logic rather than plumbing.

- End-to-end architecture: From ingestion (Data Factory) → storage (Data Lake, Synapse) → transform (Power Query) → visualization/reporting (Power BI) – all within Azure.

- Governance and compliance: Azure will include enterprise-grade security, access controls, etc, making it viable for enterprise workloads.

Example Scenario

Suppose you have log data in Azure Data Explorer (Kusto) that you want to include in a monthly operational dashboard. You could:

- Use Power Query to connect via the Azure Data Explorer connector.

- In Power BI or Dataflows, author transformations (filter, aggregate) in PQ.

- Refresh automatically so every month you get updated data.

- Possibly move the dataset into Synapse for large-scale analysis, using the Synapse connector.

Tips & Considerations

- When using connectors like Azure SQL Database or Data Explorer, pay attention to Import vs DirectQuery mode – large data sets might require DirectQuery or other architectural tweaks.

- In a pipeline scenario (e.g., with ADF), the Power Query activity runs on Spark, so you’ll need to think about compute size, staging storage and cost.

- Ensure data source formats are consistent and transformations are documented (naming of queries, steps).

- Consider security (service principals, managed identity) when connecting to Azure data sources – especially in enterprise environments.

Power Query + AWS

Although Power Query is a Microsoft technology, it also supports integration with AWS services. This enables hybrid or multi-cloud use cases.

Key AWS Connectors & Capabilities

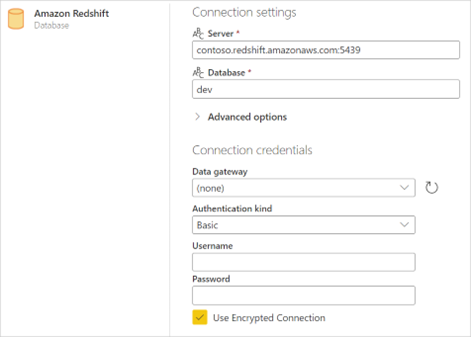

- Amazon Redshift: Power Query supports connecting to Redshift.

Power Query connects to AWS Redshift for hybrid data analysis.

- Amazon Athena: You can connect via the Athena connector (import or DirectQuery) to query data stored in S3.

- Amazon S3: While not a native Power Query connector in all cases, third-party ODBC drivers (e.g., from CData) allow you to bring S3 data into Power Query.

Why Use AWS + Power Query

- Flexibility in cloud-choice: If your data lives in AWS (e.g., S3, Redshift), you can still leverage Power Query for transformation rather than rebuilding everything in Azure.

- Cost optimisation: Keep data storage/processing in AWS where it resides; use Power Query for transformation and then bring results into your Microsoft BI stack.

- Hybrid scenarios: Organisations often have multi-cloud architectures; PQ allows for consistent transformation logic, whether the data is in Azure or AWS.

Example Scenario

You store raw event data in AWS S3 and use Athena for querying. You want to build a dashboard in Power BI that draws from this. You could:

- Use the Power Query Athena connector to connect to the data lake.

- In PQ, filter/aggregate the data.

- Load into Power BI or publish as a dataflow.

Unified Architecture: Azure + AWS + Power Query

It’s entirely plausible (and increasingly common) to have a data estate spanning both Azure and AWS, and to standardise on Power Query as the transformation layer. Here’s how you might design such an architecture:

- Data ingestion

- AWS: Raw data lands in S3 → Athena/Redshift

- Azure: Raw data lands in Data Lake / SQL DB or Data Explorer

- Transformation via Power Query

- Use PQ to connect to AWS sources (Athena, Redshift)

- Use PQ to connect to Azure sources (SQL DB, Data Explorer, Synapse)

- Perform unified cleaning/shape logic in PQ so logic is portable

- Load/reporting

- Results loaded into a destination (could be Azure SQL, Synapse, or even AWS-hosted warehouse)

- Reports built in Power BI (which can connect to either Azure or AWS output)

- Governance/refresh

- Use scheduled refreshes, dataflows, usage monitoring

- Use enterprise identity, RBAC, network security across clouds

This multi-cloud and PQ approach provides agility: you’re not locked into a single cloud, and you’re utilising a consistent transformation layer.

Best Practices

Here are some practical best practices to make the most of Power Query in Azure & AWS environments:

- Naming & documentation: Name your queries meaningfully (e.g., Source_AWS_S3_Events, Transform_CleanedSales_AzureSQL) and document what each step does.

- Push transformations upstream when possible: If your source (e.g., Athena or Redshift) can filter or aggregate data before PQ loads it, it reduces load and improves performance.

- Use parameterization: Use Power Query parameters (e.g., for date ranges, buckets) so your logic is flexible.

- Monitor refresh and compute cost: Especially in Azure Data Factory with PQ activity, you’ll pay for compute time. Monitor usage.

- Secure your connections: Use managed identities (in Azure), IAM roles (in AWS), secure ODBC drivers and ensure data access is limited.

- Test with direct query vs import: For very large datasets, DirectQuery may be better than import to avoid memory bottlenecks.

- Version control/reuse logic: If you find yourself repeating transformations across sources, consider abstracting them into reusable templates or functions in Power Query (M language).

- Design for scale: For large-scale workloads, consider whether Power Query alone is enough or if you need to move some logic into the data source (e.g., Spark in Synapse, Warehouse in Redshift).

- Refresh strategy: For multi-cloud sources, verify how refresh orchestration will work (e.g., scheduled refresh in the Power BI service, pipeline triggers in ADF, etc.).

Challenges & Things to Watch Out For

- Performance on very large data sets: Power Query sometimes struggles when datasets are massive and transformations are complex. In such cases, off-load heavy computation to the source or use a proper data warehouse/engine.

- Cross-cloud latency or security issues: Connecting tools in Microsoft Stack to AWS sources may require gateways, drivers, secure networking.

- Data refresh and gateway dependency: When using cloud sources outside Azure (e.g., AWS), Power BI service may require a gateway or additional configuration.

- Governance across clouds: Ensuring data lineage, security and compliance across both Azure and AWS can become more complex.

Getting Started: A Step-by-Step Example

Here’s a simplified “first steps” for someone who wants to start with Power Query in a multi-cloud environment:

- Pick your data source

- Let’s say you have event logs in AWS S3 + Athena.

- Ensure you have prerequisites

- Cognito/IAM user with permissions in AWS

- ODBC driver for Athena configured.

- In Power BI Desktop (or Excel)

- Home → Get Data → Search “Amazon Athena” (or appropriate connector)

- Select tables or run query, then click Transform Data to open Power Query Editor.

- Transform data in Power Query Editor

- Clean columns, filter date ranges, rename fields, change data types.

- Add parameters if needed.

- Load data

- Either load into model (Import) or use DirectQuery if dataset is huge.

- Repeat for Azure Source

- Home → Get Data → Azure → e.g., Azure SQL Database

- Transform/clean similarly.

- Combine or Append data if required

- Use Append Queries or Merge queries in Power Query to bring together AWS + Azure data.

- Publish & schedule refresh

- Publish to Power BI Service. Configure refresh schedule. If AWS source requires gateway, ensure it’s set up.

- Monitor & optimize

- Check refresh runtimes, look for heavy steps, optimize where needed.

Power Query in Azure and AWS

Suppose your data estate spans multiple clouds, such as Azure and AWS. In that case, using Power Query as your transformation layer provides a unified, manageable and often user-friendly way to shape and prepare data for reporting.

On Azure, you benefit from native integrations (Data Factory, Synapse, Data Explorer). On AWS, you can still connect via Redshift, Athena, S3 and use Power Query alongside your Microsoft BI tools. The key is to architect thoughtfully, considering scale, cost, performance, security and maintainability.

Upskill Your Teams with Enterprise-Ready Tech Training Programs

- Team-wide Customizable Programs

- Measurable Business Outcomes

About CloudThat

CloudThat is an award-winning company and the first in India to offer cloud training and consulting services worldwide. As a Microsoft Solutions Partner, AWS Advanced Tier Training Partner, and Google Cloud Platform Partner, CloudThat has empowered over 850,000 professionals through 600+ cloud certifications winning global recognition for its training excellence including 20 MCT Trainers in Microsoft’s Global Top 100 and an impressive 12 awards in the last 8 years. CloudThat specializes in Cloud Migration, Data Platforms, DevOps, IoT, and cutting-edge technologies like Gen AI & AI/ML. It has delivered over 500 consulting projects for 250+ organizations in 30+ countries as it continues to empower professionals and enterprises to thrive in the digital-first world.

WRITTEN BY Vivek Kumar

Vivek Kumar is a Senior Subject Matter Expert at CloudThat, specializing in Cloud and Data Platforms. With 11+ years of experience in IT industry, he has trained over 2000 professionals to upskill in various technologies including Cloud and Full Stack Development. Known for simplifying complex concepts and hands-on teaching, he brings deep technical knowledge and practical application into every learning experience. Vivek's passion for technology reflects in his unique approach to learning and development.

Login

Login

November 13, 2025

November 13, 2025 PREV

PREV

Comments