In the rapidly evolving world of Artificial Intelligence (AI), Large Language Models (LLMs) have emerged as powerful tools. They can generate human-like text, answer complex questions, and even write code. However, traditional LLMs often struggle when asked to integrate real-time data, perform precise computations, or interact with specialized software. This limitation gives rise to the concept of tool-augmented AI, where external tools extend the core capabilities of LLMs.

One architecture that exemplifies this approach is the MCP architecture, built on the Model Context Protocol (MCP), an open standard for connecting LLM applications to external tools and data sources. This framework represents a major step forward in how we design intelligent systems capable of real-world AI interaction.

The Core Challenge: Bridging LLMs with External Capabilities

Imagine asking an AI not just to describe the weather but to fetch the live forecast for your city using an API. Or requesting it to run a statistical analysis with external software. Traditional LLMs cannot directly perform these tasks. The MCP architecture solves this by creating a pipeline between the user’s query, the LLM, and a suite of specialized MCP Tools that execute the required tasks.
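For the LLM to pick a tool such as a weather lookup, each tool needs a machine-readable description it can read. The sketch below shows one plausible shape: a name, a description, and a JSON-Schema-style input schema. The tool name `get_forecast`, its fields, and the `missing_arguments` helper are hypothetical and chosen only for illustration.

```python
from typing import Dict, List

# Hypothetical descriptor for a weather tool; names and fields are illustrative.
FORECAST_TOOL: Dict[str, object] = {
    "name": "get_forecast",
    "description": "Fetch the live weather forecast for a city.",
    "inputSchema": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}


def missing_arguments(tool: dict, arguments: dict) -> List[str]:
    """Return the required argument names that the caller did not supply."""
    required = tool["inputSchema"].get("required", [])
    return [name for name in required if name not in arguments]


print(missing_arguments(FORECAST_TOOL, {"city": "Bengaluru"}))
print(missing_arguments(FORECAST_TOOL, {}))
```

Checking arguments against the schema before calling the tool lets the host reject an incomplete request early instead of sending it to an external system.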

How the MCP Architecture Works

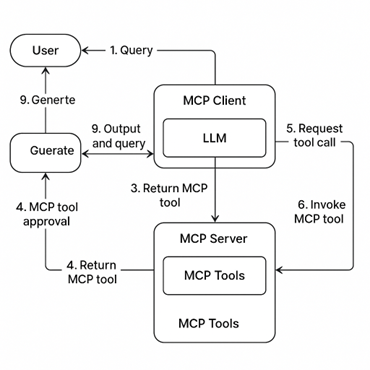

The MCP design follows a step-by-step workflow that blends natural language understanding with tool execution:

1. User Query

The process starts when the user submits a query in natural language. This represents the intent of the task.

2. LLM Orchestration

The query is first processed by the MCP Host (housing the LLM). The Large Language Model interprets intent and decides whether an external MCP tool is required.

3. Tool Selection

The LLM selects the appropriate MCP tool from an available repository. These tools may include APIs, databases, or custom computational scripts.

4. User Approval

Before execution, the chosen tool is presented to the user for approval. This ensures transparency and prevents unintended actions, especially when dealing with sensitive data.

5. Tool Execution

Once approved, the MCP Client sends the request to the MCP Server, which invokes the tool. This could involve fetching data, performing analysis, or interacting with external systems.

6. Returning Results

The tool output is returned to the MCP Server, passed back through the MCP Client, and then routed to the LLM. The LLM combines the original query with the new results to generate a refined and accurate response.
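The six steps above can be sketched as a single function. This is a minimal illustration, not a real MCP implementation: `choose_tool` stands in for the LLM's decision with a simple keyword check, the `TOOLS` dictionary stands in for the tool repository and returns canned data, and `approve` is a hypothetical callback representing the user's approval.

```python
from typing import Callable, Dict, Optional, Tuple

# Hypothetical tool repository: tool name -> callable returning canned data.
TOOLS: Dict[str, Callable[[dict], str]] = {
    "get_forecast": lambda args: f"Sunny, 29 C in {args['city']}",
}


def choose_tool(query: str) -> Optional[Tuple[str, dict]]:
    """Stand-in for steps 2 and 3; a real host would ask the LLM to decide."""
    if "weather" in query.lower():
        return "get_forecast", {"city": "Bengaluru"}
    return None


def answer(query: str, approve: Callable[[str, dict], bool]) -> str:
    """Run one pass of the workflow for a single user query (step 1)."""
    choice = choose_tool(query)  # steps 2-3: interpret intent, select a tool
    if choice is None:
        return "Answered directly by the LLM (no tool needed)."
    name, args = choice
    if not approve(name, args):  # step 4: user approval
        return f"Tool '{name}' was not approved; answering without live data."
    result = TOOLS[name](args)  # step 5: tool execution
    return f"Based on {name}: {result}"  # step 6: fold the result into the reply


print(answer("What's the weather today?", approve=lambda name, args: True))
print(answer("What's the weather today?", approve=lambda name, args: False))
```

Passing approval in as a callback keeps the human-in-the-loop check separate from tool execution, so the same loop works whether approval comes from a dialog box, a policy rule, or a test.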

Why MCP Architecture Matters

The MCP architecture unlocks several critical benefits:

– Enhanced Accuracy: Grounding answers in external data can reduce hallucinations and improve factual correctness.

– Expanded Capabilities: From stock prices to medical datasets, the LLM can now interact with diverse systems.

– Real-Time Interaction: Responses can reflect live data fetched at query time, rather than only what the model learned during training.

– User Control: Tool approval keeps humans in the loop, improving trust and safety.

– Scalability: New MCP tools can be added without disrupting the architecture.
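The scalability point can be illustrated with a small registry pattern: tools register themselves by name, and the dispatch function stays the same as tools are added. This is a sketch under assumptions. The `tool` decorator, `REGISTRY`, `call_tool`, and both example tools are hypothetical names, not part of any MCP library.

```python
from typing import Callable, Dict

# Hypothetical in-memory registry mapping tool names to functions.
REGISTRY: Dict[str, Callable[..., object]] = {}


def tool(name: str):
    """Register the decorated function under the given tool name."""
    def decorator(func):
        REGISTRY[name] = func
        return func
    return decorator


def call_tool(name: str, **arguments):
    """Dispatch by name; this function does not change when tools are added."""
    if name not in REGISTRY:
        raise KeyError(f"Unknown tool: {name}")
    return REGISTRY[name](**arguments)


@tool("add")
def add(a: float, b: float) -> float:
    return a + b


# A second tool added later requires no edits to call_tool.
@tool("stock_in_hand")
def stock_in_hand(sku: str) -> int:
    inventory = {"SKU-1": 12}  # canned data for illustration
    return inventory.get(sku, 0)


print(call_tool("add", a=2, b=3))
print(call_tool("stock_in_hand", sku="SKU-1"))
```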

Visualizing the MCP Workflow

The workflow diagram shown earlier illustrates how user queries are processed, tools are invoked, and results are fed back into the LLM for a complete AI interaction loop.

Real-World Applications

The MCP architecture can transform industries:

– Finance: AI agents can pull live stock prices, run models, and provide actionable insights.

– Healthcare: LLMs can fetch patient data securely and generate diagnostic reports.

– Retail: Chatbots can integrate with inventory APIs for real-time product availability.

Learning Path with CloudThat

To explore architectures like MCP and advance your expertise in tool-augmented AI, check out CloudThat’s specialized training programs:

– Generative AI Solutions on Azure

– Azure AI Fundamental Certification Training

These courses provide hands-on labs to help you design and deploy scalable AI systems.

Conclusion

The MCP architecture represents a turning point in how we build AI systems. It blends the natural language power of Large Language Models with the precision of specialized tools, ensuring trustworthy, real-time, and scalable AI interaction. For businesses and professionals, this approach lays the foundation for advanced intelligent agents capable of handling complex, real-world tasks.

By mastering MCP architecture concepts today, you can position yourself at the forefront of the next era in AI innovation.

About CloudThat

CloudThat is an award-winning company and the first in India to offer cloud training and consulting services worldwide. As a Microsoft Solutions Partner, AWS Advanced Tier Training Partner, and Google Cloud Platform Partner, CloudThat has empowered over 850,000 professionals through 600+ cloud certifications, winning global recognition for its training excellence, including 20 MCT Trainers in Microsoft’s Global Top 100 and 12 awards in the last 8 years. CloudThat specializes in Cloud Migration, Data Platforms, DevOps, IoT, and cutting-edge technologies like Gen AI & AI/ML. It has delivered over 500 consulting projects for 250+ organizations in 30+ countries as it continues to empower professionals and enterprises to thrive in the digital-first world.

WRITTEN BY Abhishek Srivastava

Abhishek Srivastava is a Subject Matter Expert and Microsoft Certified Trainer (MCT), as well as a Google Cloud Authorized Instructor (GCI), with over 15 years of experience in academia and professional training. He has trained more than 7,000 participants worldwide and has been recognized among the Top 100 Global Microsoft Certified Trainers, receiving awards from Microsoft for his outstanding contributions. Abhishek is known for simplifying complex topics using practical examples and clear explanations. His areas of expertise include AI agents, Agentic AI, Generative AI, LangChain, Machine Learning, Deep Learning, NLP, Data Science, SQL, and cloud technologies such as Azure and Google Cloud. He also has hands-on experience with Snowflake, Python, and Image Processing. His in-depth technical knowledge has made him a sought-after trainer for clients in the USA, UK, Canada, Singapore, and Germany. In his free time, Abhishek enjoys exploring new technologies, sharing knowledge, and mentoring aspiring professionals.

September 12, 2025
