
Introduction

Artificial Intelligence (AI) and Machine Learning (ML) are changing industries from healthcare and finance to retail and manufacturing. But building a high-performing ML model is only part of the equation. To keep models accurate, reliable, scalable, and secure, they need systematic testing, especially when they are deployed on cloud platforms such as Amazon Web Services (AWS).

In this blog, we’ll explore:

- What is AI/ML testing?

- Why testing AI/ML is different

- AWS tools for ML development and testing

- Testing strategies and best practices on AWS


What is AI/ML Testing?

AI/ML testing refers to validating the entire machine learning pipeline—from data ingestion and preprocessing to model training, evaluation, and deployment. It includes:

- Data Validation: Ensuring data quality, format, and distribution

- Model Validation: Measuring accuracy and bias, and checking for overfitting or underfitting

- Performance Testing: Measuring prediction latency, throughput, and scalability

- Integration Testing: Ensuring the ML model works within the larger system (API, UI, etc.)

- Security & Compliance: Protecting data privacy and adhering to regulations

Why Testing AI/ML is Different

Unlike traditional software, ML systems learn from data rather than being explicitly programmed. This introduces unique challenges:

- Non-determinism: Two training runs may not produce the same model

- Data drift: Model accuracy may degrade as the distribution of incoming data shifts away from the training data over time

- Bias and fairness: Models can unintentionally reflect societal biases

- Explainability: Black-box models are harder to debug or interpret
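Of these challenges, data drift is the easiest to quantify. The sketch below shows one illustrative way to do it, by computing a Population Stability Index (PSI) between a baseline sample and a newer sample of a single numeric feature. It is not taken from any AWS service; the function name, the bin count, and the sample data are assumptions made for this example.

```python
import math
from typing import List, Sequence


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], bins: int = 10
) -> float:
    """PSI between a baseline sample and a newer sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    # Equal-width bins over the baseline range; fall back to 1.0 if the range is zero.
    width = (hi - lo) / bins or 1.0

    def bin_fractions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values to the edge bins
            counts[idx] += 1
        eps = 1e-6  # keeps log() defined when a bin is empty
        return [max(c / len(values), eps) for c in counts]

    e = bin_fractions(expected)
    a = bin_fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Illustrative data: the "new" sample is the baseline shifted upward by 50.
baseline = [float(v) for v in range(100)]
shifted = [v + 50.0 for v in baseline]

print(population_stability_index(baseline, baseline))  # identical samples: no drift
print(population_stability_index(baseline, shifted))   # shifted sample: large PSI
```

A commonly cited rule of thumb treats a PSI below 0.1 as stable and above 0.25 as a significant shift, but thresholds are best tuned per feature.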

AWS Tools for AI/ML Testing

AWS offers a rich set of services and tools to test and manage ML workflows effectively:

- Amazon SageMaker

- End-to-end ML development and deployment platform

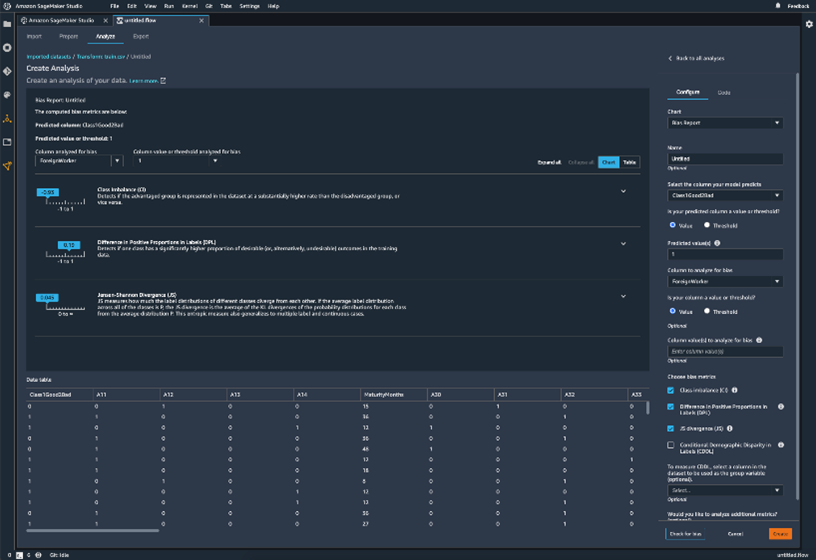

- SageMaker Model Monitor: Continuously monitors deployed models for data drift and anomalies

- SageMaker Clarify: Detects potential bias in data and models and helps explain model predictions

- SageMaker Debugger: Automatically detects training issues (e.g., overfitting)

- AWS Glue

- ETL service used for data preprocessing and validation

- Supports data quality checks before feeding into models

- Amazon Athena + Amazon S3

- Perform SQL-based data validation directly on raw datasets stored in S3

- Amazon CloudWatch

- Track and log model performance, latency, and failure events during inference

- AWS Lambda + API Gateway

- For unit and integration testing of ML models deployed as APIs

- AWS CodePipeline + AWS CodeBuild

- CI/CD for ML: Automate testing, training, and deployment steps

Testing Strategies for AI/ML Projects on AWS

- Data Quality Testing

- Use AWS Glue DataBrew or pandas in SageMaker notebooks to validate nulls, duplicates, and schema mismatches.

- Schedule periodic data audits with AWS Lambda.
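As a minimal sketch of the pandas route, the function below reports nulls, duplicate rows, and schema mismatches for a DataFrame. The column names, the expected dtypes, and the sample data are hypothetical and would be replaced by your own dataset's schema.

```python
import pandas as pd

# Hypothetical schema for illustration only.
EXPECTED_SCHEMA = {"customer_id": "int64", "age": "int64", "income": "float64"}


def data_quality_report(df: pd.DataFrame, expected_schema: dict) -> dict:
    """Summarize nulls, duplicate rows, and schema mismatches in a DataFrame."""
    missing_columns = [c for c in expected_schema if c not in df.columns]
    dtype_mismatches = {
        c: str(df[c].dtype)
        for c, expected in expected_schema.items()
        if c in df.columns and str(df[c].dtype) != expected
    }
    return {
        # Only columns that actually contain nulls are listed.
        "null_counts": {c: int(n) for c, n in df.isna().sum().items() if n > 0},
        "duplicate_rows": int(df.duplicated().sum()),
        "missing_columns": missing_columns,
        "dtype_mismatches": dtype_mismatches,
    }


# Sample data with one null, one duplicated row, and a resulting dtype change.
sample = pd.DataFrame(
    {
        "customer_id": [1, 2, 2, 4],
        "age": [34, 51, 51, None],
        "income": [52000.0, 61000.0, 61000.0, 48000.0],
    }
)
report = data_quality_report(sample, EXPECTED_SCHEMA)
print(report)
```

Because the report is a plain dictionary, the same function could be called from a notebook or from a scheduled job that fails when any of the counts is non-zero.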

- Model Unit Testing

- Use testing frameworks like PyTest or unittest for:

- Preprocessing functions

- Model inference consistency

- Package tests with the model code and integrate with AWS CodeBuild
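The tests below sketch what PyTest-style checks for a preprocessing function and for inference consistency might look like. `min_max_scale` and `ThresholdModel` are hypothetical stand-ins written for this example; a real suite would import your own preprocessing code and load the trained model artifact.

```python
import math
from typing import List


def min_max_scale(values: List[float]) -> List[float]:
    """Hypothetical preprocessing step: rescale values into [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)  # constant input: avoid division by zero
    return [(v - lo) / (hi - lo) for v in values]


class ThresholdModel:
    """Stand-in for a trained model; a real test would load the saved artifact."""

    def __init__(self, threshold: float = 0.5) -> None:
        self.threshold = threshold

    def predict(self, features: List[float]) -> List[int]:
        return [1 if f >= self.threshold else 0 for f in features]


def test_scaling_stays_in_unit_interval() -> None:
    scaled = min_max_scale([10.0, 20.0, 40.0])
    assert math.isclose(min(scaled), 0.0) and math.isclose(max(scaled), 1.0)
    assert all(0.0 <= v <= 1.0 for v in scaled)


def test_scaling_handles_constant_input() -> None:
    assert min_max_scale([7.0, 7.0, 7.0]) == [0.0, 0.0, 0.0]


def test_inference_is_repeatable() -> None:
    model = ThresholdModel()
    features = min_max_scale([10.0, 20.0, 40.0])
    # The same input must yield the same output on repeated calls.
    assert model.predict(features) == model.predict(features)
    assert model.predict(features) == [0, 0, 1]
```

Saved in a file such as `test_model.py` (a hypothetical name), these tests run with the `pytest` command locally or as a build step in AWS CodeBuild.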

- Model Evaluation Testing

- Split datasets into training/validation/test sets

- Track metrics such as accuracy, precision, and recall with SageMaker Experiments

- Automate regression tests for model updates
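One way to automate a regression test for a model update is to compare the candidate's metrics against a stored baseline and flag any metric that drops by more than a tolerance. The sketch below does this for binary labels in plain Python; the function names, the tolerance, and the baseline numbers are assumptions for illustration and are not part of SageMaker Experiments.

```python
from typing import Dict, List, Sequence


def classification_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, precision, and recall for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


def find_regressions(
    baseline: Dict[str, float], candidate: Dict[str, float], tolerance: float = 0.01
) -> List[str]:
    """Names of metrics where the candidate is worse than baseline by more than tolerance."""
    return [name for name in baseline if candidate[name] < baseline[name] - tolerance]


# Illustrative held-out labels and candidate predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 0, 1, 1, 0]
candidate_metrics = classification_metrics(y_true, y_pred)

# Hypothetical metrics recorded for the currently deployed model.
baseline_metrics = {"accuracy": 0.80, "precision": 0.75, "recall": 0.70}

regressed = find_regressions(baseline_metrics, candidate_metrics)
print(candidate_metrics, regressed)
```

A pipeline step could fail the build whenever `regressed` is non-empty, so a weaker model never reaches deployment unnoticed.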

- Bias & Explainability Testing

- Leverage SageMaker Clarify to:

- Test feature importance

- Detect and mitigate bias
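To make the idea of a bias metric concrete, the sketch below computes a disparate impact ratio by hand: the rate of positive predictions for one group divided by the rate for another. It illustrates the kind of quantity a bias report contains and is not SageMaker Clarify's implementation; the group labels and predictions are made up.

```python
from typing import Sequence


def disparate_impact(
    predictions: Sequence[int],
    groups: Sequence[str],
    disadvantaged: str,
    advantaged: str,
) -> float:
    """Ratio of positive-prediction rates: disadvantaged group over advantaged group."""

    def positive_rate(group: str) -> float:
        selected = [p for p, g in zip(predictions, groups) if g == group]
        return sum(selected) / len(selected)

    return positive_rate(disadvantaged) / positive_rate(advantaged)


# Illustrative data: group "a" receives positive predictions more often than group "b".
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

ratio = disparate_impact(predictions, groups, disadvantaged="b", advantaged="a")
print(round(ratio, 3))
```

A ratio near 1.0 means both groups receive positive predictions at similar rates. A value below roughly 0.8 is often used as a signal to investigate further, although the right threshold depends on the use case and applicable regulations.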

- Performance & Load Testing

- Use Amazon SageMaker endpoints + Amazon CloudWatch to monitor latency and throughput

- Simulate concurrent users with a load-testing tool such as Apache JMeter, or with Lambda functions that call the endpoint in parallel
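Before reaching for a full load-testing tool, a small script can approximate concurrent users and summarize latency. The sketch below uses a thread pool and a stub in place of a real endpoint. `fake_endpoint`, the request count, and the concurrency level are assumptions; in practice the stub would be replaced by a function that calls your deployed endpoint, for example through the boto3 `sagemaker-runtime` client's `invoke_endpoint`.

```python
import math
import statistics
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, Dict, Sequence


def timed_call(invoke: Callable[[Any], Any], payload: Any) -> float:
    """Return the wall-clock seconds taken by one invocation."""
    start = time.perf_counter()
    invoke(payload)
    return time.perf_counter() - start


def run_load_test(
    invoke: Callable[[Any], Any], payloads: Sequence[Any], concurrency: int = 8
) -> Dict[str, float]:
    """Send payloads from `concurrency` worker threads and summarize latency."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = sorted(pool.map(lambda p: timed_call(invoke, p), payloads))
    # Nearest-rank 95th percentile on the sorted latencies.
    p95_index = max(0, math.ceil(0.95 * len(latencies)) - 1)
    return {
        "requests": len(latencies),
        "p50_s": statistics.median(latencies),
        "p95_s": latencies[p95_index],
        "max_s": latencies[-1],
    }


def fake_endpoint(payload: Any) -> Dict[str, int]:
    """Stub standing in for a real inference call; sleeps to simulate latency."""
    time.sleep(0.01)
    return {"prediction": 0}


summary = run_load_test(fake_endpoint, payloads=list(range(40)), concurrency=8)
print(summary)
```

The client-side percentiles from a script like this can be compared with the endpoint latency metrics recorded in CloudWatch to separate network overhead from model inference time.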

- Security Testing

- Encrypt data in transit and at rest, using AWS KMS to manage keys

- Apply least-privilege IAM roles for model training and inference

- Use Amazon Macie for sensitive data classification

Best Practices

- Automate tests in your CI/CD pipeline (CodePipeline)

- Monitor models post-deployment (Model Monitor, CloudWatch)

- Version both datasets and models (for example, S3 versioning for data and SageMaker Model Registry for models)

- Regularly retrain and validate models to combat data drift

- Document assumptions, features, and test results for compliance and audits

Summary

AI/ML systems are only as reliable as the tests we build around them. AWS provides a robust ecosystem to develop, test, monitor, and scale ML models. Whether you’re a data scientist or an MLOps engineer, implementing a structured testing strategy ensures your models remain trustworthy, performant, and production-ready.

Want to get started? Try building a CI/CD pipeline for ML with Amazon SageMaker, using CodePipeline to automate testing and CloudWatch to monitor the deployed model.



WRITTEN BY Vivek Kumar

Vivek Kumar is a Senior Subject Matter Expert at CloudThat, specializing in Cloud and Data Platforms. With 11+ years of experience in the IT industry, he has trained over 2,000 professionals in various technologies, including Cloud and Full Stack Development. Known for simplifying complex concepts and for hands-on teaching, he brings deep technical knowledge and practical application to every learning experience. Vivek's passion for technology is reflected in his approach to learning and development.


September 12, 2025
