

Overview

Kubernetes cost optimization strategies and tools help organizations manage cloud infrastructure spending efficiently. The core strategies are right-sizing clusters and workloads and using autoscaling so that capacity tracks application demand. Cost management tools such as Kubecost, cloud-provider cost explorers (for example, AWS Cost Explorer), and Prometheus help monitor resource usage and attribute spending to workloads. Together, these practices prevent overprovisioning, reduce operational costs, and keep utilization high in today's cloud-native, containerized application landscape.


Introduction

In recent years, Kubernetes has become the de facto standard platform for deploying and managing containerized applications at scale. While Kubernetes offers numerous benefits, such as flexibility, scalability, and agility, it can lead to unexpected cost overruns if not managed efficiently. This guide explores various methods and tools for optimizing Kubernetes costs, helping you strike the right balance between performance and budget.

Understanding Kubernetes Costs

Before diving into optimization strategies, it’s crucial to grasp the factors contributing to Kubernetes costs. The primary cost drivers are:

- Compute Resources: Kubernetes clusters run on virtual machines (VMs) or physical servers. The cost of these resources depends on the number of nodes, their specifications, and the cloud provider.

- Storage: Persistent storage volumes are used for data persistence and application state. The type and size of storage, as well as data transfer costs, impact expenses.

- Networking: Kubernetes involves network traffic between pods, services, and external resources. Data transfer costs and network ingress/egress charges can add up.

- Management and Support: The cost of Kubernetes management tools, monitoring, and support services.

- Third-party Services: Expenses for external services integrated with your Kubernetes applications, such as databases or messaging queues.
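Together, these cost drivers make up a monthly bill. The sketch below totals them from a handful of inputs to show how the drivers combine. Every price in it is a hypothetical placeholder, not a real provider rate. The 730-hour month is the usual averaging convention (8,760 hours / 12).

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # common averaging convention: 8,760 hours / 12 months


@dataclass
class CostInputs:
    """Monthly cost inputs; all prices are hypothetical placeholders."""
    node_count: int
    node_hourly_price: float        # compute: price per node-hour
    storage_gb: float
    storage_gb_month_price: float   # storage: price per GB-month
    egress_gb: float
    egress_gb_price: float          # networking: price per GB of egress
    management_monthly: float       # management/support tooling, flat monthly
    third_party_monthly: float      # external databases, queues, etc.


def estimate_monthly_cost(c: CostInputs) -> dict[str, float]:
    """Break a monthly Kubernetes bill down by the cost drivers listed above."""
    breakdown = {
        "compute": c.node_count * c.node_hourly_price * HOURS_PER_MONTH,
        "storage": c.storage_gb * c.storage_gb_month_price,
        "networking": c.egress_gb * c.egress_gb_price,
        "management": c.management_monthly,
        "third_party": c.third_party_monthly,
    }
    # The right-hand side is evaluated before "total" is added, so nothing is double-counted.
    breakdown["total"] = sum(breakdown.values())
    return breakdown


if __name__ == "__main__":
    inputs = CostInputs(6, 0.10, 500, 0.08, 200, 0.09, 100.0, 50.0)
    for driver, cost in estimate_monthly_cost(inputs).items():
        print(f"{driver:>12}: {cost:10.2f}")
```

If you substitute your own provider's rates, the breakdown shows which driver dominates. That tells you which of the strategies below to tackle first.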

Top Kubernetes Cost Optimization Strategies

1. Rightsizing Your Cluster

Rightsizing your cluster is a fundamental cost optimization strategy. It involves adjusting the number and types of Kubernetes cluster nodes to match your resource needs. Overprovisioning nodes leads to unnecessary costs, while underprovisioning can hurt performance.

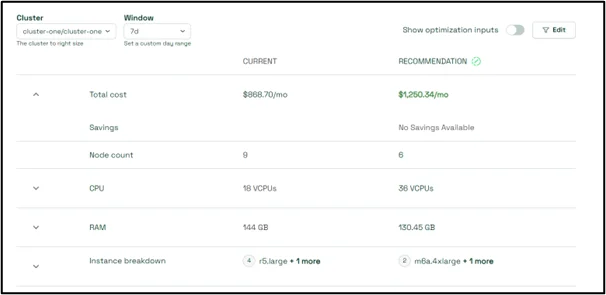

Tools: Cloud-provider scaling mechanisms such as AWS Auto Scaling groups and Azure Virtual Machine Scale Sets, the Kubernetes Cluster Autoscaler (used by Google Kubernetes Engine and other platforms), and third-party tools such as Kubecost and cast.ai, which can generate cluster right-sizing recommendations.

The cluster right-sizing recommendation above is generated by Kubecost. To install Kubecost, follow the Installation guide in the Kubecost documentation.
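Tools like these start from a simple calculation: compare the total CPU and memory that pods request with what one node of a given type can offer. The sketch below shows that arithmetic. The 75% target utilization and the node shapes in the usage block are hypothetical. The calculation also ignores bin-packing fragmentation, so treat its result as a lower bound rather than a recommendation.

```python
import math


def nodes_needed(pod_requests: list[tuple[int, int]],
                 node_allocatable: tuple[int, int],
                 target_utilization: float = 0.75) -> int:
    """Lower-bound estimate of how many nodes of one type a workload needs.

    pod_requests: (cpu_millicores, memory_mib) per pod replica.
    node_allocatable: (cpu_millicores, memory_mib) allocatable on one node.
    target_utilization: hypothetical fill ratio that leaves headroom for
        spikes, DaemonSets, and rolling updates.
    """
    total_cpu = sum(cpu for cpu, _ in pod_requests)
    total_mem = sum(mem for _, mem in pod_requests)
    usable_cpu = node_allocatable[0] * target_utilization
    usable_mem = node_allocatable[1] * target_utilization
    # Whichever resource runs out first determines the node count.
    return max(1, math.ceil(total_cpu / usable_cpu), math.ceil(total_mem / usable_mem))


if __name__ == "__main__":
    pods = [(500, 1024)] * 20  # 20 replicas, each requesting 500m CPU and 1 GiB
    # Two hypothetical node shapes (allocatable values are illustrative only).
    print("cpu-heavy nodes:", nodes_needed(pods, (7_900, 14_000)))
    print("memory-heavy nodes:", nodes_needed(pods, (3_900, 28_000)))
```

Multiplying each node count by that node type's price is a quick way to compare instance families before running a fuller analysis.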

2. Resource Allocation and Utilization

Efficient resource allocation and utilization are critical for cost optimization. Kubernetes lets you specify how much CPU and memory each container requests and the limit it may consume. Requests determine how much node capacity the scheduler reserves for a pod, while limits cap what a container can use at runtime. Setting these values accurately, based on your application's actual requirements, avoids reserving capacity that sits idle. It also prevents resource contention, improves application performance, and reduces costs.

Tools and techniques: Kubernetes ResourceQuota, LimitRange, Vertical Pod Autoscaler (VPA), Kubecost, cast.ai, etc.
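One way to turn observed usage into requests and limits is a percentile-plus-headroom rule: take a high percentile of the usage samples and add a safety margin. The sketch below applies that rule. The 90th percentile, 15% headroom, and 2x CPU limit factor are hypothetical choices for illustration. This is not the exact algorithm used by VPA or Kubecost.

```python
import math


def recommend_request(samples: list[float], percentile: float = 0.90,
                      headroom: float = 0.15) -> int:
    """Percentile-plus-headroom heuristic for a container resource request.

    Illustrative assumption only: not the exact algorithm used by VPA or Kubecost.
    """
    if not samples:
        raise ValueError("need at least one usage sample")
    ordered = sorted(samples)
    # Nearest-rank percentile: smallest sample covering `percentile` of observations.
    rank = max(1, math.ceil(percentile * len(ordered)))
    return math.ceil(ordered[rank - 1] * (1 + headroom))


def container_resources(cpu_millicores: list[float], memory_mib: list[float],
                        cpu_limit_factor: float = 2.0) -> dict:
    """Build a container `resources` block from observed usage samples."""
    cpu_req = recommend_request(cpu_millicores)
    mem_req = recommend_request(memory_mib)
    return {
        "requests": {"cpu": f"{cpu_req}m", "memory": f"{mem_req}Mi"},
        "limits": {
            # Hypothetical choice: allow CPU to burst above the request.
            "cpu": f"{math.ceil(cpu_req * cpu_limit_factor)}m",
            # Memory limit equals the request so node memory is never overcommitted
            # (exceeding a memory limit gets the container OOM-killed; CPU is only throttled).
            "memory": f"{mem_req}Mi",
        },
    }


if __name__ == "__main__":
    cpu = [100, 110, 115, 120, 125, 130, 135, 140, 150, 400]  # millicores, one spike
    mem = [300, 310, 305, 320, 330, 315, 325, 340, 335, 350]  # MiB
    print(container_resources(cpu, mem))
```

Because the rule uses a percentile rather than the maximum, a single spike does not inflate the request. The tradeoff is that such spikes rely on the limit, or on spare node capacity, to be absorbed.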

The container request right-sizing recommendation below is provided by Kubecost.

3. Pod Density

Pod density optimization involves maximizing the number of pods running on each node without causing resource contention. You can control pod placement with affinity and anti-affinity rules. Node affinity ensures pods are scheduled only onto nodes whose labels match specific criteria. Pod anti-affinity keeps a pod off nodes that already host pods from the same application. Used together with accurate resource requests, these rules distribute workloads across nodes in a controlled way, keeping node capacity well utilized and reducing the need for additional nodes.

Tools and techniques: Kubernetes pod affinity and anti-affinity (podAffinity and podAntiAffinity), Kubecost, cast.ai.

The underutilized node details above are provided by Kubecost.
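Both kinds of rule live in the pod spec's affinity field. The sketch below builds a Pod manifest as a Python dict and prints it as JSON, which kubectl accepts as well as YAML. The node-pool label and its "general" value, the "web" app label, and the nginx image are all hypothetical. The anti-affinity rule is deliberately "preferred" rather than "required". With a preferred rule, the scheduler spreads replicas when it can but may still co-locate them rather than demand extra nodes.

```python
import json


def web_pod(app: str, node_pool: str) -> dict:
    """Pod manifest pinned to a node pool, with replicas preferably spread across nodes."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": f"{app}-example", "labels": {"app": app}},
        "spec": {
            "affinity": {
                # Hard rule: only schedule onto nodes labelled node-pool=<node_pool>
                # ("node-pool" is a hypothetical label you would apply to your nodes).
                "nodeAffinity": {
                    "requiredDuringSchedulingIgnoredDuringExecution": {
                        "nodeSelectorTerms": [{
                            "matchExpressions": [{
                                "key": "node-pool",
                                "operator": "In",
                                "values": [node_pool],
                            }]
                        }]
                    }
                },
                # Soft rule: prefer nodes (per hostname) not already running this app.
                "podAntiAffinity": {
                    "preferredDuringSchedulingIgnoredDuringExecution": [{
                        "weight": 100,
                        "podAffinityTerm": {
                            "labelSelector": {"matchLabels": {"app": app}},
                            "topologyKey": "kubernetes.io/hostname",
                        },
                    }]
                },
            },
            "containers": [{
                "name": app,
                "image": "nginx:1.25",  # placeholder image
                "resources": {"requests": {"cpu": "250m", "memory": "256Mi"}},
            }],
        },
    }


if __name__ == "__main__":
    print(json.dumps(web_pod("web", "general"), indent=2))
```

In a real workload, the same affinity block would normally sit in a Deployment's pod template rather than in a standalone Pod.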

4. Efficient Storage Usage

Minimizing storage costs is crucial in Kubernetes cost optimization. Kubernetes provides StorageClasses that let you define different storage tiers with varying performance characteristics. Choosing the appropriate StorageClass for each application's data avoids overprovisioning expensive storage for less critical data. Regularly monitoring and reclaiming unused or orphaned persistent volumes (PVs) frees up resources and reduces storage costs. Tools like Kasten K10 offer backup and recovery solutions that help you manage storage efficiently.

The volume resizing recommendations above are provided by Kubecost.
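Orphaned volumes can be spotted from their status phase. A PV in the Released phase has lost its claim but has not been reclaimed, which typically happens when its reclaim policy is Retain. The sketch below lists unbound PVs from the JSON output of "kubectl get pv -o json". The pvs.json file name is an assumption. Review the list before deleting anything, because an Available PV may have been pre-provisioned on purpose.

```python
import json

# Phases in which a PV is not bound to any PersistentVolumeClaim.
UNBOUND_PHASES = {"Available", "Released", "Failed"}


def reclaim_candidates(pv_list: dict) -> list[dict]:
    """Summarize unbound PVs from the parsed output of `kubectl get pv -o json`."""
    candidates = []
    for pv in pv_list.get("items", []):
        phase = pv.get("status", {}).get("phase")
        if phase not in UNBOUND_PHASES:
            continue
        spec = pv.get("spec", {})
        candidates.append({
            "name": pv["metadata"]["name"],
            "phase": phase,
            "capacity": spec.get("capacity", {}).get("storage"),
            "storageClass": spec.get("storageClassName"),
            "reclaimPolicy": spec.get("persistentVolumeReclaimPolicy"),
        })
    return candidates


if __name__ == "__main__":
    # Assumes the cluster's PVs were saved first: kubectl get pv -o json > pvs.json
    try:
        with open("pvs.json") as f:
            for pv in reclaim_candidates(json.load(f)):
                print(pv)
    except FileNotFoundError:
        print("pvs.json not found; run: kubectl get pv -o json > pvs.json")
```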

5. Cost Monitoring and Analysis

Regular cost monitoring and analysis are essential for identifying inefficiencies and cost spikes in your Kubernetes environment. Most cloud providers offer cost management tools that allow you to track and visualize your spending. These tools provide insights into which resources drive costs and can help you make informed decisions about resource allocation and scaling.

Tools: Cloud provider cost management tools (e.g., AWS Cost Explorer, Google Cloud Cost Management) and third-party tools such as Kubecost.

The cluster cost summary above is provided by Kubecost.
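To see which teams drive a cost summary, you need a rule for attributing shared node costs to workloads. One common convention charges each workload for the larger of what it requested and what it used, because requested capacity is reserved even when idle. The sketch below applies that convention per namespace. The record fields, the sample data, and the per-core-hour and per-GiB-hour prices are all hypothetical.

```python
from collections import defaultdict


def cost_by_namespace(records: list[dict], cpu_core_hour: float,
                      mem_gib_hour: float) -> dict[str, float]:
    """Allocate compute cost to namespaces, charging max(request, usage).

    Each record describes one workload over a period: namespace, hours,
    cpu_request / cpu_usage (cores), mem_request / mem_usage (GiB).
    Prices are hypothetical inputs, not real provider rates.
    """
    totals = defaultdict(float)
    for r in records:
        # Requested capacity is reserved even when idle, so charge at least the request.
        cpu = max(r["cpu_request"], r["cpu_usage"])
        mem = max(r["mem_request"], r["mem_usage"])
        totals[r["namespace"]] += r["hours"] * (cpu * cpu_core_hour + mem * mem_gib_hour)
    # Most expensive namespaces first.
    return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))


if __name__ == "__main__":
    sample = [  # made-up records for illustration
        {"namespace": "checkout", "hours": 720, "cpu_request": 2.0, "cpu_usage": 0.4,
         "mem_request": 4.0, "mem_usage": 2.5},
        {"namespace": "batch", "hours": 200, "cpu_request": 1.0, "cpu_usage": 3.0,
         "mem_request": 2.0, "mem_usage": 1.5},
    ]
    print(cost_by_namespace(sample, cpu_core_hour=0.03, mem_gib_hour=0.004))
```

A namespace whose cost is driven mostly by requests rather than usage is a natural candidate for the request right-sizing described in section 2.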

6. Cluster Scheduling

Cluster scheduling optimizes pod placement across nodes to ensure efficient resource utilization. Kubernetes' default scheduler, kube-scheduler, makes placement decisions based on resource requests, node affinity, and anti-affinity rules. You can customize its behavior through scheduler configuration profiles and plugin settings. Alternatively, you can run additional schedulers, such as Rancher's scheduler, to handle specific requirements.

Tools: The default Kubernetes scheduler (kube-scheduler) and external schedulers such as Rancher's scheduler.
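As an example of scheduler configuration, kube-scheduler's NodeResourcesFit plugin can score nodes with the MostAllocated strategy instead of its default. MostAllocated favors nodes that are already busy, packing pods more tightly so that emptier nodes can be scaled away. The sketch below generates such a KubeSchedulerConfiguration as JSON, which is also valid YAML for kube-scheduler's --config flag. The profile name "bin-packing-scheduler" and the equal CPU and memory weights are assumptions. On managed services you usually cannot change the built-in scheduler, so a profile like this would typically run as an additional scheduler that pods select through spec.schedulerName.

```python
import json


def bin_packing_scheduler_config(scheduler_name: str = "bin-packing-scheduler") -> dict:
    """KubeSchedulerConfiguration that scores fuller nodes higher (bin packing)."""
    return {
        "apiVersion": "kubescheduler.config.k8s.io/v1",
        "kind": "KubeSchedulerConfiguration",
        "profiles": [{
            # Hypothetical profile name; pods opt in with spec.schedulerName.
            "schedulerName": scheduler_name,
            "pluginConfig": [{
                "name": "NodeResourcesFit",
                "args": {
                    "scoringStrategy": {
                        # Prefer nodes with the highest requested/allocatable ratio.
                        "type": "MostAllocated",
                        "resources": [
                            {"name": "cpu", "weight": 1},
                            {"name": "memory", "weight": 1},
                        ],
                    }
                },
            }],
        }],
    }


if __name__ == "__main__":
    # JSON is valid YAML, so this output can be saved as the kube-scheduler --config file.
    print(json.dumps(bin_packing_scheduler_config(), indent=2))
```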

7. Cost-Efficient Architectures

Consider cost-efficient architectures when designing your Kubernetes applications. Serverless compute options and managed Kubernetes services offered by cloud providers can reduce operational overhead and optimize costs.

Tools: AWS Fargate, Google Kubernetes Engine (GKE), Azure Kubernetes Service (AKS), and cloud-native serverless platforms.

8. Optimize for Spot Instances or Preemptible VMs

You can reduce costs for non-production and other fault-tolerant workloads by using spot instances (AWS) or preemptible VMs (Google Cloud). These resources are significantly cheaper than on-demand instances. The caveat is that they can be reclaimed on short notice; for example, AWS gives a two-minute interruption notice for Spot Instances.

Tools and techniques: Kubernetes PodDisruptionBudgets, which limit how many replicas are evicted at once when spot nodes are drained, plus Kubecost, cast.ai, etc.

The spot instance recommendations above are provided by Kubecost.
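A typical pattern is to pin a replicated workload to spot nodes and pair it with a PodDisruptionBudget. The sketch below generates both manifests as a Kubernetes List. It assumes EKS managed node groups, which label spot nodes with eks.amazonaws.com/capacityType=SPOT; other providers use different labels. The app name, replica counts, and image are hypothetical. Note that a PDB only governs voluntary evictions. It helps during a spot interruption only when something, such as a node termination handler, drains the node after the interruption notice arrives.

```python
import json


def spot_workload_manifests(app: str, replicas: int = 4, min_available: int = 2) -> list[dict]:
    """Deployment pinned to spot nodes plus a PodDisruptionBudget protecting it."""
    if not 0 < min_available < replicas:
        raise ValueError("min_available must be positive and below replicas")
    labels = {"app": app}
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": app, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # Assumption: EKS managed node groups label spot nodes this way.
                    "nodeSelector": {"eks.amazonaws.com/capacityType": "SPOT"},
                    "containers": [{
                        "name": app,
                        "image": "nginx:1.25",  # placeholder image
                        "resources": {"requests": {"cpu": "250m", "memory": "256Mi"}},
                    }],
                },
            },
        },
    }
    pdb = {
        "apiVersion": "policy/v1",
        "kind": "PodDisruptionBudget",
        "metadata": {"name": f"{app}-pdb"},
        # Evictions (e.g. node drains) may not drop ready replicas below min_available.
        "spec": {"minAvailable": min_available, "selector": {"matchLabels": labels}},
    }
    return [deployment, pdb]


if __name__ == "__main__":
    manifest = {"apiVersion": "v1", "kind": "List", "items": spot_workload_manifests("web")}
    print(json.dumps(manifest, indent=2))
```

The printed List can be piped to "kubectl apply -f -". Running several replicas and spreading them over more than one spot node pool reduces the chance that a single reclamation takes out most of the capacity.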

Conclusion

Kubernetes cost optimization is an ongoing effort that requires a combination of best practices, resource management, and various tools and strategies. By rightsizing your cluster, optimizing resource usage, and making informed networking and storage decisions, you can balance performance and cost efficiency in your Kubernetes deployments.

Drop a query if you have any questions regarding Kubernetes and we will get back to you quickly.


About CloudThat

CloudThat is an award-winning company and the first in India to offer cloud training and consulting services worldwide. As a Microsoft Solutions Partner, AWS Advanced Tier Training Partner, and Google Cloud Platform Partner, CloudThat has empowered over 850,000 professionals through 600+ cloud certifications. It has won global recognition for its training excellence, including 20 MCT Trainers in Microsoft's Global Top 100 and 12 awards in the last 8 years. CloudThat specializes in Cloud Migration, Data Platforms, DevOps, IoT, and cutting-edge technologies like Gen AI & AI/ML. It has delivered over 500 consulting projects for 250+ organizations in 30+ countries as it continues to empower professionals and enterprises to thrive in the digital-first world.

FAQs

1. What are the potential risks of not optimizing Kubernetes costs?

ANS: – Failing to optimize Kubernetes costs can result in significant budget overruns. It may lead to unnecessary spending on compute resources, storage, and network usage. Additionally, it can impact the overall performance and scalability of your applications, as resources might be either underutilized or overcommitted.

2. How often should I perform cost monitoring and analysis for my Kubernetes cluster?

ANS: – Regular cost monitoring and analysis are essential. Ideally, you should review your costs on a weekly or monthly basis. This frequency allows you to promptly identify trends, anomalies, and potential areas for optimization. However, the exact cadence may vary depending on your organization’s needs and the scale of your Kubernetes deployments.

3. What's the difference between horizontal and vertical pod auto-scaling, and when should I use each?

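ANS: – Horizontal Pod Autoscaler (HPA) adjusts the number of pod replicas based on observed metrics such as CPU or memory utilization; custom and external metrics are also supported. It's suitable for handling varying workloads and scaling applications horizontally. Vertical Pod Autoscaler (VPA) adjusts the CPU and memory requests (and, proportionally, the limits) of a workload's pods, improving resource utilization and cost efficiency. Use HPA for scaling out and in, and VPA for fine-tuning resource allocation within pods. Avoid letting both act on the same CPU or memory metric for the same workload, since their adjustments can conflict.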
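The core HPA rule, as documented by Kubernetes, is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). Scaling is skipped when that ratio is within a tolerance of 1.0 (0.1 by default). The sketch below implements only that rule, clamped to the minimum and maximum replica counts. It leaves out stabilization windows, scaling policies, and missing-metric handling, and the default min/max values are hypothetical.

```python
import math


def hpa_desired_replicas(current_replicas: int, current_metric: float,
                         target_metric: float, *, tolerance: float = 0.1,
                         min_replicas: int = 1, max_replicas: int = 100) -> int:
    """Simplified HPA calculation: ceil(current * current_metric / target_metric)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: no scaling action.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Respect the HPA's configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 replicas averaging 90% CPU against a 60% target.
    print(hpa_desired_replicas(4, 90, 60))
```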

WRITTEN BY Harikrishnan S

Harikrishnan Seetharaman is a Research Associate (DevOps) at CloudThat. He holds a Bachelor of Engineering degree in Electronics and Communication and the AWS Certified Solutions Architect – Associate certification. His interests include implementing cloud-native solutions for customers and providing robust, reliable solutions to their complex problems, as well as DevOps and SaaS. Apart from his professional interests, he enjoys farming and learning new DevOps tools.


September 15, 2023
