Welcome to the future of AI development with Amazon Bedrock AgentCore – a game-changing framework from AWS that empowers developers to build intelligent, scalable and secure AI agents. Whether you’re crafting a customer support bot, a data analysis assistant or an automation agent, AgentCore gives you the infrastructure to make it real.

Unlike traditional AI tools, Amazon Bedrock AgentCore isn’t just about accessing foundation models like Claude or Titan. It’s about wrapping those models into fully functional AI agents with memory, identity, observability and tool integration. This means your agents can remember conversations, securely act on behalf of users and even browse the web or write code – all within a serverless, scalable environment.

With AgentCore Runtime, your agents run in isolated sessions, ensuring security and performance. The AgentCore Gateway enables interaction with external APIs, while AgentCore Memory facilitates both short-term and long-term context retention. Identity management integrates with systems like Cognito and Okta, and built-in tools like the Code Interpreter and Browser Tool make your agents truly capable.

Getting started is easy: explore the official documentation, dive into the GitHub samples and use the SDK to bootstrap your first agent. From there: deploy, integrate, observe and iterate.

Amazon Bedrock AgentCore is your launchpad for AI-first applications. Pricing is consumption-based: you pay only for what your agent uses, with no overprovisioning and no idle costs.

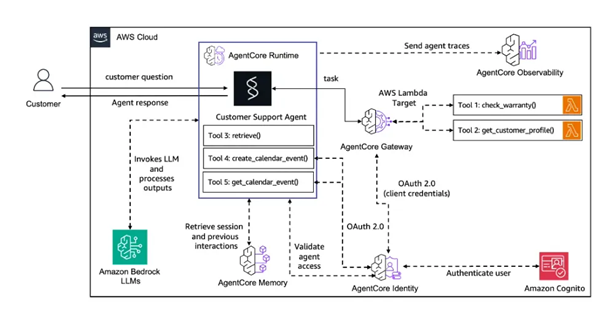

This post breaks down everything you need to know, along with a step-by-step walkthrough of the architecture diagram so you can visualize how all the pieces connect.

What Is Bedrock AgentCore?

At its heart, Bedrock AgentCore is a managed framework from AWS that helps you build, deploy and operate AI agents reliably, securely and at scale. Instead of rebuilding infrastructure, you focus on your agent logic, the “mind”, while AWS handles the “body” – runtime, memory, identity, gateway and observability.

So, when someone asks, “Is it Bedrock or Bedrock AgentCore?” here’s the quick answer:

- Bedrock = models (Claude, Titan, etc.)

- AgentCore = the framework that wraps models into production-grade agents

Core Components & How They Work

- AgentCore Runtime – Where your agent executes. Serverless, isolated per session and auto-scales.

- AgentCore Gateway – Lets agents call external APIs/tools; converts APIs into agent-aware tools.

- AgentCore Memory – Stores short-term session context and configurable long-term memory.

- Identity – Manages secure access and delegation (Cognito, Okta, OAuth 2.0).

- Built-in Tools – Code Interpreter, Browser Tool and custom tools you register.

- Observability – Traces, metrics and logs so you can monitor, audit and iterate.

How to Get Started - A Simple Flow

- Refer to the official documentation for setup instructions and best practices.

- Explore the amazon-bedrock-agentcore-samples GitHub repo (runtime, gateway, memory examples).

- Use the SDK & Starter Toolkit to bootstrap an agent.

- Deploy to AgentCore Runtime (CLI or APIs).

- Integrate tools via Gateway to let the agent act (not just chat).

- Configure Memory & Identity (what to remember and who the agent can act for).

- Observe, iterate and improve with telemetry and logs.

Diagram Walkthrough

Source: AWS Builder Center

Let’s walk through the picture step by step so you can see precisely how a real customer support request flows through AgentCore.

Step 1 – Customer sends a question

A user types a query (e.g., “Can you check the warranty on my speaker?”). That request is received by your front end and forwarded to the Customer Support Agent running in AgentCore Runtime.

Step 2 – AgentCore Runtime receives the session

AgentCore Runtime hosts the agent in a serverless, isolated session. Each session is sandboxed so customer data stays private between sessions. The runtime orchestrates the agent’s reasoning, tool calls and memory access.
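To make the idea of a per-session data boundary concrete, here is a minimal sketch in plain Python. AgentCore Runtime enforces isolation for you; the `Session` and `SessionStore` names below are hypothetical and only illustrate that state written in one session is never visible from another.

```python
from dataclasses import dataclass, field
import uuid


@dataclass
class Session:
    """State that belongs to exactly one customer conversation."""
    session_id: str
    history: list[str] = field(default_factory=list)


class SessionStore:
    """Hypothetical in-process stand-in for per-session isolation."""

    def __init__(self) -> None:
        self._sessions: dict[str, Session] = {}

    def create(self) -> Session:
        # Every new conversation gets its own identifier and its own state.
        session = Session(session_id=str(uuid.uuid4()))
        self._sessions[session.session_id] = session
        return session

    def handle(self, session_id: str, message: str) -> int:
        """Record a message in one session and return that session's turn count."""
        session = self._sessions[session_id]  # unknown ids raise KeyError
        session.history.append(message)
        return len(session.history)
```

Because each `Session` owns its own `history` list, a message handled for one session id cannot leak into another.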

Step 3 – Agent invokes an LLM on Amazon Bedrock

When the agent needs to interpret intent or generate natural language, it calls an LLM on Amazon Bedrock (e.g., Titan or Claude). The model helps the agent convert “check warranty” into structured steps: find the product, fetch the customer profile and call the warranty API.
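As a rough illustration of turning a request into structured steps, the sketch below asks a model for a JSON plan and validates it before anything runs. The model call is a stub: `fake_model`, `plan_steps` and the plan format are assumptions made for this example, not the Bedrock API.

```python
import json

# Tools the agent is allowed to plan with (names taken from the diagram).
ALLOWED_TOOLS = {"get_customer_profile", "check_warranty"}


def fake_model(prompt: str) -> str:
    """Stub standing in for a Bedrock model call; returns a canned JSON plan."""
    return json.dumps([
        {"tool": "get_customer_profile", "args": {"customer_id": "C-1"}},
        {"tool": "check_warranty", "args": {"product": "speaker"}},
    ])


def plan_steps(user_message: str, model=fake_model) -> list[dict]:
    """Ask the model for a plan and reject any step that names an unknown tool."""
    steps = json.loads(model(f"Plan tool calls for: {user_message}"))
    for step in steps:
        if step["tool"] not in ALLOWED_TOOLS:
            raise ValueError(f"unknown tool: {step['tool']}")
    return steps
```

Validating the plan against an allow-list keeps the model's output from triggering tools the agent was never given.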

Step 4 – Memory retrieval (AgentCore Memory)

The agent checks AgentCore Memory for session state and previous interactions (short-term memory) or user preferences (long-term). For example, if the user asked about this device last week, the agent can reference that context.
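The split between short-term and long-term memory can be sketched as two stores with different keys. This is an in-memory illustration only; the `AgentMemory` class and its method names are hypothetical and are not the AgentCore Memory API.

```python
from collections import defaultdict


class AgentMemory:
    """Hypothetical two-tier memory: per-session turns plus per-user facts."""

    def __init__(self) -> None:
        self._short_term = defaultdict(list)  # session_id -> recent turns
        self._long_term = defaultdict(dict)   # user_id -> durable facts

    def add_turn(self, session_id: str, text: str) -> None:
        """Short-term: append one conversational turn to the current session."""
        self._short_term[session_id].append(text)

    def remember(self, user_id: str, key: str, value: str) -> None:
        """Long-term: store a fact that should survive across sessions."""
        self._long_term[user_id][key] = value

    def context_for(self, session_id: str, user_id: str, last_n: int = 3) -> dict:
        """Collect what the agent should see before it answers."""
        return {
            "recent_turns": list(self._short_term.get(session_id, []))[-last_n:],
            "user_facts": dict(self._long_term.get(user_id, {})),
        }
```

A fact stored with `remember` last week is still returned for a brand-new session id, while `recent_turns` starts empty for that session.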

Step 5 – Validate identity & permissions (AgentCore Identity + Cognito)

Before the agent makes any sensitive call, its access is validated. AgentCore Identity uses OAuth 2.0 and integrates with identity providers such as Amazon Cognito to authenticate the user and confirm what the agent is permitted to do on their behalf.
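The permission check can be pictured as a scope lookup that denies by default. The sketch assumes the token has already been validated by the identity provider; `AccessToken`, `REQUIRED_SCOPE` and the scope strings are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessToken:
    """Simplified stand-in for an already validated OAuth 2.0 access token."""
    subject: str
    scopes: frozenset


# Hypothetical mapping from tool name to the scope it requires.
REQUIRED_SCOPE = {
    "check_warranty": "warranty:read",
    "get_customer_profile": "profile:read",
}


def authorize(token: AccessToken, tool_name: str) -> bool:
    """Allow a tool call only if the token carries the scope that tool needs."""
    required = REQUIRED_SCOPE.get(tool_name)
    # Tools without a declared scope are denied rather than silently allowed.
    return required is not None and required in token.scopes
```

Denying unknown tools by default is one simple way to keep the agent's permissions narrow.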

Step 6 – Choose and call a tool via AgentCore Gateway

The agent decides which tool to use and routes the call through the AgentCore Gateway. The gateway converts the agent’s tool request into a secure backend call. In the diagram, the gateway invokes an AWS Lambda target, which executes actual business logic.

Example tools shown in the diagram:

- Tool 1: check_warranty() (Lambda) – queries warranty DB

- Tool 2: get_customer_profile() (Lambda) – returns customer details

In the runtime box you might also see retrieve(), create_calendar_event() and get_calendar_event() for scheduling tasks.
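The routing idea behind the Gateway can be sketched as a registry that maps tool names to functions. Everything here is a local stand-in: the `tool` decorator, `call_tool` and the sample data are hypothetical, and the real targets would be Lambda functions behind AgentCore Gateway.

```python
from typing import Callable

# Registry of callable tools, keyed by the name the agent uses.
TOOLS: dict[str, Callable[..., dict]] = {}


def tool(fn: Callable[..., dict]) -> Callable[..., dict]:
    """Register a function under its own name so the agent can call it."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def check_warranty(serial_number: str) -> dict:
    # Made-up data standing in for the warranty database.
    warranty_db = {"SPK-001": "2025-12-31"}
    return {"serial_number": serial_number,
            "valid_until": warranty_db.get(serial_number)}


@tool
def get_customer_profile(customer_id: str) -> dict:
    # Made-up data standing in for the customer service.
    profiles = {"C-1": {"name": "Priya"}}
    return profiles.get(customer_id, {})


def call_tool(name: str, **kwargs) -> dict:
    """Dispatch a tool request by name, failing loudly on unknown tools."""
    if name not in TOOLS:
        raise KeyError(f"no such tool: {name}")
    return TOOLS[name](**kwargs)
```

The agent only ever names a tool and passes arguments; the registry decides which backend function actually runs.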

Step 7 – Lambda returns factual data to the agent

Lambda calls your internal systems (databases, microservices) and returns verified data to the agent via the Gateway. This gives the agent real-world facts, not guesses.

Step 8 – Observability records traces and metrics

Throughout the flow, AgentCore Observability captures traces, logs and metrics. You get end-to-end visibility: what the model produced, which tools were invoked, how long each step took and whether any retries or errors occurred.
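To show what "how long each step took and whether any errors occurred" might look like in data, here is a small tracing sketch. The `Tracer` class is hypothetical and records spans in memory; it is not the AgentCore Observability API.

```python
import time
from contextlib import contextmanager


class Tracer:
    """Hypothetical in-memory tracer that records one span per step."""

    def __init__(self) -> None:
        self.spans: list[dict] = []

    @contextmanager
    def span(self, name: str):
        """Time the wrapped block and note any exception it raised."""
        start = time.perf_counter()
        error = None
        try:
            yield
        except Exception as exc:
            error = repr(exc)
            raise  # the failure is recorded, not swallowed
        finally:
            self.spans.append({
                "name": name,
                "seconds": time.perf_counter() - start,
                "error": error,
            })
```

Wrapping each model call and tool call in `with tracer.span("..."):` would yield an ordered list of steps with durations and errors for later review.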

Step 9 – Agent composes the final response and replies to the customer

Using the data from the tools, context from memory and language capability from Bedrock LLMs, the agent crafts a human-friendly response:

“Hi Priya, your speaker warranty is valid until December 2025. Would you like me to schedule a service pickup?”

The response is sent back to the customer, and the session state is updated in memory.

The Three Pillars of Agent Intelligence

Every production-grade agent relies on these three pillars:

- Memory – continuity and personalization across sessions.

- Gateway – secure, auditable bridge to real systems and actions.

- Identity – authentication and least-privilege authorization for safe operation.

These pillars ensure agents are useful, trustworthy and enterprise-ready.

Common Workflow Patterns Under the Hood

AgentCore supports the common patterns you’ll use when designing agents:

- Prompt Chaining: chaining multiple LLM calls (summarize → refine → act).

- Prompt Routing: dynamically selecting prompts or models for domain-specific queries.

- Parallelization: running independent checks (sentiment, topic extraction) concurrently.

- Orchestration: coordinating chains, tools, error handling, and human-in-the-loop stops.

These patterns enable your agent to be modular, efficient and resilient.
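Three of these patterns can be sketched with ordinary functions. The helpers `chain`, `route` and `run_parallel` are hypothetical names for this example; in a real agent each step would wrap a model or tool call rather than a string function.

```python
from concurrent.futures import ThreadPoolExecutor


def chain(text, steps):
    """Prompt chaining: feed each step's output into the next step."""
    for step in steps:
        text = step(text)
    return text


def route(query, routes, default):
    """Prompt routing: pick the first handler whose keyword appears in the query."""
    lowered = query.lower()
    for keyword, handler in routes.items():
        if keyword in lowered:
            return handler
    return default


def run_parallel(text, checks):
    """Parallelization: run independent checks concurrently and collect results."""
    with ThreadPoolExecutor() as pool:
        futures = {name: pool.submit(fn, text) for name, fn in checks.items()}
        return {name: future.result() for name, future in futures.items()}
```

Orchestration then amounts to combining these helpers with error handling and any human approval steps your workflow needs.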

Practical Tips & Design Considerations

- Session isolation is non-negotiable – ensure data boundaries per user session.

- Memory policy matters – decide what to keep, for how long, and when to expire.

- Least-privilege access – make the agent’s permissions as narrow as possible.

- Observability = trust – log reasoning steps and tool outputs for audits.

- Gateway adapters make your backend “agent-aware” without changing internal APIs.
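The memory-policy tip can be made concrete with a time-to-live rule. This is a minimal sketch under the assumption that expiry is decided by age alone; `ExpiringMemory` is a hypothetical class, and the injectable `clock` exists only so the behavior is easy to test.

```python
import time


class ExpiringMemory:
    """Hypothetical key-value memory whose entries expire after a fixed TTL."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._items = {}  # key -> (value, time stored)

    def put(self, key, value) -> None:
        self._items[key] = (value, self._clock())

    def get(self, key, default=None):
        """Return the stored value, or the default if it is missing or too old."""
        entry = self._items.get(key)
        if entry is None:
            return default
        value, stored_at = entry
        if self._clock() - stored_at > self._ttl:
            del self._items[key]  # forget expired data on access
            return default
        return value
```

Choosing the TTL per kind of data (for example, short for session details and longer for preferences) is one way to decide what to keep and when to expire it.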

Making It Real: Use Cases

- Support agents that read internal knowledge bases and remember past conversations.

- Automation agents that call internal APIs to schedule tasks, raise tickets or generate reports.

- Web-browsing agents that fetch live information when APIs are unavailable.

- Data analysis agents that write and execute code (Code Interpreter) for ad-hoc analysis.

Why AgentCore Matters

Amazon Bedrock AgentCore is more than a framework – it’s the infrastructure that turns LLMs into real, safe, and scalable AI agents. It allows you to focus on the “mind” (agent logic) while AWS provides the “body” (runtime, gateway, memory, identity, observability). That’s how you move generative AI from demos to production-grade systems.

About CloudThat

CloudThat is an award-winning company and the first in India to offer cloud training and consulting services worldwide. As a Microsoft Solutions Partner, AWS Advanced Tier Training Partner, and Google Cloud Platform Partner, CloudThat has empowered over 850,000 professionals through 600+ cloud certifications, winning global recognition for its training excellence, including 20 MCT Trainers in Microsoft’s Global Top 100 and an impressive 12 awards in the last 8 years. CloudThat specializes in Cloud Migration, Data Platforms, DevOps, IoT, and cutting-edge technologies like Gen AI & AI/ML. It has delivered over 500 consulting projects for 250+ organizations in 30+ countries as it continues to empower professionals and enterprises to thrive in the digital-first world.

FAQs

1. How is AgentCore different from plain Bedrock?

ANS: – Bedrock gives you models. AgentCore wraps models into agents with runtime, memory, identity, gateways and observability.

2. What should I start with?

ANS: – Read docs → run the GitHub samples → bootstrap with the SDK → deploy and connect a simple Lambda tool.

3. Is it expensive?

ANS: – Pricing is consumption-based: you pay for what the agent uses.

WRITTEN BY Priya Kanere

Priya Kanere is an AWS Subject Matter Expert and Champion AWS Authorized Instructor at CloudThat, specializing in cloud technologies, Python, data analytics, machine learning and generative AI. With extensive experience in training and mentoring, she has trained over 3,000 professionals to upskill in emerging technologies. Known for simplifying complex concepts through hands-on teaching and connecting theory with real-world applications, she brings deep technical knowledge and practical insights into every learning experience. Priya’s passion for empowering learners is reflected in her unique approach to learning and development.

November 19, 2025
