Overview

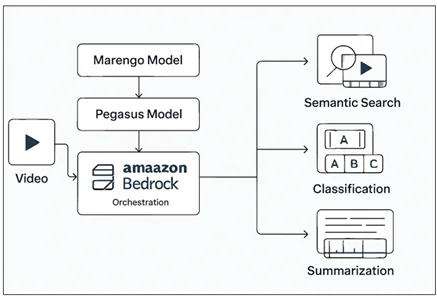

TwelveLabs models use multimodal foundation-model technology to process a video's visual content, audio, and text together, along with the temporal relationships between frames. This combined approach yields a nuanced, contextual understanding that can recognize objects, actions, background noise, and the broader narrative of a video. Two flagship models are available in Amazon Bedrock. Pegasus 1.2 is a video-language model that turns raw video into structured data by generating titles, summaries, hashtags, and detailed reports, making previously opaque video content readable and actionable. Marengo 2.7 quickly produces context-aware embeddings for semantic search, scene classification, and pattern discovery without the need for predefined tags.
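To make "structured data" concrete, here is a minimal sketch, assuming Pegasus has been prompted to answer with a JSON object containing `title`, `summary`, and optionally `hashtags` keys. The `VideoReport` class and `parse_report` function are hypothetical names for illustration, not part of any TwelveLabs or AWS SDK.

```python
import json
from dataclasses import dataclass, field


@dataclass
class VideoReport:
    """Hypothetical container for Pegasus-style structured output."""
    title: str
    summary: str
    hashtags: list[str] = field(default_factory=list)


def parse_report(text: str) -> VideoReport:
    """Parse a JSON report and normalize hashtags to start with '#'.

    Assumes the model was prompted to reply with JSON that has 'title',
    'summary', and optionally 'hashtags' keys.
    """
    data = json.loads(text)
    tags = []
    for tag in data.get("hashtags", []):
        tag = tag.strip()
        if tag:  # drop empty or whitespace-only entries
            tags.append(tag if tag.startswith("#") else f"#{tag}")
    return VideoReport(title=data["title"], summary=data["summary"], hashtags=tags)
```

Keeping the parsed result in a typed record makes it easier to store reports alongside other asset metadata or to validate them before publishing.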

Introduction

The digital landscape is seeing a remarkable increase in video content creation, with more than 720,000 hours of video uploaded to online platforms every day. Video makes up nearly 80% of all internet traffic and is the fastest-growing part of enterprise data, yet it remains one of the hardest types of content for organizations to analyze, search, and gain insights from. Traditional video management systems treat video files as opaque binary objects and rely mainly on metadata, file names, and manual tagging. These methods barely scratch the surface of the rich information inside the videos.

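TwelveLabs' video understanding models in Amazon Bedrock are designed to close this gap. For organizations that adopt them, this change can transform how they operate and serve customers in a world increasingly focused on video.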

Key Capabilities and Benefits

With these models, organizations can:

- Search video with natural language: For example, a query such as "show me every bicycle crash in the race" returns the exact matching scenes.

- Classify scenes and create summaries: Go beyond simple tags to generate useful metadata and insights.

- Extract insights at scale: Review thousands of hours of video to uncover hidden patterns, themes in customer feedback, or trends in product usage.

- Meet enterprise security and scalability requirements: Rely on the reliability and governance controls expected on AWS.

- Analyze multiple modalities and temporal patterns: Combine visual elements, sound, spoken dialogue, and time-based patterns for thorough indexing.
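Natural-language search over video generally works by embedding the text query and each video segment into a shared vector space, then ranking segments by similarity; this is the role the overview assigns to Marengo's embeddings. The sketch below assumes the embeddings have already been retrieved. The `Segment` class and `search` function are hypothetical, and the three-dimensional vectors stand in for real embeddings, which have many more dimensions.

```python
import math
from dataclasses import dataclass


@dataclass
class Segment:
    """Hypothetical video segment with a precomputed embedding."""
    start_sec: float
    end_sec: float
    embedding: list[float]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def search(query_embedding: list[float], segments: list[Segment], top_k: int = 3):
    """Return the top_k (score, segment) pairs, most similar first."""
    scored = [(cosine_similarity(query_embedding, s.embedding), s) for s in segments]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


segments = [
    Segment(0.0, 6.0, [0.9, 0.1, 0.0]),
    Segment(6.0, 12.0, [0.0, 1.0, 0.0]),
    Segment(12.0, 18.0, [0.5, 0.5, 0.0]),
]
for score, seg in search([1.0, 0.0, 0.0], segments, top_k=2):
    print(f"{seg.start_sec:.0f}-{seg.end_sec:.0f}s score={score:.3f}")
```

A linear scan like this is fine for a demo; at the scale of thousands of hours, a vector index would typically replace it.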

How It Works and Typical Workflow

Because the TwelveLabs models are fully managed and serverless within Amazon Bedrock, there is no need for specialized infrastructure or in-house machine learning expertise.

A typical workflow:

- Access the models through Amazon Bedrock: In the AWS Management Console, choose TwelveLabs from the Amazon Bedrock model catalog, then request access to Pegasus 1.2 or Marengo 2.7 in your Region.

- Connect or upload video assets: Store your videos in Amazon S3 or another compatible location.

- Set up workflows:

  - Semantic search: Find actions, events, scenes, or objects in videos using natural language.

  - Classification and summarization: Automatically produce reports, highlights, hashtags, and chapter markers.

- Retrieve and integrate results: Use the returned timestamps, metadata, or summaries in applications for business operations, compliance, analytics, or content production.
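The last step often means turning timestamped results into something people can navigate. As a minimal sketch, assuming results have already been reduced to `(start_sec, label)` pairs sorted by time, the hypothetical `to_chapters` helper below merges consecutive segments that share a label and emits chapter markers in a common `M:SS` / `H:MM:SS` style.

```python
def format_timestamp(seconds: float) -> str:
    """Format a time offset as M:SS, or H:MM:SS for an hour or more."""
    total = int(seconds)
    hours, rem = divmod(total, 3600)
    minutes, secs = divmod(rem, 60)
    if hours:
        return f"{hours}:{minutes:02d}:{secs:02d}"
    return f"{minutes}:{secs:02d}"


def to_chapters(segments: list[tuple[float, str]]) -> list[str]:
    """Merge consecutive segments that share a label into chapter markers."""
    chapters: list[tuple[float, str]] = []
    for start_sec, label in segments:
        # Skip a segment whose label matches the chapter already open.
        if chapters and chapters[-1][1] == label:
            continue
        chapters.append((start_sec, label))
    return [f"{format_timestamp(start)} {label}" for start, label in chapters]


for line in to_chapters([(0, "Intro"), (6, "Intro"), (12, "Safety briefing"), (3725, "Q&A")]):
    print(line)
```

Merging consecutive segments keeps a run of identical labels from producing duplicate chapters; a production pipeline might also enforce a minimum chapter length.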

Example Code: Using TwelveLabs in Amazon Bedrock

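Here's a typical example using the AWS SDK for Python (boto3). It shows how you might call Marengo for video semantic search; the model ID and request fields are illustrative, so confirm them against the Amazon Bedrock documentation before use.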

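```python
import json

import boto3

# Client for the Amazon Bedrock runtime endpoint
client = boto3.client("bedrock-runtime")

# NOTE: the model ID and request fields below are illustrative placeholders;
# check the Amazon Bedrock documentation for the exact Marengo model ID and schema.
request_body = {
    "video_location": "s3://your-bucket/sample-video.mp4",
    "query": "Show me every customer interaction at the register",
}

response = client.invoke_model(
    modelId="twelvelabs.marengo-2.7",
    body=json.dumps(request_body),  # invoke_model expects a JSON string or bytes
    contentType="application/json",
    accept="application/json",
)

# The response body is a stream; read and decode it before use
result = json.loads(response["body"].read())
print(result)
```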
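Pegasus is called in a similar way when the goal is a summary or report rather than search. The sketch below assumes the request fields `inputPrompt`, `mediaSource.s3Location`, and `temperature`, the response field `message`, and the model ID `twelvelabs.pegasus-1-2-v1:0`, drawn from the Amazon Bedrock parameter reference for TwelveLabs models; treat all of them as assumptions and confirm them (including any Region-specific inference profile ID) before use. The helper function names are hypothetical.

```python
import json

# Assumed model ID; some Regions may require an inference profile ID instead.
PEGASUS_MODEL_ID = "twelvelabs.pegasus-1-2-v1:0"


def build_pegasus_request(s3_uri: str, bucket_owner: str, prompt: str,
                          temperature: float = 0.2) -> str:
    """Serialize a Pegasus request body (field names are assumptions)."""
    body = {
        "inputPrompt": prompt,
        "mediaSource": {"s3Location": {"uri": s3_uri, "bucketOwner": bucket_owner}},
        "temperature": temperature,
    }
    return json.dumps(body)


def parse_pegasus_response(raw: bytes) -> str:
    """Extract generated text, assuming the response JSON has a 'message' key."""
    return json.loads(raw)["message"]


def summarize_video(s3_uri: str, bucket_owner: str, prompt: str) -> str:
    """Invoke Pegasus through Bedrock and return the generated text."""
    import boto3  # imported lazily so the helpers above work without AWS access

    client = boto3.client("bedrock-runtime")
    response = client.invoke_model(
        modelId=PEGASUS_MODEL_ID,
        body=build_pegasus_request(s3_uri, bucket_owner, prompt),
        contentType="application/json",
        accept="application/json",
    )
    return parse_pegasus_response(response["body"].read())
```

Separating request building and response parsing from the network call lets you unit-test both without AWS credentials.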

Use Cases in the Real World

- Media & Entertainment: Instantly pull every commentary segment, celebration, or play from sports archives for repackaging.

- Broadcasting: Rapidly locate moments of interest in breaking news footage.

- Public Safety: Accelerate review of incident or surveillance video by searching for “car leaving the scene” or “missing person appearance”.

- Corporate Training & Compliance: Summarize and classify hours of onboarding or safety training video with custom, explainable markers and highlights.

Conclusion

With TwelveLabs' video understanding models on Amazon Bedrock, advanced video search, content summarization, and analytics become accessible to any organization, regardless of technical skill. Backed by AWS's scale, security, and reliability, they unlock significant business value from video assets that previously sat idle. The partnership brings a new era of human-like video understanding for enterprises everywhere.

Drop a query if you have any questions regarding Amazon Bedrock and we will get back to you quickly.

About CloudThat

CloudThat is an award-winning company and the first in India to offer cloud training and consulting services worldwide. As a Microsoft Solutions Partner, AWS Advanced Tier Training Partner, and Google Cloud Platform Partner, CloudThat has empowered over 850,000 professionals through 600+ cloud certifications, winning global recognition for its training excellence, including 20 MCT Trainers in Microsoft's Global Top 100 and an impressive 12 awards in the last 8 years. CloudThat specializes in Cloud Migration, Data Platforms, DevOps, IoT, and cutting-edge technologies like Gen AI & AI/ML. It has delivered over 500 consulting projects for 250+ organizations in 30+ countries as it continues to empower professionals and enterprises to thrive in the digital-first world.

FAQs

1. What are the primary distinctions between Pegasus and Marengo?

ANS: – Marengo specializes in context-aware semantic search and classification by embedding video content. Pegasus is well suited to content automation and discovery because it generates natural language text from videos, including titles, summaries, hashtags, and reports.

2. Does using TwelveLabs in Amazon Bedrock require deep learning experience?

ANS: – No. Users interact with the fully managed service through the Amazon Bedrock console or APIs. There is no AI/ML infrastructure to manage or tune.

3. What is TwelveLabs' approach to data privacy?

ANS: – All data processing uses AWS’s integrated redundancy, privacy, and compliance controls, which are appropriate for business requirements. Unless you specify otherwise, video data never leaves your AWS account.

WRITTEN BY Nekkanti Bindu

Nekkanti Bindu works as a Research Associate at CloudThat, where she channels her passion for cloud computing into meaningful work every day. Fascinated by the endless possibilities of the cloud, Bindu has established herself as an AWS consultant, helping organizations harness the full potential of AWS technologies. A firm believer in continuous learning, she stays at the forefront of industry trends and evolving cloud innovations. With a strong commitment to making a lasting impact, Bindu is driven to empower businesses to thrive in a cloud-first world.

September 22, 2025
