
Overview

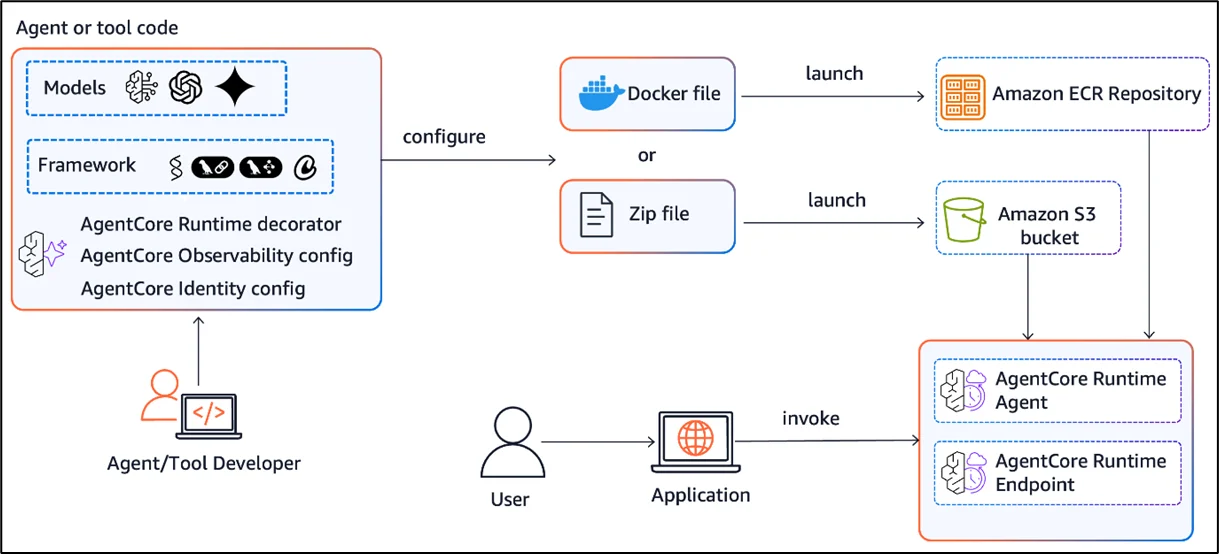

Building, deploying, and managing AI agents at scale often involves navigating complex infrastructure and container management. Amazon Bedrock AgentCore simplifies this process by providing a secure, agentic platform designed for scalable, efficient operations. Its managed service, AgentCore Runtime, provides a low-latency, serverless environment to run agents and tools, supporting multimodal workloads and long-running sessions. Traditionally, deployments relied on container-based setups using Docker and Amazon ECR, which suited organizations with established CI/CD pipelines. However, with the introduction of direct code deployment for Python, developers can now package their agent code and dependencies as a simple zip file, eliminating the need for Docker definitions and container management. This new capability empowers teams to prototype rapidly, streamline iterations, and focus on agent innovation rather than infrastructure overhead.


Introduction

With the container deployment approach, developers write a Dockerfile, build ARM-compatible containers, manage Amazon ECR repositories, and upload a new container for every code update. This works well when container DevOps pipelines are already in place to automate deployments.

Direct code deployment, on the other hand, can save developers significant time and improve productivity for customers who want fully managed deployments. It offers a secure and scalable path for everything from quickly prototyping agent capabilities to running production workloads at scale.

With direct code deployment, developers package their code and dependencies into a zip file, upload it to Amazon S3, and reference the bucket in the agent configuration. The AgentCore starter toolkit simplifies the development experience by handling dependency discovery, packaging, and upload. Direct code deployment is also supported through the AgentCore API.
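The packaging and upload steps can be sketched with Python's standard library plus boto3. This is a minimal sketch, not the starter toolkit's implementation. It assumes that the agent code and its already-installed dependencies sit together in one local directory, compatible with the runtime's ARM-based Linux environment. It also assumes they belong at the root of the archive. The helper names `build_code_zip` and `upload_code_zip`, and the excluded-directory list, are hypothetical.

```python
import io
import zipfile
from pathlib import Path

# Directories assumed to be irrelevant to the deployed agent (hypothetical choice).
EXCLUDED_DIRS = {".venv", "__pycache__", ".git"}


def build_code_zip(source_dir: str) -> bytes:
    """Hypothetical helper: zip every file under source_dir with paths relative to its root."""
    root = Path(source_dir)
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(root.rglob("*")):
            relative = path.relative_to(root)
            # Skip directory entries and anything located inside an excluded directory.
            if path.is_dir() or EXCLUDED_DIRS.intersection(relative.parts):
                continue
            archive.write(path, arcname=relative.as_posix())
    return buffer.getvalue()


def upload_code_zip(zip_bytes: bytes, bucket: str, key: str) -> None:
    """Hypothetical helper: store the package in Amazon S3 (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so packaging works without boto3 installed

    boto3.client("s3").put_object(Bucket=bucket, Key=key, Body=zip_bytes)
```

For example, `upload_code_zip(build_code_zip("build"), "my-agent-bucket", "agent.zip")` would package a local `build` directory and upload it. The directory, bucket, and key are placeholders. Building the archive in memory keeps packaging separate from upload, so you can inspect the archive before anything leaves your machine.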

Let’s take a broad look at how the two approaches differ in terms of deployment steps:

Deployment using containers:

The following actions are involved in the container-based deployment method:

- Create a Dockerfile and build an ARM-compatible container

- Create an Amazon ECR repository

- Push the container image to Amazon ECR

- Deploy to AgentCore Runtime

Deployment using direct code:

The following actions are involved in the direct code deployment method:

- Package your code and dependencies into a zip file

- Upload it to Amazon S3

- Configure the bucket in the agent configuration

- Deploy to AgentCore Runtime

How to use direct code deployment?

Prerequisites:

- Python 3.10 through 3.13, with a package manager of your choice installed

- This example uses the uv package manager

- An AWS account for creating and deploying the agent

- Access to Anthropic's Claude Sonnet 4 model in Amazon Bedrock
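You can confirm the Python prerequisite with a small check script before creating the project. This is a minimal sketch. The helper name `is_supported_python` is hypothetical, and the 3.10 to 3.13 range is taken from the prerequisites above.

```python
import sys

# Supported range for direct code deployment, per the prerequisites above.
SUPPORTED_MIN = (3, 10)
SUPPORTED_MAX = (3, 13)


def is_supported_python(version: tuple = sys.version_info[:2]) -> bool:
    """Return True if the (major, minor) part of version falls inside the supported range."""
    return SUPPORTED_MIN <= tuple(version[:2]) <= SUPPORTED_MAX


if __name__ == "__main__":
    current = ".".join(map(str, sys.version_info[:2]))
    status = "supported" if is_supported_python() else "NOT supported"
    print(f"Python {current} is {status} for direct code deployment")
```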

Step 1: Initialize your project

Using the uv package manager, create a new Python project and open the project directory:

```bash
uv init <project> --python 3.13
cd <project>
```

Step 2: Include the project’s dependencies

Add the Bedrock AgentCore and Strands Agents libraries your agent needs, plus the AgentCore starter toolkit as a development dependency. In this example, uv records the dependencies in the project's pyproject.toml file, but they can also be defined in a requirements.txt file:

```bash
uv add bedrock-agentcore strands-agents strands-agents-tools
uv add --dev bedrock-agentcore-starter-toolkit
source .venv/bin/activate
```

Step 3: Create a file called agent.py

Create the main agent file, which defines the behavior of your AI agent:

```python
from bedrock_agentcore import BedrockAgentCoreApp
from strands import Agent, tool
from strands_tools import calculator
from strands.models import BedrockModel
import logging

app = BedrockAgentCoreApp(debug=True)

# Logging setup
logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)

# Create a custom tool
@tool
def weather():
    """ Get weather """
    return "sunny"

model_id = "us.anthropic.claude-sonnet-4-20250514-v1:0"
model = BedrockModel(
    model_id=model_id,
)

agent = Agent(
    model=model,
    tools=[calculator, weather],
    system_prompt="You're a helpful assistant. You can do simple math calculation, and tell the weather."
)

@app.entrypoint
def invoke(payload):
    """Your AI agent function"""
    user_input = payload.get("prompt", "Hello! How can I help you today?")
    logger.info("\n User input: %s", user_input)
    response = agent(user_input)
    logger.info("\n Agent result: %s ", response.message)
    return response.message['content'][0]['text']

if __name__ == "__main__":
    app.run()
```
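The entrypoint itself only reads `prompt` from the payload, falls back to a default greeting, and returns the first text block of `response.message`. You can check that payload handling locally without calling Amazon Bedrock by swapping in a stub agent. This is a sketch: `FakeResult`, `make_invoke`, and `echo_agent` are hypothetical names. The message shape mirrors only what `invoke()` in agent.py reads, not the full Strands result type.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict


@dataclass
class FakeResult:
    """Stand-in for an agent result: only the .message attribute that invoke() reads."""
    message: Dict[str, Any] = field(default_factory=dict)


def make_invoke(agent: Callable[[str], FakeResult]) -> Callable[[dict], str]:
    """Build an entrypoint with the same payload handling as invoke() in agent.py."""
    def invoke(payload: dict) -> str:
        user_input = payload.get("prompt", "Hello! How can I help you today?")
        response = agent(user_input)
        return response.message["content"][0]["text"]
    return invoke


def echo_agent(prompt: str) -> FakeResult:
    """Hypothetical stub agent that echoes the prompt instead of calling a model."""
    return FakeResult(message={"role": "assistant", "content": [{"text": f"echo: {prompt}"}]})


invoke = make_invoke(echo_agent)
print(invoke({"prompt": "How is the weather today?"}))  # echo: How is the weather today?
print(invoke({}))  # echo: Hello! How can I help you today?
```

Injecting the agent as a parameter is the design choice that makes this testable. The real module builds its agent at import time, so a stub cannot easily replace it there.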

Step 4: Deploy to AgentCore Runtime

Set up and launch your agent in the AgentCore Runtime environment:

```bash
agentcore configure --entrypoint agent.py --name <agent-name>
```

This starts an interactive session in which you select a deployment type and the Amazon S3 bucket that the zip deployment package is uploaded to, as shown in the following configuration prompt. Select option 1, Code Zip, to use direct code deployment.

Deployment Configuration

Select deployment type:

1. Code Zip (recommended) – Simple, serverless, no Docker required

2. Container – For custom runtimes or complex dependencies

After the configuration is complete, deploy the agent:

```bash
agentcore launch
```

This command creates the zip deployment package and uploads it to the designated Amazon S3 bucket. It then deploys the agent to AgentCore Runtime, where it is ready to receive and process requests.

Let’s ask the agent to check the weather to test the solution:

```bash
agentcore invoke '{"prompt":"How is the weather today?"}'
```
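Outside the starter toolkit, the deployed agent can also be called from Python. The sketch below relies on the boto3 `bedrock-agentcore` client's `invoke_agent_runtime` operation with `agentRuntimeArn`, `runtimeSessionId`, and `payload` parameters, as we understand the AWS SDK. Please verify it against the current boto3 documentation before relying on it. `build_invocation` and `invoke_deployed_agent` are hypothetical helpers, and you supply the runtime ARN of your deployed agent.

```python
import json
import uuid
from typing import Optional


def build_invocation(agent_runtime_arn: str, prompt: str, session_id: Optional[str] = None) -> dict:
    """Hypothetical helper: assemble keyword arguments for invoke_agent_runtime."""
    return {
        "agentRuntimeArn": agent_runtime_arn,
        # Reuse a session ID to keep follow-up requests in one session; a UUID4 string has 36 characters.
        "runtimeSessionId": session_id or str(uuid.uuid4()),
        # Same JSON body that the `agentcore invoke` example above sends.
        "payload": json.dumps({"prompt": prompt}).encode("utf-8"),
    }


def invoke_deployed_agent(agent_runtime_arn: str, prompt: str) -> str:
    """Call the deployed agent (requires boto3, AWS credentials, and a real runtime ARN)."""
    import boto3  # imported lazily so build_invocation works without boto3

    client = boto3.client("bedrock-agentcore")
    response = client.invoke_agent_runtime(**build_invocation(agent_runtime_arn, prompt))
    # The body arrives as a stream. It may be a JSON-encoded string, so json.loads can be applied if needed.
    return response["response"].read().decode("utf-8")
```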

The first deployment takes about 30 seconds, but future updates to the agent should take less than half that time due to the simplified direct code deployment procedure, enabling quicker development iteration cycles.

Conclusion

Direct code deployment in Amazon Bedrock AgentCore Runtime streamlines the path from development to production by eliminating the complexity of container creation and management.

This capability not only accelerates innovation but also reduces operational overhead, making it easier to adapt and evolve agent behaviors to meet business needs.

Drop a query if you have any questions regarding Amazon Bedrock AgentCore and we will get back to you quickly.


About CloudThat

CloudThat is an award-winning company and the first in India to offer cloud training and consulting services worldwide. As a Microsoft Solutions Partner, AWS Advanced Tier Training Partner, and Google Cloud Platform Partner, CloudThat has empowered over 850,000 professionals through 600+ cloud certifications, winning global recognition for its training excellence, including 20 MCT Trainers in Microsoft’s Global Top 100 and an impressive 12 awards in the last 8 years. CloudThat specializes in Cloud Migration, Data Platforms, DevOps, IoT, and cutting-edge technologies like Gen AI & AI/ML. It has delivered over 500 consulting projects for 250+ organizations in 30+ countries as it continues to empower professionals and enterprises to thrive in the digital-first world.

FAQs

1. How is direct code deployment different from container deployment?

ANS: – It lets you upload code as a ZIP file to Amazon S3, without Docker or Amazon ECR, making deployment faster and simpler.

2. Which languages are supported?

ANS: – Currently, Python (versions 3.10–3.13) is supported for direct code deployment.

3. When should I use direct code deployment?

ANS: – Use it for quick prototyping or simpler workloads; choose containers for complex dependencies or custom runtimes.

WRITTEN BY Aayushi Khandelwal

Aayushi is a data and AIoT professional at CloudThat, specializing in generative AI technologies. She is passionate about building intelligent, data-driven solutions powered by advanced AI models. With a strong foundation in machine learning, natural language processing, and cloud services, Aayushi focuses on developing scalable systems that deliver meaningful insights and automation. Her expertise includes working with tools like Amazon Bedrock, AWS Lambda, and various open-source AI frameworks.


February 9, 2026
