Introduction

Enterprise search has evolved from basic keyword matching to AI-powered systems that interpret meaning as well as words. With the rise of generative AI and retrieval-augmented generation (RAG) applications, search has become an integral part of application design.

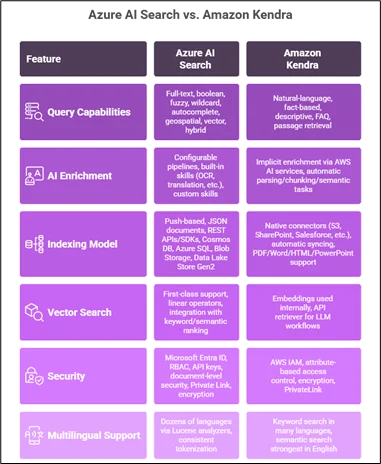

This blog post compares Azure AI Search and Amazon Kendra on features, architecture, pricing, and ecosystem fit to help developers choose the right platform for their projects.

Key Features

Query Capabilities and Search Modes

- Azure AI Search supports full-text search, boolean queries, fuzzy matching, wildcard queries, autocomplete, geospatial search, vector search, and hybrid (keyword + vector) search.

- It is built on Apache Lucene, providing access to mature analyzers, tokenizers, and scoring profiles.

- Semantic ranking is available in higher tiers to improve relevance by understanding query intent and context.

- Amazon Kendra is optimized for natural-language queries rather than structured query syntax.

- It excels at fact-based questions, descriptive queries, FAQ matching, and passage-level retrieval.

- Kendra automatically infers query intent and context using deep learning–based ranking models.
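To make the contrast concrete, here is a minimal sketch of how the two query styles might look as request payloads. It only builds the payloads and does not call either service. The field names `search`, `queryType`, `searchMode`, and `top` follow the Azure AI Search REST query body, and `IndexId` and `QueryText` follow the boto3 Kendra `query` call; the helper names, the sample term, and the index ID are hypothetical.

```python
import json


def azure_fuzzy_query(term: str, max_edits: int = 1, top: int = 5) -> dict:
    """Build a body for POST /indexes/{index}/docs/search (full Lucene syntax).

    Fuzzy matching applies to a single term, written as term~N.
    """
    if not 0 <= max_edits <= 2:
        raise ValueError("Lucene fuzzy search allows an edit distance of 0 to 2")
    return {
        "search": f"{term}~{max_edits}",
        "queryType": "full",   # enables Lucene operators such as ~ and *
        "searchMode": "all",   # every term must match
        "top": top,
    }


def kendra_question(index_id: str, question: str) -> dict:
    """Build keyword arguments for the boto3 Kendra client's query call."""
    return {"IndexId": index_id, "QueryText": question}


if __name__ == "__main__":
    # Hypothetical inputs, for illustration only.
    print(json.dumps(azure_fuzzy_query("restorant"), indent=2))
    print(kendra_question("my-index-id", "How do I reset my VPN password?"))
```

The Azure payload carries explicit syntax choices, while the Kendra call is just an index and a plain-language question, which mirrors the difference described above.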

AI Enrichment and Content Understanding

- Azure AI Search offers configurable AI-enrichment pipelines with skillsets.

- Built-in skills include OCR, language detection, translation, entity recognition, key phrase extraction, and image analysis.

- Custom skills can also be implemented via Azure Functions for domain-related processing.

- Azure AI Search can chunk and vectorize text during ingestion, storing the embeddings in the index for semantic search and RAG applications.

- Amazon Kendra enriches data implicitly using AWS AI services, such as Textract and Comprehend.

- Parsing, chunking, and semantic processing are also performed automatically.

- It provides relevance tuning capabilities using boosting, synonyms, and incremental learning with low configuration requirements.
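Chunking is the step that most directly affects RAG quality, so a small illustration may help. The sketch below splits text into fixed-size character windows with overlap. It is a simplified stand-in for the idea, not the implementation used by either service, and the function name and default sizes are assumptions.

```python
def chunk_text(text: str, max_chars: int = 200, overlap: int = 40) -> list[str]:
    """Split text into windows of up to max_chars that overlap by `overlap` characters."""
    if max_chars <= 0:
        raise ValueError("max_chars must be positive")
    if not 0 <= overlap < max_chars:
        raise ValueError("overlap must be >= 0 and smaller than max_chars")

    chunks: list[str] = []
    step = max_chars - overlap  # how far the window advances each time
    for start in range(0, len(text), step):
        chunks.append(text[start:start + max_chars])
        if start + max_chars >= len(text):
            break  # the current window already reaches the end of the text
    return chunks


if __name__ == "__main__":
    for chunk in chunk_text("abcdefghij", max_chars=4, overlap=1):
        print(chunk)
```

The overlap keeps a sentence that straddles a boundary visible in both neighbouring chunks. With Azure AI Search you configure this kind of behaviour yourself in the enrichment pipeline, whereas Amazon Kendra makes the equivalent decisions internally.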

Indexing Model and Data Source Integration

- Azure AI Search provides push-based indexing of JSON documents through its REST APIs and SDKs.

- Pull-based indexers are available for Azure data sources such as Cosmos DB, Azure SQL Database, Azure Blob Storage, and Azure Data Lake Storage Gen2.

- Integration with non-Azure systems typically requires Logic Apps or custom ingestion pipelines.

- All indexed data must ultimately be represented as JSON documents.

- Amazon Kendra provides a range of native connectors, including Amazon S3, SharePoint, Salesforce, ServiceNow, Google Drive, Confluence, and MongoDB Atlas.

- After setup, Amazon Kendra can automatically sync data and extract content from PDF, Word, HTML, and PowerPoint documents.

- Both platforms allow custom ingestion through APIs.
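As a rough illustration of push-based indexing, the sketch below groups documents into request bodies for the Azure AI Search "index documents" REST operation (POST /indexes/{index}/docs/index). The `value` array and the `@search.action` field come from that API. The helper name and sample documents are hypothetical, and the 1,000-document batch limit should be checked against current documentation.

```python
from typing import Iterable, Iterator

VALID_ACTIONS = {"upload", "merge", "mergeOrUpload", "delete"}
MAX_BATCH = 1000  # assumed per-request document limit; verify in the current docs


def index_batches(
    docs: Iterable[dict],
    action: str = "mergeOrUpload",
    batch_size: int = MAX_BATCH,
) -> Iterator[dict]:
    """Yield request bodies, each holding at most batch_size documents."""
    if action not in VALID_ACTIONS:
        raise ValueError(f"unknown action: {action}")
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")

    batch: list[dict] = []
    for doc in docs:
        # Each document carries its own action alongside its fields.
        batch.append({"@search.action": action, **doc})
        if len(batch) == batch_size:
            yield {"value": batch}
            batch = []
    if batch:
        yield {"value": batch}  # final, possibly smaller batch


if __name__ == "__main__":
    sample = [{"id": str(i), "title": f"Doc {i}"} for i in range(5)]
    for body in index_batches(sample, batch_size=2):
        print(len(body["value"]), "documents in this request")
```

Each yielded body would be sent in its own HTTP request. With Amazon Kendra's native connectors, this batching and syncing is handled by the service instead.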

Vector Search and Hybrid Retrieval

- Azure AI Search treats vector search as a first-class feature.

- Developers control embedding generation, chunking, similarity algorithms, and ranking strategies.

- Vector similarity can be combined with keyword relevance and semantic ranking to achieve hybrid retrieval.

- This makes Azure particularly strong in semantic search, document similarity, and RAG-based chat applications.

- Amazon Kendra uses embeddings under the hood but does not expose vector indexes to developers.

- Instead, it offers a Retrieve API that returns the most relevant document passages for LLM-based workflows.

- This abstraction makes usage easier but reduces low-level control compared to Azure AI Search.
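The hybrid retrieval described above can be sketched as a single Azure AI Search request body that carries both a keyword query and a vector query. The `vectorQueries`, `kind`, `vector`, `fields`, `k`, `queryType`, and `semanticConfiguration` fields follow the REST query body as documented for API version 2023-11-01 and later, so verify them against the version you target. The field name `contentVector`, the configuration name, and the tiny embedding are placeholders.

```python
from typing import Optional, Sequence


def hybrid_query(
    text: str,
    embedding: Sequence[float],
    vector_field: str = "contentVector",  # hypothetical index field name
    k: int = 5,
    semantic_config: Optional[str] = None,
) -> dict:
    """Build a search body combining keyword and vector retrieval."""
    if not embedding:
        raise ValueError("embedding must not be empty")
    body = {
        "search": text,  # keyword part of the hybrid query
        "vectorQueries": [
            {
                "kind": "vector",
                "vector": list(embedding),
                "fields": vector_field,
                "k": k,  # nearest neighbours to fetch from the vector index
            }
        ],
        "top": k,
    }
    if semantic_config:
        # Optionally rerank the fused results with the semantic ranker.
        body["queryType"] = "semantic"
        body["semanticConfiguration"] = semantic_config
    return body


if __name__ == "__main__":
    # A real embedding has hundreds of dimensions; three values keep this readable.
    print(hybrid_query("vacation policy", [0.1, 0.2, 0.3], semantic_config="default"))
```

In practice the embedding would come from the same model used to vectorize the index. Amazon Kendra has no equivalent payload because the vector layer is not exposed.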

Multilingual and Language Support

- Azure AI Search supports dozens of languages through Lucene language analyzers.

- It provides consistent tokenization and linguistic processing for multilingual applications.

- Amazon Kendra supports many languages for keyword-based search.

- Its most advanced semantic and question-answering capabilities are strongest in English.

- Developers should validate language support based on current documentation and use cases.

Technical Architecture

Azure AI Search uses an index-first architecture where documents are ingested, enriched, tokenized, and stored in inverted and optional vector indexes. AI skillsets transform content during ingestion, enabling semantic and RAG-based search. In modern agentic patterns, an LLM can decompose complex queries, orchestrate searches across indexes, and synthesize responses. Azure exposes full control through REST APIs and SDKs for .NET, Java, Python, and JavaScript.

Amazon Kendra is a fully managed, ML-driven search service. Content is ingested via connectors or APIs into managed indexes with provisioned query and storage capacity. Amazon Kendra automatically interprets queries, ranks passages, and generates answers using integrated ML models, with developers primarily interacting through configuration and unified APIs rather than custom pipelines.
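A minimal sketch of that API-centric workflow, assuming the boto3 Kendra `retrieve` call and its `ResultItems` response shape (`DocumentTitle` and `Content` per item). The helpers below only prepare the call arguments and turn a response into prompt context; the sample response is fabricated for illustration, and no AWS request is made.

```python
def retrieve_kwargs(index_id: str, question: str, page_size: int = 5) -> dict:
    """Keyword arguments for the boto3 Kendra client's retrieve call."""
    return {"IndexId": index_id, "QueryText": question, "PageSize": page_size}


def build_context(response: dict, max_chars: int = 2000) -> str:
    """Join retrieved passages into one context string, within a character budget."""
    parts: list[str] = []
    used = 0
    for item in response.get("ResultItems", []):
        title = item.get("DocumentTitle", "untitled")
        passage = f"[{title}] {item.get('Content', '')}".strip()
        if used + len(passage) > max_chars:
            break  # stop before exceeding the budget for the LLM prompt
        parts.append(passage)
        used += len(passage)
    return "\n\n".join(parts)


if __name__ == "__main__":
    # Illustrative response only; a real one would come from
    # boto3.client("kendra").retrieve(**retrieve_kwargs(...)).
    fake_response = {
        "ResultItems": [
            {"DocumentTitle": "VPN guide", "Content": "Reset via portal."},
            {"DocumentTitle": "HR handbook", "Content": "Leave policy details."},
        ]
    }
    print(build_context(fake_response))
```

The resulting string can be placed into an LLM prompt. The ranking and passage selection happen inside Kendra, so the application code stays small.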

Both services are fully managed and highly available. Azure emphasizes modular separation of indexing, enrichment, and querying, while Kendra focuses on tightly integrated, low-configuration ML pipelines, with horizontal scaling handled through capacity units.

Pricing Models

Azure AI Search is priced per search unit, each providing a fixed amount of storage and query throughput at a flat hourly rate. Adding replicas or partitions to scale increases cost, and certain AI enrichments incur additional charges. A limited free tier is available for development, but there is no free production tier.
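As a back-of-the-envelope illustration, billable search units in Azure AI Search are the product of replicas and partitions. The sketch below estimates a monthly bill from that product. The hourly rate is a made-up placeholder rather than a published price, and 730 hours per month is a common approximation.

```python
HOURS_PER_MONTH = 730  # approximation: 8,760 hours per year / 12


def search_units(replicas: int, partitions: int) -> int:
    """Billable search units are replicas multiplied by partitions."""
    if replicas < 1 or partitions < 1:
        raise ValueError("replicas and partitions must each be at least 1")
    return replicas * partitions


def monthly_cost(replicas: int, partitions: int, hourly_rate_per_unit: float) -> float:
    """Estimate the monthly cost for a given topology and hourly unit rate."""
    return search_units(replicas, partitions) * hourly_rate_per_unit * HOURS_PER_MONTH


if __name__ == "__main__":
    # 0.50 is a hypothetical hourly rate, not a real Azure price.
    print(search_units(3, 2), "search units")
    print(monthly_cost(3, 2, 0.50), "estimated monthly cost at the placeholder rate")
```

Because the units multiply, adding a replica to a multi-partition service costs more than adding one to a single-partition service, which is worth modelling before scaling out.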

Amazon Kendra follows a capacity-based pricing model with multiple editions. Each index includes baseline query and storage capacity, with additional capacity units required for larger scale. Pricing is hourly, connector syncs add usage-based charges, and while a developer trial exists, production usage can become costly at scale.

Performance, Integration, and Ecosystem

Both services deliver low-latency, high-throughput search when properly provisioned. Azure scales via additional search units, while Amazon Kendra scales through query and storage units. Monitoring is integrated with Azure Monitor and Amazon CloudWatch, respectively.

Ecosystem alignment is a key differentiator. Azure AI Search integrates tightly with Azure OpenAI, Azure AI services, Synapse, Logic Apps, and the Power Platform. Amazon Kendra integrates natively with Amazon Bedrock, Amazon Q, AWS Lambda, and conversational services like Amazon Lex. Both platforms offer robust SDKs and REST APIs for enterprise application integration.

Conclusion

Azure AI Search and Amazon Kendra are both mature, powerful enterprise search platforms, but they cater to different development styles and priorities. Azure AI Search is ideal for teams that want deep customization, explicit control over AI enrichment and vector search, and tight integration with the Azure AI ecosystem. Amazon Kendra is well-suited for organizations that want rapid deployment, strong natural-language Q&A capabilities, and minimal operational overhead, especially when working with common enterprise SaaS data sources on AWS.

FAQs

1. Which service is better for RAG (Retrieval-Augmented Generation) and LLM-based applications?

ANS: – Azure AI Search is generally the better choice for RAG-heavy and LLM-centric architectures because it offers first-class vector search, hybrid retrieval (keyword + vector), and tight integration with Azure OpenAI. Developers have full control over embedding generation, chunking, similarity algorithms, and ranking strategies. Amazon Kendra can also be used in RAG workflows via its Retrieve API, but it abstracts most vector and ranking logic, which makes it better suited to simpler, managed RAG scenarios than to highly customized pipelines.

2. When should a developer choose Amazon Kendra over Azure AI Search?

ANS: – Amazon Kendra is a strong choice when the primary goal is to enable enterprise-grade natural-language search and Q&A quickly and with minimal setup. It is particularly effective when working with common enterprise content sources such as SharePoint, Salesforce, or Confluence, thanks to its native connectors. Teams that prioritize speed of implementation, reduced operational complexity, and out-of-the-box semantic understanding, especially within the AWS ecosystem, often prefer Amazon Kendra over Azure AI Search.

WRITTEN BY Shantanu Singh

Shantanu Singh is a Research Associate at CloudThat with expertise in Data Analytics and Generative AI applications. Driven by a passion for technology, he has chosen data science as his career path and is committed to continuous learning. Shantanu enjoys exploring emerging technologies to enhance both his technical knowledge and interpersonal skills. His dedication to work, eagerness to embrace new advancements, and love for innovation make him a valuable asset to any team.

February 5, 2026
