
Introduction

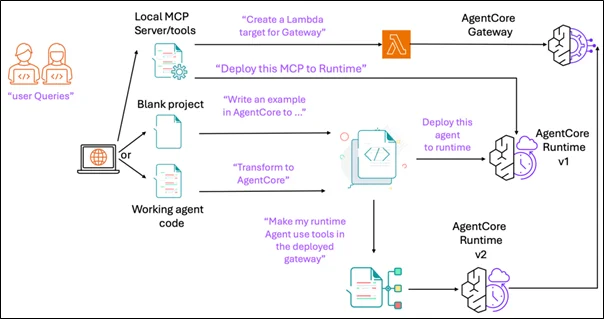

Building production-grade AI agents has always been tricky. Developers wrestle with infrastructure complexity, spend weeks on custom integrations, and face steep learning curves that delay deployment. Amazon Bedrock AgentCore, paired with the Model Context Protocol, changes this entire equation. What previously took engineering teams weeks to build can now happen through simple conversational commands, dramatically accelerating how organizations deploy intelligent agents.


Amazon Bedrock AgentCore: Your Agent Platform

Think of AgentCore as fully managed infrastructure for building and running AI agents at scale. Instead of building everything from scratch, you get ready-to-use components that handle the heavy lifting. Each session runs in isolation, so different users never see each other’s data. The platform supports both quick, real-time interactions and long-running workloads of up to 8 hours, along with enterprise security features such as Amazon VPC connectivity.

Four of the platform’s core services matter most here. Runtime deploys and scales your agents automatically. Gateway turns your existing APIs and AWS Lambda functions into tools that agents can use, with native MCP server support. Memory gives agents both short-term recall for ongoing conversations and long-term storage for persistent knowledge. Observability lets you monitor what your agents are doing in production, so you can catch issues before they affect users.

Model Context Protocol: A Universal Standard

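Anthropic introduced MCP in November 2024 to address a fundamental problem: every AI application needed custom integration code for each data source or tool it wanted to access. With M applications and N data sources, that meant up to M × N bespoke integrations, a burden that grew quickly as organizations adopted more tools and connected more systems. MCP provides a single standardized way for AI systems to connect with data sources and tools: each application implements the client side once and each data source implements the server side once, so the count drops to roughly M + N (five applications and ten data sources need 15 implementations instead of 50). The effect is similar to how USB-C removed the need for a different cable for every device.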

The protocol operates on a client-server model using JSON-RPC messages. Servers expose three types of capabilities: Prompts act as instruction templates, Resources provide structured data for context, and Tools are functions that AI models can execute. Any MCP-compliant client can communicate with any MCP-compliant server without custom integration work.
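To make the three capability types concrete, here is a minimal, self-contained sketch of a server-side JSON-RPC 2.0 dispatcher. The method names `tools/list`, `resources/list`, `prompts/list`, and `tools/call` follow the MCP specification, but everything else (the `add` tool, the `docs://readme` resource, the `code_review` prompt, and the `handle` function) is hypothetical. A real server would be built with an MCP SDK rather than hand-rolled dispatch.

```python
import json

# Hypothetical capabilities exposed by one toy server.
TOOLS = {
    "add": {
        "description": "Add two integers",
        "inputSchema": {
            "type": "object",
            "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
            "required": ["a", "b"],
        },
        "fn": lambda args: args["a"] + args["b"],
    }
}
RESOURCES = [{"uri": "docs://readme", "name": "README"}]
PROMPTS = [{"name": "code_review", "description": "Review a code snippet"}]


def _reply(req_id, **body) -> str:
    """Serialize a JSON-RPC 2.0 response carrying either a result or an error."""
    return json.dumps({"jsonrpc": "2.0", "id": req_id, **body})


def handle(raw: str) -> str:
    """Dispatch one JSON-RPC 2.0 request string and return the response string."""
    req = json.loads(raw)
    req_id, method, params = req.get("id"), req.get("method"), req.get("params", {})
    if method == "tools/list":
        # Advertise name, description and schema, but never the Python callable.
        tools = [{"name": n, "description": t["description"], "inputSchema": t["inputSchema"]}
                 for n, t in TOOLS.items()]
        return _reply(req_id, result={"tools": tools})
    if method == "resources/list":
        return _reply(req_id, result={"resources": RESOURCES})
    if method == "prompts/list":
        return _reply(req_id, result={"prompts": PROMPTS})
    if method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            # -32602 is the JSON-RPC 2.0 "Invalid params" code.
            return _reply(req_id, error={"code": -32602, "message": "Unknown tool"})
        value = tool["fn"](params.get("arguments", {}))
        # Tool results are returned as a list of typed content items.
        return _reply(req_id, result={"content": [{"type": "text", "text": str(value)}]})
    # -32601 is the JSON-RPC 2.0 "Method not found" code.
    return _reply(req_id, error={"code": -32601, "message": f"Method not found: {method}"})


if __name__ == "__main__":
    request = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
               "params": {"name": "add", "arguments": {"a": 2, "b": 3}}}
    print(handle(json.dumps(request)))
```

Because the client only relies on the standard method names, any compliant client could discover and call this server's `add` tool without server-specific code.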

Technical Architecture and Capabilities

AWS released the Amazon AgentCore MCP Server in October 2025 with integrated support for runtime operations, gateway connectivity, identity management, and memory systems. This server specifically targets the friction points that slow down agent development cycles.

Amazon AgentCore’s architecture requires stateless HTTP servers, which might seem limiting until you understand the trade-off. Stateless design enables horizontal scaling because any server instance can handle any request. Session continuity comes from the Mcp-Session-Id header, which the Runtime automatically adds to requests. Servers can use this header to fetch session-specific data, for example from AgentCore Memory, while the transport layer itself stays stateless. This pattern matters for enterprise deployments that must absorb unpredictable load.
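The stateless pattern can be illustrated in plain Python: two server objects share nothing except an external store, so a request for the same session can land on either one. The `StatelessServer` class and the dict-based store are hypothetical stand-ins; a real deployment would serve MCP over HTTP and keep session data in an external service rather than in process memory.

```python
from typing import Dict, List


class StatelessServer:
    """A server instance that keeps no per-session state of its own.

    All session data lives in an external store (a stand-in here for a service
    such as AgentCore Memory), looked up by the Mcp-Session-Id header.
    """

    def __init__(self, store: Dict[str, List[str]]):
        self.store = store  # shared by every instance; injected, not owned

    def handle(self, headers: Dict[str, str], message: str) -> str:
        # HTTP header names are case-insensitive, so normalize before lookup.
        normalized = {k.lower(): v for k, v in headers.items()}
        session_id = normalized.get("mcp-session-id")
        if session_id is None:
            return "error: missing Mcp-Session-Id header"
        history = self.store.setdefault(session_id, [])  # fetch session state
        history.append(message)                           # write the update back
        return f"session {session_id} has {len(history)} message(s)"


if __name__ == "__main__":
    shared_store: Dict[str, List[str]] = {}
    # Two instances behind a load balancer: either can serve either request.
    a, b = StatelessServer(shared_store), StatelessServer(shared_store)
    print(a.handle({"Mcp-Session-Id": "s1"}, "hello"))
    print(b.handle({"Mcp-Session-Id": "s1"}, "follow-up"))
```

The second call is served by a different instance yet still sees the first message, which is exactly what lets a load balancer route requests freely.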

Protocol Implementation and Message Flow

Amazon AgentCore MCP uses JSON-RPC 2.0 for communication: each tool invocation is sent as an independent request carrying its own id, so its response can be tracked and matched to it. The MCP server executes the call and returns a structured response, which the Runtime relays back to the agent. Because no request depends on the server remembering earlier ones, communication scales statelessly, while session identifiers preserve each user’s context across calls.
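On the client side, the id is what makes each call trackable. The sketch below builds `tools/call` requests with unique ids and pairs responses back to them even when they arrive out of order. The helper names `make_tool_call` and `match_responses` are hypothetical; only the JSON-RPC 2.0 envelope fields and the MCP `tools/call` method name come from the specifications.

```python
import itertools
import json
from typing import Any, Dict, Iterable, Tuple

_next_id = itertools.count(1)  # monotonically increasing request ids


def make_tool_call(name: str, arguments: Dict[str, Any]) -> Dict[str, Any]:
    """Build an independent JSON-RPC 2.0 request for an MCP tools/call."""
    return {"jsonrpc": "2.0", "id": next(_next_id), "method": "tools/call",
            "params": {"name": name, "arguments": arguments}}


def match_responses(requests: Iterable[Dict[str, Any]],
                    responses: Iterable[Dict[str, Any]]) -> Dict[int, Tuple[str, Any]]:
    """Pair responses with their requests by id; arrival order does not matter."""
    sent = {r["id"] for r in requests}
    outcome: Dict[int, Tuple[str, Any]] = {}
    for resp in responses:
        rid = resp.get("id")
        if rid not in sent:
            continue  # not a reply to anything we sent
        if "error" in resp:
            outcome[rid] = ("error", resp["error"]["message"])
        else:
            outcome[rid] = ("ok", resp["result"])
    return outcome


if __name__ == "__main__":
    call = make_tool_call("add", {"a": 2, "b": 3})
    print(json.dumps(call))  # what goes over the wire
```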

Resource and Tool Registration Patterns

MCP servers expose three main primitives within Amazon AgentCore’s orchestration layer: Resources, Tools, and Prompts.

Resources are accessible data sources, such as databases, files, or APIs, available as static or dynamic content.

Tools are executable functions defined with JSON Schemas for inputs and outputs; the Gateway validates these and manages execution, timeouts, and retries for both quick and long-running tasks.
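As a rough illustration of schema-based input checking, the function below validates tool arguments against a small subset of JSON Schema (`required`, per-property `type`, and `additionalProperties: false`). `validate_args` is a hypothetical helper, not the Gateway’s validator; a production system would use a complete JSON Schema implementation.

```python
from typing import Any, Dict, List

# JSON Schema primitive type names mapped to the Python types that satisfy them.
_TYPES = {"string": str, "integer": int, "number": (int, float),
          "boolean": bool, "object": dict, "array": list}


def validate_args(schema: Dict[str, Any], args: Dict[str, Any]) -> List[str]:
    """Return a list of problems; an empty list means the arguments passed.

    Covers only `required`, per-property `type`, and `additionalProperties: false`.
    """
    errors: List[str] = []
    for field in schema.get("required", []):
        if field not in args:
            errors.append(f"missing required field '{field}'")
    for field, value in args.items():
        spec = schema.get("properties", {}).get(field)
        if spec is None:
            if schema.get("additionalProperties") is False:
                errors.append(f"unexpected field '{field}'")
            continue
        expected = _TYPES.get(spec.get("type"))
        # bool is a subclass of int in Python, so reject it for numeric types.
        is_bool_as_number = isinstance(value, bool) and spec.get("type") in ("integer", "number")
        if expected and (not isinstance(value, expected) or is_bool_as_number):
            errors.append(f"field '{field}' should be {spec['type']}")
    return errors
```

Rejecting bad arguments before execution means a malformed call fails fast with a readable message instead of surfacing as an error deep inside the tool.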

Prompts serve as reusable templates that agents can invoke with parameters, such as generating context-specific code reviews.
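A parameterized prompt can be sketched with Python’s `string.Template`. The `code_review` prompt, its argument names, and the `get_prompt` helper are hypothetical; the point is that the template is defined once on the server and agents fill in the parameters at call time.

```python
from string import Template
from typing import Dict

# Hypothetical prompt registry; names and wording are illustrative only.
PROMPTS = {
    "code_review": {
        "arguments": ["language", "focus", "code"],
        "template": Template("Review the following $language code. Focus on $focus.\n\n$code"),
    }
}


def get_prompt(name: str, arguments: Dict[str, str]) -> str:
    """Render a named prompt template with caller-supplied arguments."""
    prompt = PROMPTS[name]
    missing = [a for a in prompt["arguments"] if a not in arguments]
    if missing:
        raise ValueError(f"missing prompt arguments: {missing}")
    # Only the template is parsed for $-placeholders; argument values are inserted verbatim.
    return prompt["template"].substitute(arguments)


if __name__ == "__main__":
    print(get_prompt("code_review", {"language": "Python",
                                     "focus": "error handling",
                                     "code": "x = int(input())"}))
```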

The Gateway simplifies setup by automatically discovering and registering all available primitives, while streaming handlers enable efficient processing of large datasets without memory strain.
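The memory benefit of streaming can be illustrated with a Python generator that yields fixed-size batches, so only one batch is held in memory at a time. The `stream_batches` and `summarize` functions and the default batch size are illustrative assumptions, not the Gateway’s actual streaming mechanism.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def stream_batches(rows: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Yield successive batches from any iterable without materializing it."""
    if batch_size < 1:
        raise ValueError("batch_size must be positive")
    it = iter(rows)
    while batch := list(islice(it, batch_size)):
        yield batch


def summarize(rows: Iterable[int], batch_size: int = 1000) -> dict:
    """Compute count and total over a large stream, one batch at a time."""
    count = total = 0
    for batch in stream_batches(rows, batch_size):
        count += len(batch)
        total += sum(batch)
    return {"count": count, "total": total}


if __name__ == "__main__":
    # A generator source: the million rows never exist in memory all at once.
    print(summarize(i for i in range(1_000_000)))
```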

Layered Context Architecture

Amazon AgentCore’s documentation approach uses four layers that build on each other progressively. The first layer is your development environment, such as Kiro, Amazon Q Developer CLI, Claude Code, or Cursor. Layer two provides comprehensive AWS service documentation through a dedicated MCP server. Layer three covers the documentation for your chosen framework, whether that’s Strands, LangGraph, or another option. The fourth layer delivers SDK-specific documentation for the Bedrock AgentCore APIs.

This hierarchy enables progressive disclosure of complexity. Your agent might start with a general question about AWS capabilities in layer two, then drill down to specific SDK method signatures in layer four. The MCP server caches frequently accessed documentation fragments to reduce latency on repeated queries while maintaining freshness through configurable time-to-live policies.
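A cache with a configurable time-to-live, of the kind described above, might look like the sketch below. The `TTLCache` class, its injectable clock, and the `fetch` callback are illustrative assumptions, not the MCP server’s actual code.

```python
import time
from typing import Callable, Dict, Tuple


class TTLCache:
    """Cache fetched documentation fragments for a configurable time-to-live."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so tests can control time
        self._entries: Dict[str, Tuple[float, str]] = {}  # key -> (expires_at, value)

    def get(self, key: str, fetch: Callable[[str], str]) -> str:
        """Return a cached value, or call fetch(key) when missing or expired."""
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and now < entry[0]:
            return entry[1]  # fresh hit: skip the slow lookup
        value = fetch(key)
        self._entries[key] = (now + self.ttl, value)
        return value
```

A shorter TTL keeps answers closer to the latest documentation at the cost of more fetches; a longer one favors latency.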

Source – Link

Real-World Applications and Use Cases

Accelerated Agent Development

The Amazon AgentCore MCP server automates much of the agent development process. Rather than adapting your code for deployment by hand, you can ask it to transform an existing agent, and it walks through the required steps, such as importing the runtime library, updating dependencies, and initializing the app with BedrockAgentCoreApp(). Your core logic stays untouched, so you can focus on building intelligent behavior while AgentCore manages deployment and infrastructure.
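For orientation, the entrypoint pattern from the `bedrock-agentcore` Python SDK starter examples looks roughly like the sketch below, as we understand it: the agent logic in `answer()` stays a plain function and only a thin wrapper is added. To keep the sketch runnable without the SDK installed, it falls back to a clearly labeled stand-in class. `answer()` and the `prompt` payload key are illustrative assumptions.

```python
# Your existing agent logic: a plain function the wrapper does not change.
def answer(prompt: str) -> str:
    return f"You asked: {prompt}"


try:
    from bedrock_agentcore.runtime import BedrockAgentCoreApp
except ImportError:
    # Stand-in so this sketch runs without the SDK; NOT the real class.
    class BedrockAgentCoreApp:
        def entrypoint(self, func):
            self.handler = func
            return func

        def run(self):
            print("SDK not installed; the real app would start an HTTP server here")


app = BedrockAgentCoreApp()


@app.entrypoint
def invoke(payload: dict) -> dict:
    """Runtime entrypoint: unpack the request payload and call the core logic."""
    return {"result": answer(payload.get("prompt", ""))}


if __name__ == "__main__":
    app.run()
```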

Production Deployment Patterns

You can deploy agents with the Amazon AgentCore CLI without leaving your MCP client: the MCP server runs the necessary CLI commands for you, handling containerization, security configuration, and endpoint creation. Manual infrastructure work drops to near zero, while you keep full control over agent behavior and resource allocation.

After deployment, you can add additional capabilities. Connect tools through the AgentCore Gateway for external system access. Integrate Memory services for conversation context. Configure Identity management for access control. Add advanced framework features from LangGraph, CrewAI, or other supported platforms.

Technical Advantages and Performance Benefits

Pairing Amazon AgentCore with MCP chiefly improves developer efficiency. Because MCP is a standard interface, developers can build, modify, and deploy agents from their preferred environment using natural-language commands, without switching tools.

The stateless HTTP architecture fits naturally behind AWS load balancing, since any instance can serve any request, and the Runtime supports both low-latency interactive requests and long-running tasks of up to eight hours.

Adoption by industry leaders such as OpenAI and Google DeepMind further signals MCP’s maturity and its growing role as a common standard for AI agent development.

Conclusion

Amazon AgentCore with MCP simplifies AI agent development, cutting infrastructure setup that once took weeks down to minutes of conversational commands.

As MCP adoption grows across the ecosystem, enterprises gain increasingly powerful tools for deploying context-aware AI agents at scale.



About CloudThat

CloudThat is an award-winning company and the first in India to offer cloud training and consulting services worldwide. As a Microsoft Solutions Partner, AWS Advanced Tier Training Partner, and Google Cloud Platform Partner, CloudThat has empowered over 850,000 professionals through 600+ cloud certifications, winning global recognition for its training excellence, including 20 MCT Trainers in Microsoft’s Global Top 100 and an impressive 12 awards in the last 8 years. CloudThat specializes in Cloud Migration, Data Platforms, DevOps, IoT, and cutting-edge technologies like Gen AI & AI/ML. It has delivered over 500 consulting projects for 250+ organizations in 30+ countries as it continues to empower professionals and enterprises to thrive in the digital-first world.

FAQs

1. How does MCP differ from traditional integrations?

ANS: – Traditional APIs require custom code for each integration. MCP offers a single standard protocol, drastically reducing complexity while supporting streaming, structured resources, and reusable prompts.

2. Can Amazon AgentCore work with non-AWS frameworks?

ANS: – Yes. Amazon AgentCore supports multiple ecosystems, including LangGraph, CrewAI, LlamaIndex, and OpenAI’s SDKs. Any framework packaged as an ARM64 container can run within Amazon AgentCore Runtime.

3. What security measures should enterprises apply?

ANS: – Use Amazon VPC connectivity, enforce AWS IAM and OAuth for authentication, and leverage Amazon AgentCore’s session isolation to prevent data leakage. Regularly audit tool permissions and use the new Service-Linked Role for simplified credential management.

WRITTEN BY Parth Sharma

Parth works as a Subject Matter Expert at CloudThat. He has been involved in a variety of AI/ML projects and has a growing interest in machine learning, deep learning, generative AI, and cloud computing. With a practical approach to problem-solving, Parth focuses on applying AI to real-world challenges while continuously learning to stay current with evolving technologies and methodologies.


November 6, 2025
