Overview

Organizations today face a growing challenge: processing vast quantities of audio data, from customer calls and meetings to podcasts and voice memos, and extracting business insights from it efficiently. Automatic Speech Recognition (ASR) is the first step in this journey, converting speech to text for downstream analytics. However, ASR workloads are compute-intensive, which makes them complex and costly to scale. This post explores a practical solution: deploying NVIDIA Parakeet ASR models on Amazon SageMaker AI asynchronous endpoints, powered by NVIDIA NIM containers. This approach supports cost-optimized audio transcription pipelines that can handle large files and scale as business demand fluctuates.

Introduction

NVIDIA Speech AI Technology: Parakeet ASR and Riva

At the heart of the solution lies the Parakeet ASR model family from NVIDIA, which delivers high-performance speech recognition with low word error rates thanks to its Fast Conformer encoder architecture. This design enables inference up to 2.4 times faster than standard Conformer models while preserving transcription accuracy. Parakeet ASR is part of a broad suite of speech technologies offered by NVIDIA and can be fine-tuned for specific languages, accents, and industry domains. Developers also benefit from NVIDIA NIM, a collection of GPU-accelerated microservices that simplify model deployment by shipping each model as a container with all necessary dependencies.

The architecture combines Parakeet ASR, the NVIDIA Riva toolkit for speech AI, and the NIM microservices approach. This allows organizations to deliver accurate transcription and natural-sounding voices across more than 36 languages, making the models suitable for applications in customer service, accessibility compliance, and media analytics. Customization enables improved brand alignment and domain adaptation, while interoperability with LLMs and retrieval-augmented generation makes Parakeet well suited to agentic AI use cases.

Solution Architecture

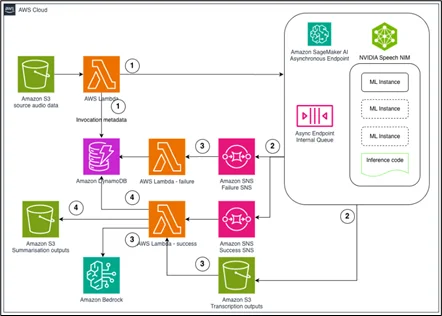

The solution features a scalable, event-driven pipeline leveraging AWS managed services for robustness and cost control:

- Audio files are ingested via Amazon S3, triggering an AWS Lambda function that initiates metadata extraction and orchestrates the workflow.

- Amazon SageMaker asynchronous endpoints host the Parakeet ASR model, scaling down to zero instances when idle (given an auto scaling policy with a minimum capacity of zero) and delivering results as jobs complete.

- Amazon SNS notifications are used to communicate processing outcomes (success or failure) and trigger downstream workflows.

- Transcription results are stored in Amazon S3 and further processed using Amazon Bedrock’s LLMs for summarization, classification, or insights extraction.

- Amazon DynamoDB tracks workflow status and metadata, enabling real-time monitoring and analytics across the audio processing pipeline.
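The ingestion step at the top of this pipeline can be sketched as a Lambda handler. This is a minimal sketch under stated assumptions, not the implementation behind this post: the endpoint name `parakeet-asr-async`, the `ASR_ENDPOINT_NAME` environment variable, and the `audio/wav` content type are all hypothetical. It relies on the boto3 `sagemaker-runtime` client's `invoke_endpoint_async` call, which takes an S3 `InputLocation` instead of an inline payload and returns the S3 `OutputLocation` where the result will be written.

```python
import os
import urllib.parse
import uuid

# Hypothetical endpoint name; set ASR_ENDPOINT_NAME in the Lambda configuration.
ENDPOINT_NAME = os.environ.get("ASR_ENDPOINT_NAME", "parakeet-asr-async")


def build_async_requests(event):
    """Turn an S3 event notification into invoke_endpoint_async keyword arguments."""
    requests = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        requests.append(
            {
                "EndpointName": ENDPOINT_NAME,
                "InputLocation": f"s3://{bucket}/{key}",
                "ContentType": "audio/wav",  # assumed audio format
                "InferenceId": str(uuid.uuid4()),  # lets later stages correlate results
            }
        )
    return requests


def lambda_handler(event, context):
    # Imported here so build_async_requests can be exercised without AWS access.
    import boto3

    runtime = boto3.client("sagemaker-runtime")
    submitted = []
    for params in build_async_requests(event):
        response = runtime.invoke_endpoint_async(**params)
        submitted.append(
            {
                "inference_id": params["InferenceId"],
                "output_location": response["OutputLocation"],
            }
        )
    return {"submitted": submitted}
```

The `InferenceId` and `OutputLocation` pair is what a tracking table such as the DynamoDB one described above would typically store, so that the SNS success or failure message can be matched back to the original upload.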

Deployment Options

Deployment is flexible, supporting three main container strategies:

- NVIDIA NIM Container: A high-performance, containerized solution supporting dual HTTP and gRPC protocols for optimized processing. The endpoint automatically routes smaller files through HTTP (fast, low latency), while larger or advanced-feature jobs use gRPC, supporting speaker diarization and timestamping.

- AWS Large Model Inference (LMI) Container: Pre-configured Docker images optimized for model parallelism, quantization, and efficient batching, suitable for especially large models or research-oriented deployment.

- SageMaker PyTorch Container: For users who want a fully custom environment, SageMaker PyTorch DLC allows for direct packaging of Hugging Face models or your own artifacts, with configuration options for memory, timeout, and parallelism tailored to workload requirements.

Each deployment method is designed for rapid scaling, high throughput, and comprehensive error handling. The NIM container implementation is particularly notable for its intelligent routing system, which selects the most suitable protocol based on file size and requested features, ensuring optimal resource utilization and performance. The design also includes advanced audio preprocessing, concurrent ASR and speaker identification, and robust output structuring with confidence scores and speaker attribution, all returned in standardized JSON format for easy integration.
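The routing rule described above can be illustrated with a short sketch. The post does not state the actual size cutoff or how the NIM container implements the decision, so the 25 MB threshold and the names `TranscriptionJob` and `choose_protocol` are hypothetical; only the rule itself (small, plain jobs over HTTP; large or advanced-feature jobs over gRPC) comes from the text.

```python
from dataclasses import dataclass

# Assumed cutoff for illustration only; the source does not give a value.
HTTP_MAX_BYTES = 25 * 1024 * 1024


@dataclass(frozen=True)
class TranscriptionJob:
    size_bytes: int
    diarization: bool = False  # speaker diarization requested
    timestamps: bool = False   # timestamps requested


def choose_protocol(job, http_max_bytes=HTTP_MAX_BYTES):
    """Return "http" for small plain jobs and "grpc" for everything else."""
    if job.diarization or job.timestamps:
        # Advanced features are served over gRPC regardless of file size.
        return "grpc"
    if job.size_bytes > http_max_bytes:
        return "grpc"
    return "http"
```

For example, `choose_protocol(TranscriptionJob(size_bytes=2_000_000))` selects HTTP, while the same file with `diarization=True` selects gRPC.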

Conclusion

Hosting NVIDIA Parakeet ASR models on Amazon SageMaker AI with NIM containers provides a powerful and scalable solution for audio transcription.

Whether deployed for customer service, internal meetings, media production, or compliance workflows, the solution is robust, flexible, and enterprise-ready. Teams can tailor deployments to specific requirements using NIM, LMI, or PyTorch containers, all supported by detailed AWS documentation and automation scripts for rapid onboarding.

Drop a query if you have any questions regarding NVIDIA Parakeet ASR models and we will get back to you quickly.

About CloudThat

CloudThat is an award-winning company and the first in India to offer cloud training and consulting services worldwide. As a Microsoft Solutions Partner, AWS Advanced Tier Training Partner, and Google Cloud Platform Partner, CloudThat has empowered over 850,000 professionals through 600+ cloud certifications. It has won global recognition for its training excellence, including 20 MCT Trainers in Microsoft’s Global Top 100 and 12 awards in the last 8 years. CloudThat specializes in Cloud Migration, Data Platforms, DevOps, IoT, and cutting-edge technologies like Gen AI & AI/ML. It has delivered over 500 consulting projects for 250+ organizations in 30+ countries as it continues to empower professionals and enterprises to thrive in the digital-first world.

FAQs

1. What are the main benefits of using NVIDIA Parakeet ASR with Amazon SageMaker AI asynchronous endpoints?

ANS: –

- High accuracy, low word error rate transcriptions.

- Fully managed, auto-scaling infrastructure.

- The ability to handle large files and batch workloads efficiently.

- Flexible protocol support (HTTP/gRPC) and advanced diarization features.
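The auto-scaling behavior is something the endpoint owner configures through Application Auto Scaling. The sketch below builds the arguments for the boto3 `application-autoscaling` calls `register_scalable_target` and `put_scaling_policy`, tracking the `ApproximateBacklogSizePerInstance` metric that SageMaker publishes for asynchronous endpoints. The variant name `AllTraffic`, the instance cap, the backlog target of 5, and the cooldowns are illustrative assumptions, not values from this post.

```python
def scalable_target_params(endpoint_name, variant_name="AllTraffic", max_instances=2):
    """Arguments for register_scalable_target; MinCapacity=0 permits scale-to-zero."""
    return {
        "ServiceNamespace": "sagemaker",
        "ResourceId": f"endpoint/{endpoint_name}/variant/{variant_name}",
        "ScalableDimension": "sagemaker:variant:DesiredInstanceCount",
        "MinCapacity": 0,
        "MaxCapacity": max_instances,  # assumed cap
    }


def backlog_policy_params(endpoint_name, variant_name="AllTraffic", backlog_per_instance=5.0):
    """Arguments for put_scaling_policy, tracking the queued requests per instance."""
    return {
        "PolicyName": f"{endpoint_name}-backlog-tracking",  # hypothetical name
        "ServiceNamespace": "sagemaker",
        "ResourceId": f"endpoint/{endpoint_name}/variant/{variant_name}",
        "ScalableDimension": "sagemaker:variant:DesiredInstanceCount",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            "TargetValue": backlog_per_instance,  # assumed target
            "CustomizedMetricSpecification": {
                "MetricName": "ApproximateBacklogSizePerInstance",
                "Namespace": "AWS/SageMaker",
                "Dimensions": [{"Name": "EndpointName", "Value": endpoint_name}],
                "Statistic": "Average",
            },
            "ScaleInCooldown": 300,   # seconds, assumed
            "ScaleOutCooldown": 300,  # seconds, assumed
        },
    }


def apply_scaling(endpoint_name):
    # Imported here so the parameter builders can be inspected without AWS access.
    import boto3

    client = boto3.client("application-autoscaling")
    client.register_scalable_target(**scalable_target_params(endpoint_name))
    client.put_scaling_policy(**backlog_policy_params(endpoint_name))
```

When the endpoint sits at zero instances, the first queued request has no running instance to report a per-instance backlog, so deployments commonly add a second policy on the `HasBacklogWithoutCapacity` metric to bring the first instance up; that policy is omitted here for brevity.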

2. What input formats are supported by the NIM deployment?

ANS: – Multipart form data (recommended for compatibility), base64 JSON, and raw binary uploads are all accepted, enabling integration with a variety of source systems.
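As a rough illustration of how a client might prepare each of these three formats, the sketch below builds a `(content_type, body)` pair per format using only the Python standard library. The JSON key `audio`, the form field name `file`, and the `application/octet-stream` content type are hypothetical; check the container's documentation for the names it actually expects.

```python
import base64
import json
import uuid


def as_base64_json(audio, field="audio"):
    """Wrap audio bytes in a JSON document as a base64 string (field name assumed)."""
    encoded = base64.b64encode(audio).decode("ascii")
    return "application/json", json.dumps({field: encoded}).encode("utf-8")


def as_raw_binary(audio):
    """Send the audio bytes unchanged (content type assumed)."""
    return "application/octet-stream", audio


def as_multipart(audio, filename="audio.wav", field="file"):
    """Encode the audio as a single-part multipart/form-data body (field name assumed)."""
    boundary = uuid.uuid4().hex
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="{field}"; filename="{filename}"\r\n'
        "Content-Type: application/octet-stream\r\n\r\n"
    ).encode("utf-8")
    tail = f"\r\n--{boundary}--\r\n".encode("utf-8")
    return f"multipart/form-data; boundary={boundary}", head + audio + tail
```

Base64 JSON inflates the payload by roughly a third, which is one reason a binary-safe format such as multipart or raw upload tends to be preferred for large audio files.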

3. Can teams deploy open-source models instead of NIM containers?

ANS: – Yes, organizations can use Hugging Face models or other open-source alternatives, packaging them for Amazon SageMaker or Amazon EKS if preferred.

WRITTEN BY Sridhar Andavarapu

Sridhar Andavarapu is a Senior Research Associate at CloudThat, specializing in AWS, Python, SQL, data analytics, and Generative AI. He has extensive experience in building scalable data pipelines, interactive dashboards, and AI-driven analytics solutions that help businesses transform complex datasets into actionable insights. Passionate about emerging technologies, Sridhar actively researches and shares knowledge on AI, cloud analytics, and business intelligence. Through his work, he strives to bridge the gap between data and strategy, enabling enterprises to unlock the full potential of their analytics infrastructure.

November 6, 2025
