Data is one of the most valuable assets in today's world, and collecting and managing it is a basic requirement in almost every field. Data consists of the facts and statistics collected during business operations; it can be used to measure or record business activities, whether internal or external. In this post, we stream logs to an Amazon S3 bucket so that no log data is lost.

Amazon Kinesis Firehose captures data from web applications, sensors, mobile applications, and various other sources and streams it into Amazon S3 or Amazon Redshift. Kinesis Firehose takes care of monitoring, scaling, and managing the data delivery.

[showhide type=”diagram” more_text=”Show Diagram” less_text=”Hide Diagram” hidden=”yes”]

Fluentd is an open-source data collector that unifies data collection and consumption, making the data easier to use and understand.

td-agent is the stable, lightweight server-agent distribution of Fluentd, installed on the machine where the data is generated. td-agent is a data collection daemon: it collects data from various sources and uploads it to a destination such as the Treasure Data store.


Here, we are going to stream Nginx logs to S3 using td-agent (a logging agent) through Kinesis Firehose, a managed service for streaming data to S3 or Redshift.

[showhide type=”fluent_iam_role” more_text=”Click here for the role” less_text=”Hide Details” hidden=”yes”]

The IAM role "fluentd" has the following policy:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "s3:Get*",
        "s3:List*",
        "s3:Put*",
        "s3:Post*"
      ],
      "Resource": [
        "arn:aws:s3:::YOUR_BUCKET_NAME/logs/*",
        "arn:aws:s3:::YOUR_BUCKET_NAME"
      ]
    }
  ]
}
```

[/showhide]
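In this pipeline Fluentd calls the Kinesis Firehose API rather than writing to S3 itself. The S3 permissions above are the kind the Firehose delivery stream's own role needs to write to the bucket, while the identity Fluentd runs as generally needs permission to put records into the stream. The Python sketch below builds such a policy as JSON. It assumes `firehose:PutRecord` and `firehose:PutRecordBatch` are the actions the output plugin calls, and the account ID `123456789012` is a placeholder; the region and stream name match the td-agent configuration used later in this post. `firehose_writer_policy` is a hypothetical helper.

```python
import json
from typing import Any, Dict


def firehose_writer_policy(region: str, account_id: str, stream_name: str) -> Dict[str, Any]:
    """Build an IAM policy that lets a principal put records into one delivery stream."""
    stream_arn = f"arn:aws:firehose:{region}:{account_id}:deliverystream/{stream_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # Assumed to cover what a Firehose output plugin calls:
                # single-record and batched writes.
                "Action": ["firehose:PutRecord", "firehose:PutRecordBatch"],
                "Resource": [stream_arn],
            }
        ],
    }


if __name__ == "__main__":
    # 123456789012 is a placeholder account ID; region and stream name
    # are the ones used in td-agent.conf.
    policy = firehose_writer_policy("us-west-2", "123456789012", "incoming-stream")
    print(json.dumps(policy, indent=2))
```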

First, create a Kinesis Firehose delivery stream using the AWS Management Console. For more information, see https://aws.amazon.com/kinesis/firehose/

[showhide type=”firehose” more_text=”For Detailed Instructions..” less_text=”Hide Details” hidden=”yes”]

When creating the Kinesis Firehose delivery stream, select the destination for the streamed data: either an S3 bucket or Redshift.

Next, configure the buffer size, buffer interval, and compression options for the stream.

Set Buffer size to 5 (MB) and Buffer interval to 300 (seconds).

Kinesis Firehose buffers incoming data until it reaches 5 MB or 300 seconds have passed, whichever happens first, and then delivers the buffered data to the destination.

[/showhide]
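To make the "whichever happens first" rule concrete, here is a simplified Python model of the buffering hints. It is only an approximation of Firehose's behavior: it assumes the interval is measured from the first record in the current buffer and treats 1 MB as 1,048,576 bytes. `simulate_flushes` is a hypothetical helper, not an AWS API.

```python
from typing import List, Sequence, Tuple

MB = 1024 * 1024  # assumption: treat 1 MB as 1 MiB


def simulate_flushes(
    records: Sequence[Tuple[float, int]],
    size_mb: float = 5,
    interval_s: float = 300,
) -> List[Tuple[float, int, str]]:
    """Return (flush_time, bytes_flushed, reason) for each simulated delivery.

    records holds (arrival_time_seconds, size_bytes) pairs sorted by time.
    """
    flushes: List[Tuple[float, int, str]] = []
    buffer_bytes = 0
    buffer_start = None  # arrival time of the first record in the buffer
    for arrived, size in records:
        # Interval condition: the buffer aged out before this record arrived.
        if buffer_start is not None and arrived - buffer_start >= interval_s:
            flushes.append((buffer_start + interval_s, buffer_bytes, "interval"))
            buffer_bytes, buffer_start = 0, None
        if buffer_start is None:
            buffer_start = arrived
        buffer_bytes += size
        # Size condition: the buffer reached the size hint.
        if buffer_bytes >= size_mb * MB:
            flushes.append((arrived, buffer_bytes, "size"))
            buffer_bytes, buffer_start = 0, None
    # Whatever is left is delivered once its interval expires.
    if buffer_start is not None:
        flushes.append((buffer_start + interval_s, buffer_bytes, "interval"))
    return flushes
```

For example, `simulate_flushes([(0, 1000), (100, 1000), (400, 1000)])` returns `[(300, 2000, 'interval'), (700, 1000, 'interval')]`: small log lines trickle in, so the 300-second interval triggers each delivery long before 5 MB accumulate.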

Fluentd is available as a Ruby gem (`gem install fluentd`). Treasure Data also packages it, with all of its dependencies, as td-agent.

[showhide type=”td-agent” more_text=”For Detailed Instructions..” less_text=”Hide Details” hidden=”yes”]

Here, we proceed with td-agent.


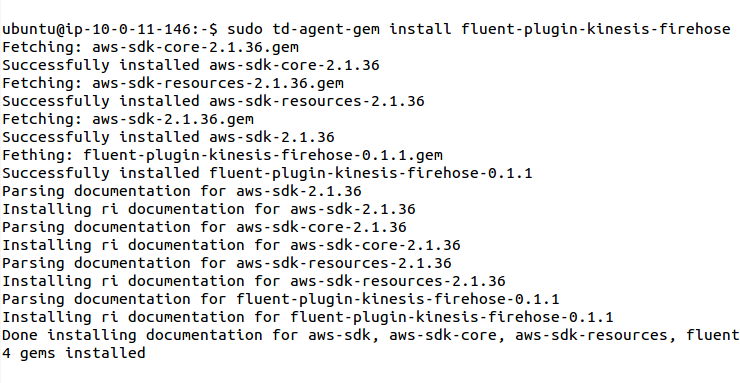

Next, install fluent-plugin-kinesis-firehose, a Fluentd output plugin for Kinesis Firehose. It pushes the logs into the Kinesis Firehose delivery stream, which delivers them to the destination:

```shell
sudo td-agent-gem install fluent-plugin-kinesis-firehose
```

[/showhide]
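The plugin sends records to Firehose in batches. As an illustration only (this is not the plugin's code), the sketch below groups newline-terminated JSON records into batches that respect the documented PutRecordBatch limits of 500 records and 4 MiB per call, and 1,000 KiB per record. `encode_event` and `batch_records` are hypothetical names, and the one-JSON-object-per-line encoding is an assumption.

```python
import json
from typing import Iterable, Iterator, List

MAX_RECORDS_PER_CALL = 500             # PutRecordBatch: records per request
MAX_BYTES_PER_CALL = 4 * 1024 * 1024   # PutRecordBatch: bytes per request (4 MiB)
MAX_BYTES_PER_RECORD = 1000 * 1024     # Firehose: bytes per record (1,000 KiB)


def encode_event(event: dict) -> bytes:
    """Serialize one log event as a newline-terminated JSON line (assumed format)."""
    return (json.dumps(event, separators=(",", ":")) + "\n").encode("utf-8")


def batch_records(
    records: Iterable[bytes],
    max_records: int = MAX_RECORDS_PER_CALL,
    max_bytes: int = MAX_BYTES_PER_CALL,
) -> Iterator[List[bytes]]:
    """Group encoded records into batches that stay within both per-call limits."""
    batch: List[bytes] = []
    batch_bytes = 0
    for rec in records:
        if len(rec) > min(MAX_BYTES_PER_RECORD, max_bytes):
            raise ValueError(f"record of {len(rec)} bytes is too large to send")
        # Start a new batch if adding this record would break either limit.
        if batch and (len(batch) >= max_records or batch_bytes + len(rec) > max_bytes):
            yield batch
            batch, batch_bytes = [], 0
        batch.append(rec)
        batch_bytes += len(rec)
    if batch:
        yield batch
```

With 1,200 short log lines, `batch_records` yields batches of 500, 500, and 200 records; each batch would correspond to one PutRecordBatch call.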

The td-agent configuration file is located at `/etc/td-agent/td-agent.conf`.

[showhide type=”agent_conf” more_text=”For Detailed Instructions..” less_text=”Hide Details” hidden=”yes”]

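Copy the following contents into the file, replacing the `XXXXXXXXXXXX` placeholders with your AWS access key and secret key:

```
# Tail the Nginx access log and parse each line with the built-in nginx format
<source>
  @type tail
  format nginx
  path /var/log/nginx/access.log
  # Remembers the last read position across td-agent restarts
  pos_file /var/log/td-agent/nginx-access.log.pos
  tag nginx.access
</source>

# Send every event (any tag) to the Kinesis Firehose delivery stream
<match **>
  @type kinesis_firehose
  delivery_stream_name incoming-stream
  aws_key_id XXXXXXXXXXXX
  aws_sec_key XXXXXXXXXXXX
  region us-west-2
  flush_interval 1s
</match>
```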

[/showhide]
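`format nginx` tells the tail input to split each access-log line into named fields. The Python sketch below approximates that parsing for Nginx's default combined log format; the regular expression is modeled on Fluentd's built-in nginx pattern but is not guaranteed to match it exactly, and `parse_nginx_line` is a hypothetical helper.

```python
import re
from typing import Dict, Optional

# Approximates the fields of Fluentd's built-in "nginx" format for Nginx's
# default "combined" log format; not guaranteed identical to Fluentd's regex.
NGINX_LINE = re.compile(
    r'^(?P<remote>[^ ]*) (?P<host>[^ ]*) (?P<user>[^ ]*) '
    r'\[(?P<time>[^\]]*)\] '
    r'"(?P<method>\S+)(?: +(?P<path>[^"]*?)(?: +\S*)?)?" '
    r'(?P<code>[^ ]*) (?P<size>[^ ]*)'
    r'(?: "(?P<referer>[^"]*)" "(?P<agent>[^"]*)")?$'
)


def parse_nginx_line(line: str) -> Optional[Dict[str, Optional[str]]]:
    """Return the named fields of one access-log line, or None if it does not match."""
    match = NGINX_LINE.match(line.rstrip("\n"))
    return match.groupdict() if match else None
```

A line written during the `ab` load test, for instance, would split into `remote`, `method`, `path`, `code`, `size`, `referer`, and `agent` fields; lines that do not fit the pattern return `None`.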

Next, start the td-agent service.

[showhide type=”start_agent” more_text=”For Detailed Instructions..” less_text=”Hide Details” hidden=”yes”]

Start the service using the following command:

```shell
sudo /etc/init.d/td-agent start
```

Use the command below to generate log entries. `ab` (ApacheBench) is a simple load-testing tool; this command sends 1000 requests to Nginx, with 10 requests running concurrently.

```shell
ab -n 1000 -c 10 https://localhost/
```

[/showhide]
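If `ab` is not installed, a rough Python equivalent of `ab -n 1000 -c 10` can be built from the standard library alone. This sketch assumes Nginx answers plain HTTP at the URL you pass; with HTTPS and a self-signed certificate, urllib's certificate verification would fail. `run_load` and `fetch_status` are hypothetical names.

```python
import urllib.error
import urllib.request
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Dict


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Issue one GET request and return its HTTP status code."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            resp.read()  # read the body so the request completes fully
            return resp.status
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses are still requests, so Nginx still logs them.
        return err.code


def run_load(url: str, total: int = 1000, concurrency: int = 10) -> Dict[int, int]:
    """Send `total` GET requests, `concurrency` at a time; count responses by status."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        statuses = pool.map(lambda _: fetch_status(url), range(total))
        return dict(Counter(statuses))
```

Calling `run_load("http://localhost/", total=1000, concurrency=10)` (the URL is an assumption about your Nginx setup) sends the same volume of traffic as the `ab` command and returns a dict mapping each status code to the number of responses that had it.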

Check that the logs are being delivered to the S3 bucket.
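By default, Firehose writes objects under a UTC time-based prefix of the form `YYYY/MM/DD/HH/`, placed after any plain custom prefix configured on the stream. The Python helper below computes where a given moment's logs should land. The `logs/` prefix is an assumption based on the bucket path in the IAM policy above, and this default layout applies only when the prefix contains no custom expressions; `expected_s3_prefix` is a hypothetical helper.

```python
from datetime import datetime, timezone


def expected_s3_prefix(custom_prefix: str, when: datetime) -> str:
    """Return the key prefix Firehose's default UTC time layout would use for `when`."""
    if when.tzinfo is None:
        raise ValueError("pass a timezone-aware datetime")
    utc = when.astimezone(timezone.utc)
    # Default layout: YYYY/MM/DD/HH/ in UTC, appended to the custom prefix.
    return f"{custom_prefix}{utc:%Y/%m/%d/%H}/"
```

For 13:00 UTC on 10 October 2023 this gives `logs/2023/10/10/13/`, which you could then list with `aws s3 ls s3://YOUR_BUCKET_NAME/logs/2023/10/10/13/`.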


[/showhide]

NOTE: Because of buffering, it might take up to 10 minutes for data to appear in your bucket. Make sure the role is attached to the instance so that Fluentd has the permissions it needs.

We configured td-agent.conf to collect Nginx access logs from /var/log/nginx/access.log and send them to Kinesis Firehose, which in turn streams them to an S3 bucket, where they can be used for other purposes.


CloudThat is a leading provider of cloud training and consulting services, empowering individuals and organizations to leverage the full potential of cloud computing. With a commitment to delivering cutting-edge expertise, CloudThat equips professionals with the skills needed to thrive in the digital era.
