
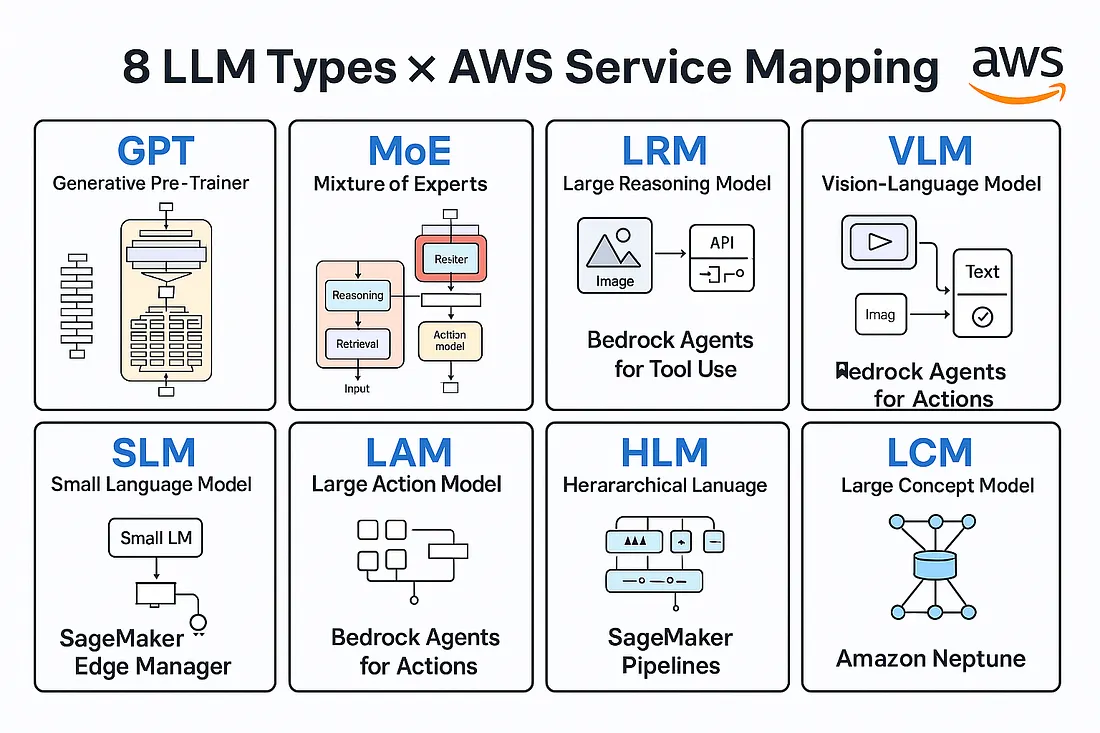

Artificial Intelligence (AI) agents are evolving rapidly — from simple chatbots into autonomous reasoning systems capable of perceiving, deciding, and acting.

At the heart of this transformation lies the diversity of Large Language Models (LLMs), each designed for a distinct kind of cognition or perception.

This blog explores eight emerging LLM architectures, from Generative Pre-trained Transformers to Large Concept Models, and maps each one to the AWS services that support its training, orchestration, and deployment.

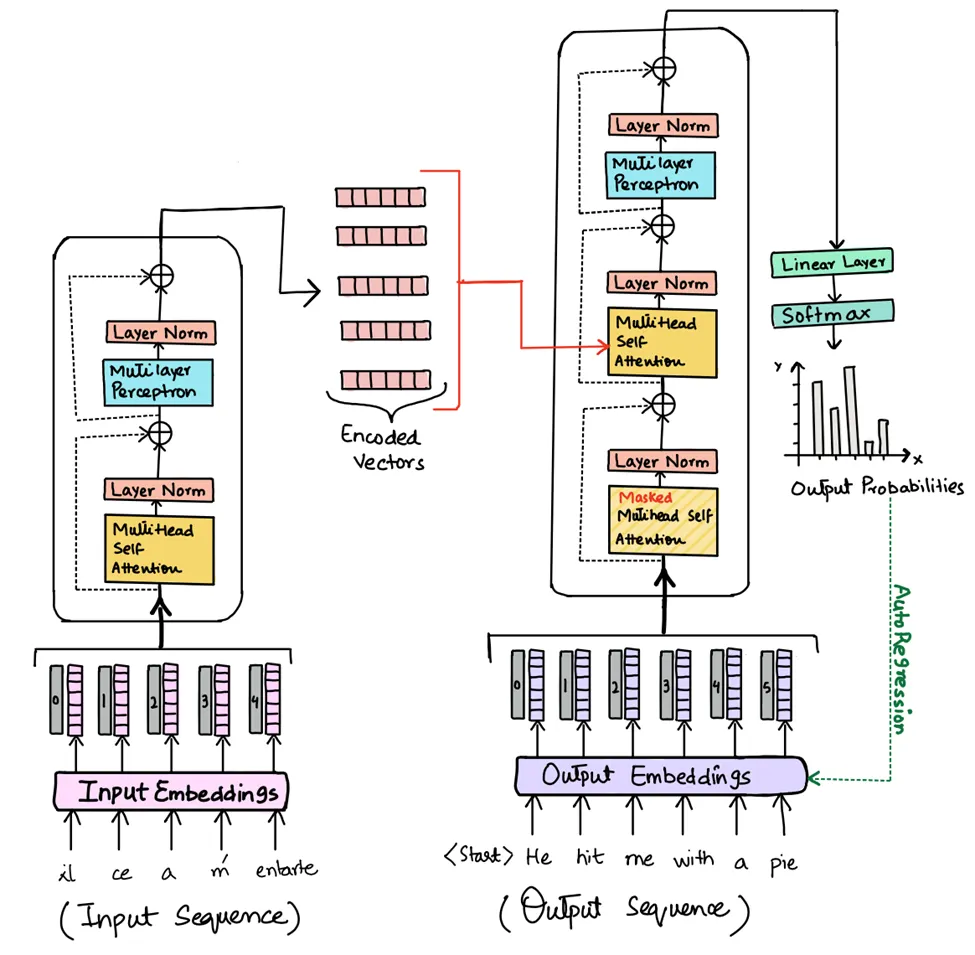

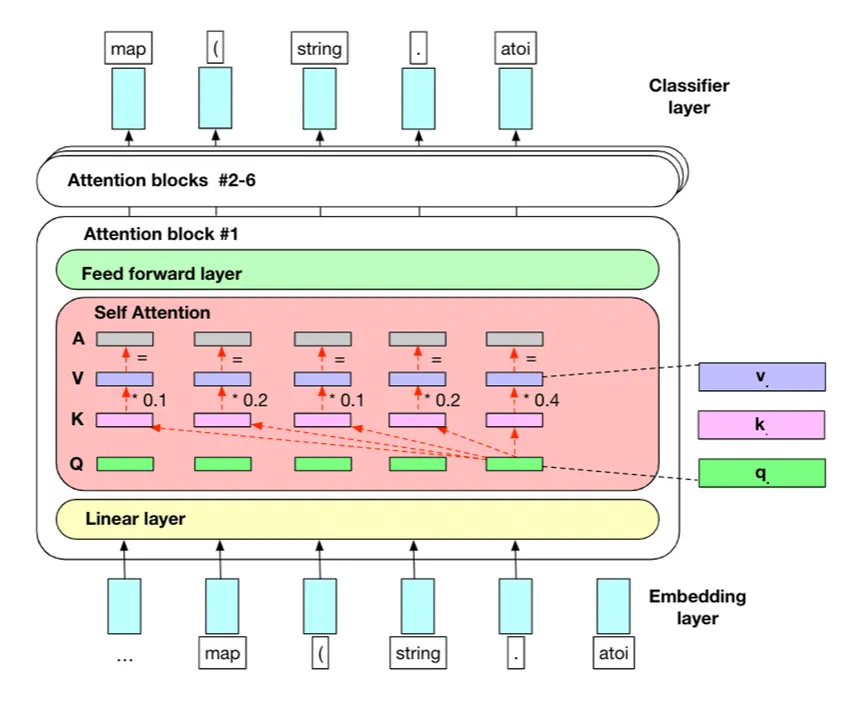

1. GPT — Generative Pre-Trained Transformer

Essence:

GPT models popularized large-scale generative pre-training on the transformer architecture. Trained on massive text corpora, they predict the next token in a sequence, which enables coherent, context-aware generation across text, code, and conversation.
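
To make "predict the next token" concrete, here is a toy sketch of the autoregressive loop that GPT-style models run. As a deliberate simplification, a bigram frequency table (built by the hypothetical helper `train_bigrams`) stands in for the transformer: a real GPT scores every vocabulary token with a neural network conditioned on the whole context, but the predict, append, repeat loop is the same.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: str) -> dict[str, Counter]:
    """Count which token follows which (a stand-in for a trained transformer)."""
    tokens = corpus.split()
    table: dict[str, Counter] = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        table[current][following] += 1
    return table


def generate(table: dict[str, Counter], prompt: str, max_new_tokens: int = 5) -> str:
    """Greedy autoregressive decoding: pick the most likely next token, append, repeat."""
    tokens = prompt.split()
    for _ in range(max_new_tokens):
        candidates = table.get(tokens[-1])
        if not candidates:
            break  # no known continuation, so stop early
        next_token, _count = candidates.most_common(1)[0]
        tokens.append(next_token)
    return " ".join(tokens)


if __name__ == "__main__":
    corpus = "the model reads the prompt and the model predicts the next token"
    model = train_bigrams(corpus)
    print(generate(model, "the", max_new_tokens=4))
```

Greedy decoding is used only to keep the sketch deterministic; production models usually sample with temperature or top-p.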

AWS Enablement:

- Amazon Bedrock offers fully managed access to foundation models such as Claude, Mistral, Llama, and Amazon Nova.

- Amazon SageMaker JumpStart enables fine-tuning, deployment, and governance for open-source GPT-style models.

- Amazon SageMaker Model Cards and SageMaker Model Registry support governance, traceability, and compliance.

Why it matters:

GPTs remain the foundation for generative chatbots, summarization engines, and document assistants.

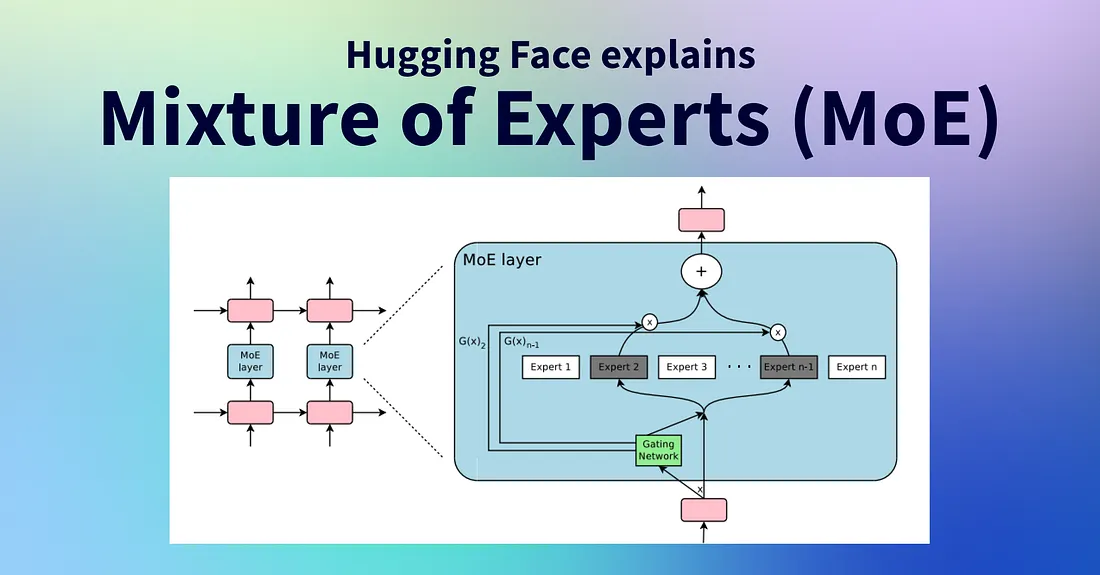

2. MoE — Mixture of Experts

Essence:

Mixture-of-Experts models distribute capacity across many specialized “experts.” A routing (gating) network activates only a few relevant experts for each token, so the total parameter count can grow without a proportional increase in compute per token.
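
A minimal sketch of per-token top-k routing, assuming a linear router and toy experts (plain Python functions standing in for the feed-forward sub-networks real MoE layers use). The names `moe_forward`, `router_weights`, and `top_k` are illustrative, not any framework's API.

```python
import math
from typing import Callable, Sequence

Vector = list[float]


def softmax(scores: Sequence[float]) -> list[float]:
    """Numerically stable softmax over a short list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    token: Vector,
    router_weights: list[Vector],
    experts: list[Callable[[Vector], Vector]],
    top_k: int = 2,
) -> tuple[Vector, list[int]]:
    """Route one token to its top-k experts and return the gated mix of their outputs."""
    # Router: one linear score per expert (dot product of its weight row with the token).
    logits = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
    # Keep only the k highest-scoring experts.
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Renormalize gate weights over the chosen experts only.
    gates = softmax([logits[i] for i in chosen])
    output = [0.0] * len(token)
    for gate, idx in zip(gates, chosen):
        expert_out = experts[idx](token)  # unchosen experts are never evaluated
        output = [o + gate * e for o, e in zip(output, expert_out)]
    return output, chosen


if __name__ == "__main__":
    # Four toy experts that simply scale the input by different factors.
    experts = [lambda v, s=s: [s * x for x in v] for s in (1.0, 2.0, 3.0, 4.0)]
    router = [[0.1, 0.0], [2.0, 0.0], [0.5, 0.0], [1.5, 0.0]]
    mixed, used = moe_forward([1.0, 0.0], router, experts, top_k=2)
    print("experts used:", used, "output:", mixed)
```

With `top_k=2` out of four experts, only half of the expert compute runs per token; the same ratio is what lets large MoE models keep inference cost well below their total parameter count.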

AWS Enablement:

- Large Model Inference Containers in SageMaker support sharded MoE architectures.

- AWS Trainium accelerates distributed training, and AWS Inferentia2 accelerates inference.

- Elastic Fabric Adapter (EFA) provides low-latency networking between accelerator instances, which distributed expert routing relies on.

Why it matters:

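MoE models make trillion-parameter scale practical because compute per token grows with the number of active experts rather than the total parameter count, which makes them well suited to adaptive, multi-domain AI agents.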

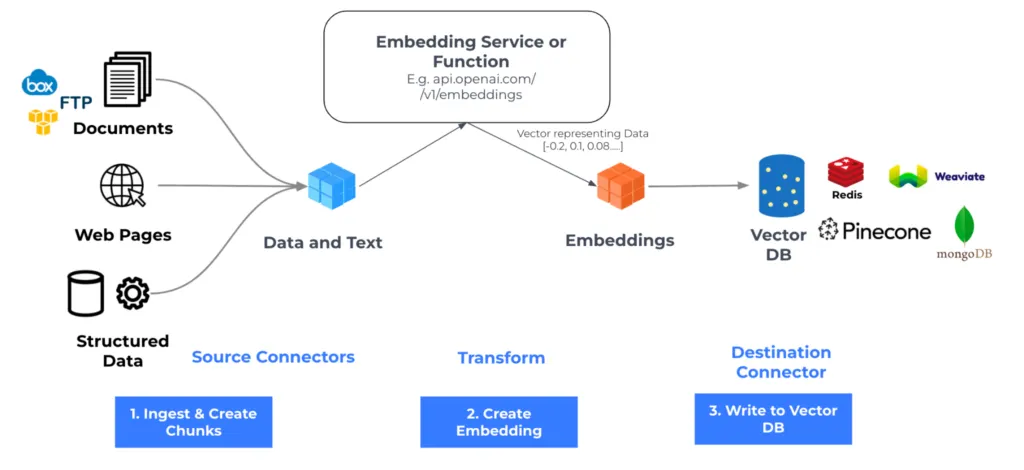

3. LRM — Large Reasoning Model

Essence:

Large Reasoning Models integrate logic, retrieval, and planning. They go beyond next-token prediction by performing chain-of-thought reasoning, tool use, and retrieval-augmented generation (RAG).
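
The retrieval half of RAG can be sketched in a few lines: score stored documents against the question, then prepend the best matches to the prompt. This assumes a toy bag-of-words "embedding" in place of a real embedding model; in an AWS deployment that step would typically be handled by a Bedrock Knowledge Base or an OpenSearch Serverless vector index. All function names here are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 1) -> str:
    """Augment the user question with retrieved context before generation."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = [
        "Refunds are processed within 5 business days.",
        "Our office is closed on public holidays.",
        "Shipping to Europe takes 7 to 10 days.",
    ]
    print(build_prompt("How many days until my refund is processed?", docs))
```

The augmented prompt, not the bare question, is what gets sent to the model, which grounds its answer in the retrieved text.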

AWS Enablement:

- Bedrock Agents combine LLMs with knowledge bases and API calls.

- Amazon Kendra provides semantic search, and Amazon OpenSearch Serverless provides vector-based retrieval.

- AWS Lambda and Step Functions orchestrate multi-step reasoning flows.

Why it matters:

LRMs underpin autonomous agents that can plan, query data sources, and make informed decisions.

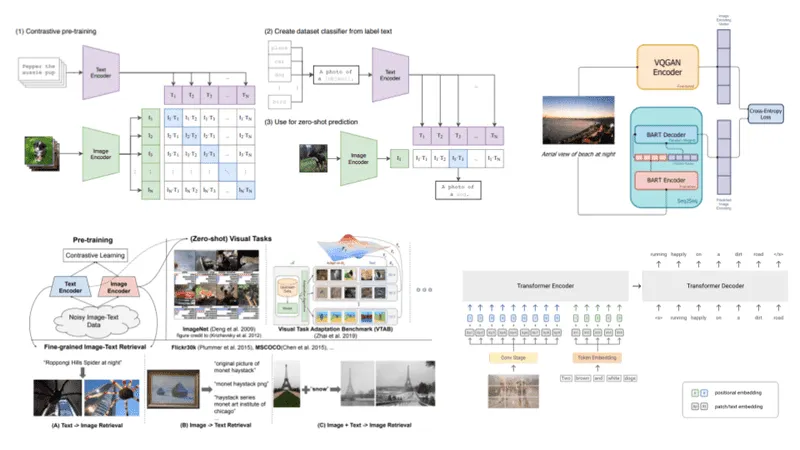

4. VLM — Vision-Language Model

Essence:

Vision-Language Models combine perception and text reasoning — interpreting images, charts, or videos in context with natural language prompts.

AWS Enablement:

- Amazon Bedrock offers multimodal models such as Claude 3 Opus and Amazon Nova.

- SageMaker training jobs can fine-tune models on image-text datasets.

- Amazon Rekognition provides complementary visual analysis.
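
For the Amazon Bedrock option, a sketch of sending an image and a question together through the Bedrock Runtime Converse API with boto3 follows. The helper names, the file path, and `YOUR_MODEL_ID` are placeholders; check which multimodal model IDs are enabled in your account and Region. Only `ask_about_image` needs boto3 and AWS credentials; the request builder runs anywhere.

```python
from pathlib import Path
from typing import Any

SUPPORTED_FORMATS = {"png", "jpeg", "gif", "webp"}


def build_image_request(model_id: str, image_bytes: bytes, image_format: str, question: str) -> dict[str, Any]:
    """Build Converse API keyword arguments with one image block and one text block."""
    if image_format not in SUPPORTED_FORMATS:
        raise ValueError(f"unsupported image format: {image_format}")
    return {
        "modelId": model_id,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"image": {"format": image_format, "source": {"bytes": image_bytes}}},
                    {"text": question},
                ],
            }
        ],
    }


def ask_about_image(model_id: str, image_path: str, question: str) -> str:
    """Send the request to Bedrock (requires boto3, credentials, and model access)."""
    import boto3  # imported lazily so the request builder works without boto3 installed

    path = Path(image_path)
    image_format = path.suffix.lstrip(".").lower()
    image_format = "jpeg" if image_format == "jpg" else image_format
    client = boto3.client("bedrock-runtime")
    request = build_image_request(model_id, path.read_bytes(), image_format, question)
    response = client.converse(**request)
    return response["output"]["message"]["content"][0]["text"]


# Example (hypothetical file and model ID):
# print(ask_about_image("YOUR_MODEL_ID", "invoice.png", "What is the total amount due?"))
```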

Why it matters:

VLMs enable AI agents that “see and speak” — from document OCR copilots to visual inspection systems.

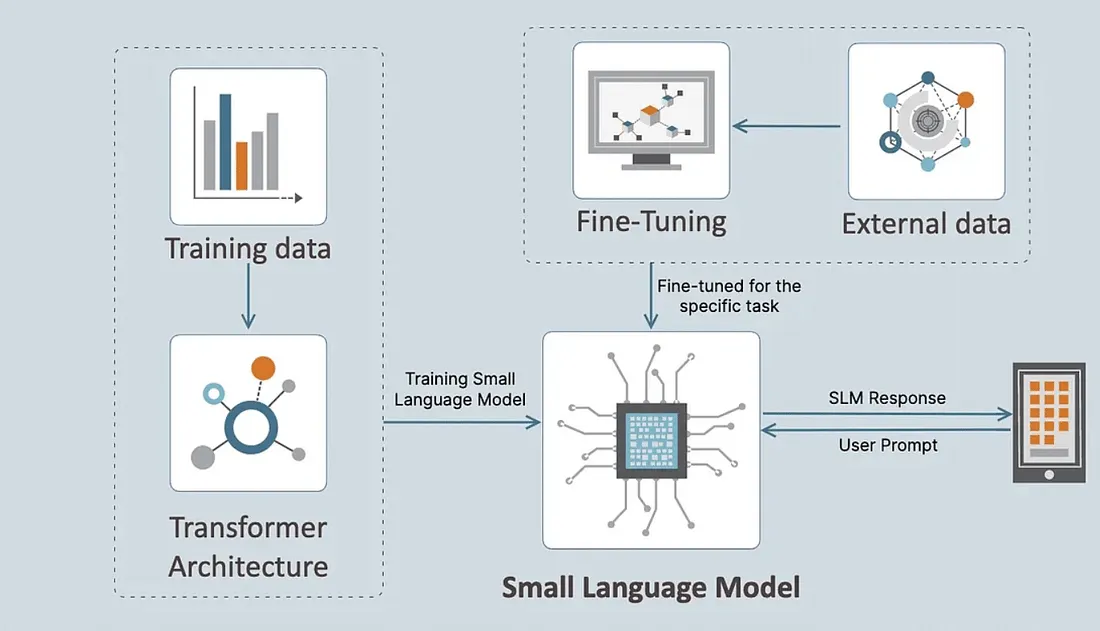

5. SLM — Small Language Model

Essence:

Small Language Models balance intelligence with efficiency. Optimized for latency, privacy, and cost, they power on-device assistants, edge analytics, and embedded AI.
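
A back-of-the-envelope calculation shows why parameter count decides where a model can run. The sketch estimates weight memory only (parameters × bits per parameter ÷ 8); the parameter counts and bit widths are illustrative assumptions, and the 4-bit case assumes the model has been quantized.

```python
def weights_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory needed just to hold model weights, in decimal gigabytes.

    Ignores activations, KV cache, and runtime overhead, so real usage is higher.
    """
    bytes_total = num_params * bits_per_param / 8
    return bytes_total / 1e9


if __name__ == "__main__":
    for name, params, bits in [
        ("70B model, 16-bit", 70e9, 16),
        ("7B model, 16-bit", 7e9, 16),
        ("3B model, 4-bit", 3e9, 4),
    ]:
        print(f"{name}: ~{weights_memory_gb(params, bits):.1f} GB")
```

Roughly 140 GB versus 1.5 GB of weights is the difference between a multi-accelerator server and a phone or edge gateway.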

AWS Enablement:

- AWS IoT Greengrass deploys models to IoT and industrial edge devices (SageMaker Edge Manager, formerly used for this, was discontinued in April 2024).

- Amazon EC2 Inf2 instances, powered by AWS Inferentia2 chips, lower inference cost.

- ECS / EKS scale lightweight inference containers.

Why it matters:

SLMs make language intelligence ubiquitous — from factory sensors to mobile apps.

6. LAM — Large Action Model

Essence:

Large Action Models are designed to act, not just reason — translating natural-language intent into executable API calls or workflows.
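
The intent-to-action step can be sketched as follows: the model emits a structured action (assumed here to be JSON with `name` and `arguments` fields), and application code validates it against an allow-list before executing anything. The tool names and JSON shape are hypothetical; Bedrock Agents define actions through their own action-group schemas.

```python
import json
from typing import Any, Callable


# Hypothetical business actions; in production these might be Lambda functions or REST APIs.
def create_ticket(title: str, priority: str = "normal") -> dict[str, Any]:
    return {"status": "created", "title": title, "priority": priority}


def refund_order(order_id: str, amount: float) -> dict[str, Any]:
    return {"status": "refunded", "order_id": order_id, "amount": amount}


# Allow-list: the model can only trigger actions registered here.
TOOLS: dict[str, Callable[..., dict[str, Any]]] = {
    "create_ticket": create_ticket,
    "refund_order": refund_order,
}


def execute_action(model_output: str) -> dict[str, Any]:
    """Parse a JSON action emitted by the model, validate it, and run it."""
    try:
        action = json.loads(model_output)
    except json.JSONDecodeError:
        return {"status": "error", "reason": "model output is not valid JSON"}
    if not isinstance(action, dict):
        return {"status": "error", "reason": "action must be a JSON object"}
    name = action.get("name")
    arguments = action.get("arguments", {})
    if not isinstance(name, str) or name not in TOOLS:
        return {"status": "error", "reason": f"unknown action: {name}"}
    if not isinstance(arguments, dict):
        return {"status": "error", "reason": "arguments must be a JSON object"}
    try:
        return TOOLS[name](**arguments)
    except TypeError as exc:  # missing or unexpected arguments
        return {"status": "error", "reason": str(exc)}


if __name__ == "__main__":
    # What a model might emit for "Refund order A-1001, it arrived damaged".
    print(execute_action('{"name": "refund_order", "arguments": {"order_id": "A-1001", "amount": 25.0}}'))
```

Returning structured errors instead of raising lets the agent feed the failure back to the model so it can correct its next action.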

AWS Enablement:

- Bedrock Agents enable LLMs to securely call business APIs.

- AWS Lambda, EventBridge, and Step Functions automate downstream actions.

- Amazon CloudWatch monitors AI-driven workflows and triggers alerts.

Why it matters:

LAMs bridge the gap between intelligence and automation — forming the backbone of autonomous business agents.

7. HLM — Hierarchical Language Model

Essence:

Hierarchical Language Models structure intelligence in layers: a top-level planner orchestrates sub-models that handle specialized tasks — akin to human delegation.
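
The delegation pattern can be sketched as a planner that picks specialist handlers and threads a shared context between them. The specialists are trivial Python functions standing in for sub-models, and the rule-based `plan` stands in for a planning model; all names are hypothetical.

```python
from typing import Callable


# Hypothetical specialist "sub-models"; each could be a separate model endpoint in practice.
def classify(text: str) -> str:
    return "billing" if "invoice" in text.lower() else "general"


def summarize(text: str) -> str:
    return text.split(".")[0].strip() + "."


def draft_reply(text: str) -> str:
    return f"Thanks for reaching out about: {text}"


SPECIALISTS: dict[str, Callable[[str], str]] = {
    "classify": classify,
    "summarize": summarize,
    "draft_reply": draft_reply,
}


def plan(ticket: str) -> list[str]:
    """Top-level planner: choose which specialists run, and in what order.

    Simple rules stand in for what would be a planning model in a real HLM.
    """
    steps = ["classify", "summarize"]
    if "?" in ticket:  # only draft a reply when the customer asked something
        steps.append("draft_reply")
    return steps


def run(ticket: str) -> dict[str, str]:
    """Execute the plan, passing context from one tier to the next."""
    context: dict[str, str] = {"input": ticket}
    for step in plan(ticket):
        # Later specialists work from the summary once it exists, else the raw input.
        source = context.get("summarize", context["input"])
        context[step] = SPECIALISTS[step](source)
    return context


if __name__ == "__main__":
    print(run("My invoice shows a double charge. Can you fix it?"))
```

In a distributed version, the `context` dictionary is what would travel between tiers via Step Functions state or SQS messages.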

AWS Enablement:

- SageMaker Pipelines or Step Functions chain models and maintain context across levels.

- Bedrock Knowledge Bases provide long-term memory.

- Amazon SQS and Amazon SNS handle asynchronous message passing between model tiers.

Why it matters:

HLMs allow scalable coordination — multiple models working together toward complex objectives.

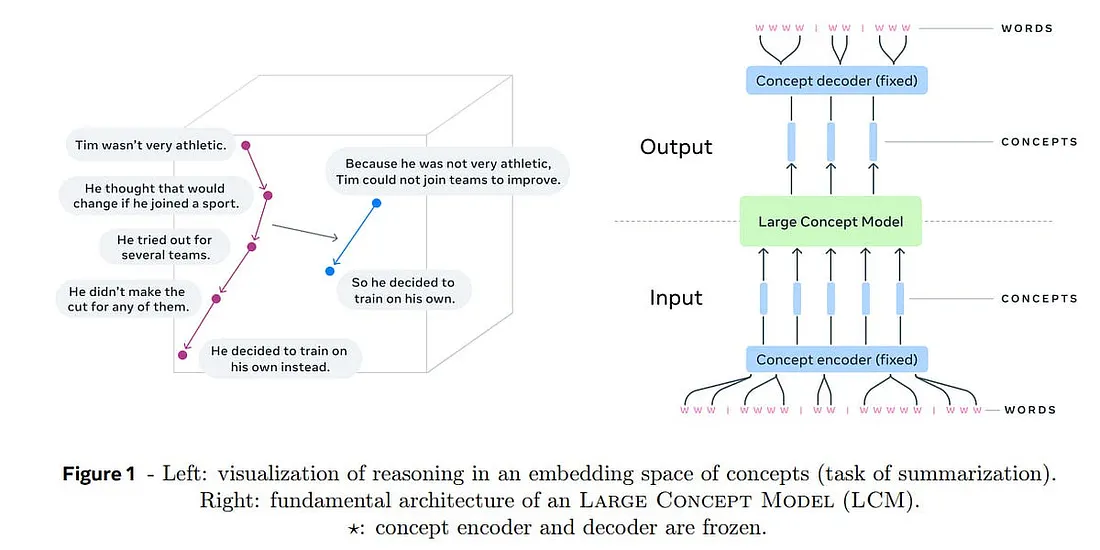

8. LCM — Large Concept Model

Essence:

Large Concept Models operate at a higher semantic level, understanding and generating concepts instead of words. They work with knowledge graphs and domain ontologies to support abstract reasoning.
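
Concept-level reasoning over a knowledge graph can be illustrated with a tiny in-memory ontology. This is a sketch under strong assumptions: the `IS_A` dictionary and helper names are hypothetical, and in an AWS deployment the graph might instead live in Amazon Neptune and be queried with Gremlin, openCypher, or SPARQL.

```python
from collections import deque

# Hypothetical mini-ontology: each concept points to its broader ("is-a") concepts.
IS_A: dict[str, list[str]] = {
    "espresso": ["coffee"],
    "latte": ["coffee", "dairy drink"],
    "coffee": ["beverage"],
    "green tea": ["tea"],
    "tea": ["beverage"],
    "dairy drink": ["beverage"],
    "beverage": [],
}


def ancestors(concept: str) -> set[str]:
    """All broader concepts reachable from `concept` (breadth-first traversal)."""
    seen: set[str] = set()
    queue = deque(IS_A.get(concept, []))
    while queue:
        parent = queue.popleft()
        if parent not in seen:
            seen.add(parent)
            queue.extend(IS_A.get(parent, []))
    return seen


def shared_concepts(a: str, b: str) -> set[str]:
    """Concepts that generalize both inputs, e.g. to explain why two items are related."""
    return ancestors(a) & ancestors(b)


if __name__ == "__main__":
    print(shared_concepts("espresso", "latte"))
    print(shared_concepts("espresso", "green tea"))
```

Reasoning over shared ancestors rather than surface words is what lets a concept-aware system relate "espresso" and "green tea" even though the strings share nothing.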

AWS Enablement:

- Amazon Neptune stores and queries concept graphs.

- Embedding models on Amazon Bedrock, such as Amazon Titan Text Embeddings, encode conceptual similarity.

- SageMaker Feature Store manages semantic vectors and relationships.

- AWS Glue Data Catalog provides metadata alignment for conceptual linking.

Why it matters:

LCMs are the foundation for cognitive search, conceptual reasoning, and domain-aware copilots.

The AWS Perspective: From Models to Agents

Across these eight archetypes, AWS provides a continuum of generative AI infrastructure:

- Bedrock for managed foundation models

- SageMaker for training, fine-tuning, and deployment

- Lambda / Step Functions for orchestration

- Neptune, Kendra, and OpenSearch for knowledge integration

- Trainium / Inferentia for scalable compute efficiency

Together, they enable agentic architectures that integrate perception (VLM), reasoning (LRM/HLM), and action (LAM) — the three pillars of intelligent systems.

Conclusion:

The shift from a single omnipotent LLM to an ecosystem of specialized models marks a new era of AI system design.

Future AI agents will blend GPTs for language, MoEs for scale, LRMs for reasoning, VLMs for perception, and LAMs for action — all orchestrated in a modular, governed, and secure way.

AWS stands at the center of this convergence — providing the tools, governance, and scale to turn these model architectures into real-world AI agents.

About CloudThat

CloudThat is an award-winning company and the first in India to offer cloud training and consulting services worldwide. As a Microsoft Solutions Partner, AWS Advanced Tier Training Partner, and Google Cloud Platform Partner, CloudThat has empowered over 850,000 professionals through 600+ cloud certifications, winning global recognition for its training excellence, including 20 MCT Trainers in Microsoft’s Global Top 100 and an impressive 12 awards in the last 8 years. CloudThat specializes in Cloud Migration, Data Platforms, DevOps, IoT, and cutting-edge technologies like Gen AI & AI/ML. It has delivered over 500 consulting projects for 250+ organizations in 30+ countries as it continues to empower professionals and enterprises to thrive in the digital-first world.

WRITTEN BY Prarthit Mehta

Prarthit Mehta, CTO of CloudThat’s Cloud Consulting Services, brings over a decade of experience in driving digital transformation across industries. He leads technology strategy, cloud development, security compliance, and IT operations. An AWS Partner Ambassador and holder of multiple AWS and Microsoft Azure certifications, he brings deep expertise in cloud and big data platforms. Prarthit has delivered solutions across diverse industry domains and actively mentors aspiring technologists, enhancing innovation and growth in the tech community.

October 27, 2025