

Overview

AI agents are no longer a futuristic idea; they are already reshaping how businesses operate. From intelligent chatbots to autonomous data analysis agents, companies are deploying them to boost productivity and improve decision-making.

But there’s a catch: as agents become more autonomous, capable of making decisions, triggering workflows, and interacting with other systems, their cloud costs can spiral. Every model inference, storage lookup, and background task adds to the bill, and without cost controls, “autonomy” can quickly become an expensive problem.


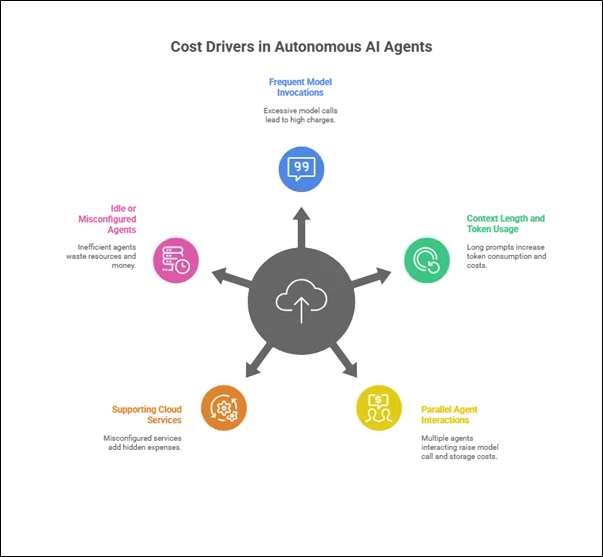

The Cost Challenge in Autonomous AI Agents

Several factors drive the cost of running autonomous agents in the cloud:

- Frequent Model Invocations

Every time an agent calls a foundation model (an LLM or otherwise), it incurs a charge. When agents are overly chatty or redundant, the bill grows fast.

- Context Length and Token Usage

LLM pricing is typically based on the number of input and output tokens. Long prompts that repeat conversation history or carry unnecessary context can balloon costs.

- Parallel Agent Interactions

In multi-agent systems, each agent-to-agent interaction adds another round of model calls, storage operations, or compute usage.

- Supporting Cloud Services

Behind every agent sit services such as AWS Lambda, Amazon DynamoDB, and Amazon S3. When misconfigured or overused, they can introduce hidden costs.

- Idle or Misconfigured Agents

Agents that poll constantly or run background checks when no tasks exist waste resources and money.
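To see why context length matters, here is a rough cost model in Python. The per-token prices are hypothetical placeholders, not published rates, and the sketch assumes every message in a conversation has the same size. It compares sending only the new message on each call with re-sending the full history every time.

```python
# Hypothetical prices for illustration only; check current Amazon Bedrock
# pricing for the model you actually use.
PRICE_PER_1K_INPUT = 0.003   # USD per 1,000 input tokens (assumed)
PRICE_PER_1K_OUTPUT = 0.015  # USD per 1,000 output tokens (assumed)


def call_cost(input_tokens: int, output_tokens: int) -> float:
    """Cost of one model invocation under per-token pricing."""
    return (input_tokens / 1000) * PRICE_PER_1K_INPUT + (output_tokens / 1000) * PRICE_PER_1K_OUTPUT


def conversation_cost(turns: int, tokens_per_message: int, resend_history: bool) -> float:
    """Total cost of a conversation with `turns` model calls.

    Simplification: call n either sends just the new message, or re-sends
    all n messages so far, each `tokens_per_message` long.
    """
    total = 0.0
    for n in range(1, turns + 1):
        input_tokens = tokens_per_message * (n if resend_history else 1)
        total += call_cost(input_tokens, tokens_per_message)
    return total


print(conversation_cost(20, 500, resend_history=False))  # ≈ 0.18
print(conversation_cost(20, 500, resend_history=True))   # ≈ 0.465
```

Under these assumptions, re-sending history makes the input for call n proportional to n, so total input tokens grow roughly quadratically with conversation length. That is the growth that summarization and retrieval aim to cut.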

Principles for Balancing Autonomy and Costs

- Design for Task Efficiency

Agents should only perform actions that are necessary and high-value. Avoid designing overly “chatty” agents that interact more than required.
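One simple way to keep an agent from becoming overly chatty is a hard cap on the number of actions it may take per task. The sketch below assumes a hypothetical `plan_next_step` callback (for example, one that asks a model for the next action) that returns `None` once the task is done; the two planners are toy examples.

```python
from typing import Callable, List, Optional, Tuple


def run_agent(
    plan_next_step: Callable[[List[str]], Optional[str]], max_steps: int = 5
) -> Tuple[List[str], bool]:
    """Run an agent loop with a hard cap on the number of actions.

    `plan_next_step` is a hypothetical callback that sees the actions taken so
    far and returns the next action, or None when the task is complete.
    Returns (actions taken, finished within the cap).
    """
    actions: List[str] = []
    for _ in range(max_steps):
        action = plan_next_step(actions)
        if action is None:
            return actions, True  # the agent decided it is done
        actions.append(action)
    return actions, False  # cap reached: stop and escalate instead of looping


def three_step_planner(history: List[str]) -> Optional[str]:
    # Toy planner that finishes after three actions.
    return None if len(history) >= 3 else f"step-{len(history) + 1}"


def runaway_planner(history: List[str]) -> Optional[str]:
    # Toy planner that never finishes, like a chatty agent re-checking the same thing.
    return "check again"


print(run_agent(three_step_planner))            # (['step-1', 'step-2', 'step-3'], True)
print(run_agent(runaway_planner, max_steps=4))  # four 'check again' actions, then False
```

The cap turns a runaway loop into a bounded, predictable cost; what happens when it is hit (escalate to a human, return a partial answer) is a design choice left to the application.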

- Optimize Token Usage

- Compress context by summarizing prior conversations.

- Use embeddings and vector databases to fetch only relevant context.

- Employ smaller, cheaper models for lightweight tasks and reserve larger models for critical reasoning.
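The following sketch combines the first two ideas: it keeps only the last few conversation turns alongside a running summary, and it retrieves only the most relevant knowledge chunks by cosine similarity. The three-dimensional vectors are toy stand-ins for real embeddings (which would come from an embedding model and live in a vector database), and the summary is assumed to be produced elsewhere, for example by a cheaper model.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k_chunks(query_vec: Sequence[float], chunks: Dict[str, List[float]], k: int = 2) -> List[str]:
    """Return the k chunk texts whose embeddings are most similar to the query."""
    return sorted(chunks, key=lambda text: cosine(query_vec, chunks[text]), reverse=True)[:k]


def build_context(summary: str, turns: List[str], retrieved: List[str], keep_last: int = 2) -> str:
    """Compact prompt: running summary + last few turns + retrieved chunks only."""
    lines = [f"Summary so far: {summary}"]
    lines += turns[-keep_last:] if keep_last > 0 else []
    lines += [f"Reference: {chunk}" for chunk in retrieved]
    return "\n".join(lines)


# Toy 3-D "embeddings" standing in for real embedding-model output (assumption).
chunks = {
    "refund policy": [0.9, 0.1, 0.0],
    "shipping times": [0.1, 0.9, 0.0],
    "office locations": [0.0, 0.1, 0.9],
}
query_vec = [0.8, 0.2, 0.0]  # pretend embedding of "How do refunds work?"

history = ["user: hi", "assistant: hello", "user: How do refunds work?"]
prompt = build_context("Customer asked about an order.", history, top_k_chunks(query_vec, chunks, k=1))
print(prompt)
```

Only the summary, two recent turns, and one relevant chunk reach the model, rather than the whole history and knowledge base.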

- Use Tiered Model Architectures

Not every decision requires a large foundation model.

- Lightweight models (e.g., smaller LLMs or rule-based agents) handle simple queries.

- Large LLMs (e.g., Claude, GPT, Llama 70B) are invoked only for complex reasoning.
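A tiered setup needs a routing step. Below is a minimal heuristic router, assuming that short queries without reasoning keywords are safe for the small tier. The keyword list, word threshold, and model identifiers are hypothetical placeholders; in practice the router could itself be a small classifier model.

```python
import re

# Hypothetical model identifiers; substitute the model IDs you actually use.
SMALL_MODEL = "small-model-id"
LARGE_MODEL = "large-model-id"

# Hypothetical keywords suggesting multi-step reasoning.
REASONING_HINTS = frozenset({"why", "compare", "plan", "analyze", "explain", "trade-off"})


def choose_model(query: str, max_simple_words: int = 30) -> str:
    """Route short, simple queries to the small tier; long or reasoning-heavy ones to the large tier."""
    words = re.findall(r"[a-z0-9'-]+", query.lower())
    if len(words) > max_simple_words or REASONING_HINTS.intersection(words):
        return LARGE_MODEL
    return SMALL_MODEL


print(choose_model("What are your opening hours?"))                               # small-model-id
print(choose_model("Compare these two vendor contracts and explain the risks."))  # large-model-id
```

Tokenizing into whole words avoids false matches such as "plan" inside "explanation". Misroutes in either direction cost money or quality, so the thresholds are worth tuning against real traffic.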

- Implement Caching

- Cache frequently asked queries and responses in Amazon DynamoDB or Redis.

- Store embeddings in vector databases to avoid re-embedding the same content.
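A minimal exact-match response cache is sketched below. The in-memory dictionary stands in for a DynamoDB table or Redis instance (an assumption for illustration). Keys are SHA-256 hashes of the normalized prompt, and entries expire after a time-to-live so stale answers are not served forever. `fake_model` is a hypothetical stand-in for a real model call.

```python
import hashlib
import time
from typing import Callable, Dict, List, Optional, Tuple


class ResponseCache:
    """In-memory stand-in for a DynamoDB or Redis cache with per-entry expiry."""

    def __init__(self, ttl_seconds: float = 3600.0, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested
        self._items: Dict[str, Tuple[float, str]] = {}

    @staticmethod
    def key(prompt: str) -> str:
        # Normalize case and whitespace so trivially different prompts share a key.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def get(self, prompt: str) -> Optional[str]:
        k = self.key(prompt)
        entry = self._items.get(k)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._items[k]  # expired: evict and treat as a miss
            return None
        return value

    def put(self, prompt: str, value: str) -> None:
        self._items[self.key(prompt)] = (self.clock() + self.ttl, value)


def answer(prompt: str, cache: ResponseCache, invoke_model: Callable[[str], str]) -> str:
    """Serve from cache when possible; otherwise call the model once and cache the result."""
    cached = cache.get(prompt)
    if cached is not None:
        return cached
    response = invoke_model(prompt)
    cache.put(prompt, response)
    return response


model_calls: List[str] = []


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for a real (billed) model invocation.
    model_calls.append(prompt)
    return f"answer to: {prompt}"


cache = ResponseCache(ttl_seconds=60)
answer("What is your refund policy?", cache, fake_model)
answer("what is your  refund policy?", cache, fake_model)  # cache hit
print(len(model_calls))  # 1
```

The same hash-keyed pattern applies to embeddings: hash the content and re-embed only when the hash is new. Exact-match caching misses paraphrases; catching those would require a semantic cache keyed on embedding similarity.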

- Pay-as-You-Go Infrastructure

Use serverless and on-demand AWS services such as Amazon Bedrock, AWS Lambda, and Amazon DynamoDB On-Demand so that costs scale with actual usage.
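Pay-as-you-go is cheapest when usage is low or bursty, but it is worth knowing where the break-even point lies. The arithmetic below uses hypothetical placeholder prices (not AWS list prices) for an always-on resource versus a per-request service.

```python
from typing import Tuple

# Hypothetical placeholder prices, not AWS list prices.
ALWAYS_ON_HOURLY_USD = 0.10  # always-on server or provisioned capacity
PER_REQUEST_USD = 0.0002     # all-in cost of one request on a pay-per-use service
HOURS_PER_MONTH = 730


def monthly_costs(requests_per_month: int) -> Tuple[float, float]:
    """Return (always-on cost, pay-per-use cost) for one month."""
    always_on = ALWAYS_ON_HOURLY_USD * HOURS_PER_MONTH  # billed even when idle
    pay_per_use = PER_REQUEST_USD * requests_per_month  # scales with actual usage
    return always_on, pay_per_use


def break_even_requests() -> float:
    """Monthly request volume at which both models cost the same."""
    return ALWAYS_ON_HOURLY_USD * HOURS_PER_MONTH / PER_REQUEST_USD


for volume in (10_000, 100_000, 1_000_000):
    always_on, pay_per_use = monthly_costs(volume)
    print(f"{volume:>9,} requests: always-on ${always_on:.2f}, pay-per-use ${pay_per_use:.2f}")
print(f"break-even ≈ {break_even_requests():,.0f} requests/month")
```

With these placeholder numbers, pay-per-use stays cheaper below about 365,000 requests a month; above that, provisioned capacity may win. Plug in current prices for your own services before drawing conclusions.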

- Introduce Agent Budgeting & Cost Limits

- Set cost ceilings per agent or workflow.

- Implement usage throttling, and monitor costs with Amazon CloudWatch and AWS Budgets.
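Per-agent ceilings can also be enforced in application code before each model call, complementing AWS Budgets alerts. In this sketch the `AgentBudget` class, its 80% warning ratio, and the agent name are hypothetical; the caller would record the estimated cost of each call and throttle or alert once the warning threshold is crossed.

```python
from collections import defaultdict
from typing import Dict


class BudgetExceeded(RuntimeError):
    """Raised when a call would push an agent past its cost ceiling."""


class AgentBudget:
    """Hypothetical per-agent spend tracker enforced before each model call."""

    def __init__(self, ceilings_usd: Dict[str, float], warn_ratio: float = 0.8):
        self.ceilings = ceilings_usd
        self.warn_ratio = warn_ratio
        self.spent: Dict[str, float] = defaultdict(float)

    def charge(self, agent_id: str, cost_usd: float) -> bool:
        """Record a cost; return True once spend reaches the warning threshold.

        Raises BudgetExceeded (and records nothing) if the ceiling would be crossed.
        """
        ceiling = self.ceilings[agent_id]
        if self.spent[agent_id] + cost_usd > ceiling:
            raise BudgetExceeded(f"{agent_id} would exceed its ${ceiling:.2f} ceiling")
        self.spent[agent_id] += cost_usd
        return self.spent[agent_id] >= self.warn_ratio * ceiling


budget = AgentBudget({"support-agent": 1.00})
print(budget.charge("support-agent", 0.50))  # False: under the warning threshold
print(budget.charge("support-agent", 0.35))  # True: 85% spent, time to throttle or alert
try:
    budget.charge("support-agent", 0.20)     # would reach $1.05
except BudgetExceeded as err:
    print(err)
```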

- Asynchronous Workflows

Instead of real-time synchronous interactions, agents can use event-driven architectures with Amazon SQS or Amazon EventBridge, reducing idle compute time.
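The saving from event-driven design comes from how often code wakes up. The sketch below counts wake-ups for a fixed-interval poller versus an event-driven consumer over the same period. The event timestamps are made up, and it assumes each poll drains every event that arrived since the previous poll.

```python
import math
from typing import List


def polling_wakeups(horizon_s: float, interval_s: float) -> int:
    """A fixed-interval poller wakes up (and is billed) on every tick, work or not."""
    return math.floor(horizon_s / interval_s)


def empty_polls(event_times_s: List[float], horizon_s: float, interval_s: float) -> int:
    """Count polls that found no work.

    The poll at tick k (time k * interval_s) picks up every event that arrived
    in ((k - 1) * interval_s, k * interval_s].
    """
    ticks = polling_wakeups(horizon_s, interval_s)
    busy_ticks = {math.ceil(t / interval_s) for t in event_times_s if 0 < t <= ticks * interval_s}
    return ticks - len(busy_ticks)


def event_driven_invocations(event_times_s: List[float]) -> int:
    """An event-driven consumer runs at most once per event."""
    return len(event_times_s)


day = 24 * 60 * 60
events = [30, 45, 3600, 7200.5]  # made-up arrival times in seconds
print(polling_wakeups(day, 60))          # 1440
print(empty_polls(events, day, 60))      # 1437
print(event_driven_invocations(events))  # 4
```

Here the poller wakes up 1,440 times in a day and finds nothing to do on 1,437 of them, while the event-driven consumer runs at most four times. With an Amazon SQS trigger, Lambda can also receive several messages per invocation, so the real count may be lower.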

- Batch Processing

For repetitive workloads (e.g., document summarization), batch multiple tasks in a single request to reduce per-call costs.
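Batching pays off because the shared instructions are sent once per batch instead of once per document, and fewer requests are made overall. This greedy packer is a sketch under a simplifying assumption: token counts are approximated by whitespace-separated words, which real tokenizers will not match exactly.

```python
from typing import List

INSTRUCTIONS = "Summarize each numbered document in one sentence."


def approx_tokens(text: str) -> int:
    # Rough assumption: one token per whitespace-separated word.
    return len(text.split())


def make_batches(docs: List[str], max_tokens: int) -> List[List[str]]:
    """Greedily pack documents so each batch plus the shared instructions fits max_tokens.

    A single document larger than the budget still gets its own (oversized) batch.
    """
    budget = max_tokens - approx_tokens(INSTRUCTIONS)
    batches: List[List[str]] = []
    current: List[str] = []
    used = 0
    for doc in docs:
        size = approx_tokens(doc)
        if current and used + size > budget:
            batches.append(current)  # close the full batch and start a new one
            current, used = [], 0
        current.append(doc)
        used += size
    if current:
        batches.append(current)
    return batches


def batch_prompt(batch: List[str]) -> str:
    """One request per batch: instructions once, then numbered documents."""
    numbered = "\n".join(f"{i}. {doc}" for i, doc in enumerate(batch, start=1))
    return f"{INSTRUCTIONS}\n{numbered}"


docs = [" ".join(["word"] * 40) for _ in range(5)]  # five 40-word documents
batches = make_batches(docs, max_tokens=100)
print([len(b) for b in batches])  # [2, 2, 1]: 3 requests instead of 5
```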

AWS Tools for Cost Optimization in AI Agents

- Amazon Bedrock – Offers access to multiple foundation models with pay-as-you-go pricing (per token for text models) and no model-hosting infrastructure to manage.

- Amazon CloudWatch & AWS Cost Explorer – CloudWatch tracks model invocations, API calls, and token usage; Cost Explorer breaks down the resulting spend by service and over time.

- AWS Lambda – Run code only when triggered, avoiding idle compute costs.

- Amazon DynamoDB On-Demand – Pay only for reads/writes instead of provisioning throughput.

- Amazon S3 Intelligent-Tiering – Optimizes storage costs automatically for agent memory or logs.

- AWS Budgets & Trusted Advisor – Set alerts and optimize resource allocation.

Example Architecture: Cost-Optimized AI Agent on AWS

- User Request → Amazon API Gateway

- Agent Orchestration → AWS Lambda with Amazon Bedrock

- Knowledge Retrieval → Amazon DynamoDB + Amazon OpenSearch Service + Amazon S3

- Caching Layer → Amazon DynamoDB for frequent responses

- Cost Monitoring → Amazon CloudWatch + AWS Budgets

- Decision Layer → Multi-tier model (small LLM for routine, large LLM for complex)

This architecture is designed to minimize idle time, reduce redundant model calls, and keep cost allocation under control.
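The request flow above can be sketched as a single Lambda-style handler. This is a minimal, self-contained illustration under stated assumptions: the cache dictionary stands in for DynamoDB, the cost log for CloudWatch metrics, `invoke_model` is a hypothetical placeholder for an Amazon Bedrock call, the event follows the API Gateway proxy shape with a JSON `body`, and knowledge retrieval is omitted for brevity.

```python
import json
from typing import Any, Dict, List

# Hypothetical in-memory stand-ins for the managed services in the diagram.
RESPONSE_CACHE: Dict[str, str] = {}  # caching layer (DynamoDB in the architecture)
COST_LOG: List[Dict[str, Any]] = []  # cost monitoring (CloudWatch metrics in the architecture)


def route(query: str) -> str:
    """Decision layer: pick a model tier with a cheap heuristic (hypothetical IDs)."""
    return "large-model-id" if len(query.split()) > 30 else "small-model-id"


def invoke_model(model_id: str, query: str) -> Dict[str, Any]:
    """Hypothetical placeholder for an Amazon Bedrock call; returns text and token counts."""
    return {"text": f"[{model_id}] answer", "input_tokens": len(query.split()), "output_tokens": 5}


def handler(event: Dict[str, Any], context: Any = None) -> Dict[str, Any]:
    """Lambda-style entry point behind API Gateway (proxy integration with a JSON body assumed)."""
    query = json.loads(event["body"])["query"]
    key = " ".join(query.lower().split())

    # 1. Caching layer: repeated questions never reach a model.
    if key in RESPONSE_CACHE:
        COST_LOG.append({"model": None, "tokens": 0})
        return {"statusCode": 200, "body": json.dumps({"answer": RESPONSE_CACHE[key], "cached": True})}

    # 2. Decision layer + orchestration: call the cheapest adequate tier.
    model_id = route(query)
    result = invoke_model(model_id, query)

    # 3. Cost monitoring: record tokens per interaction for dashboards and alarms.
    COST_LOG.append({"model": model_id, "tokens": result["input_tokens"] + result["output_tokens"]})

    RESPONSE_CACHE[key] = result["text"]
    return {"statusCode": 200, "body": json.dumps({"answer": result["text"], "cached": False})}


event = {"body": json.dumps({"query": "What are your hours?"})}
print(handler(event)["body"])  # first call: model invoked
print(handler(event)["body"])  # second call: served from cache
print(COST_LOG)
```

Checking the cache before routing means repeated questions cost neither a model call nor a routing decision, and logging every interaction (including cache hits) makes cost per request measurable.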

Best Practices for Sustainable AI Agent Autonomy

- Monitor Cost per Interaction – Break down cost metrics per query, per agent, or per workflow.

- Use Guardrails to Limit Unnecessary Exploration – Prevent agents from over-exploring irrelevant tasks.

- Prioritize ROI-driven Autonomy – Grant autonomy where it delivers measurable business value.

- Leverage Preprocessing and Rule-Based Filters – Filter out low-value tasks before passing them to costly LLMs.

- Continuously Refine Prompts – Well-optimized prompts reduce token count and unnecessary retries.

- Lifecycle Management – Shut down agents when not in use.
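Two of these practices are easy to prototype. The sketch below shows a rule-based prefilter that answers pure greetings, thanks, and empty messages without calling an LLM, plus a helper that computes average cost per interaction for each workflow. The patterns, canned replies, and workflow names are hypothetical.

```python
import re
from collections import defaultdict
from typing import Dict, List, Optional, Tuple

# Hypothetical rules: only whole-message greetings or thanks are intercepted,
# so "Hi, can you compare these contracts?" still reaches the LLM.
RULES = [
    (re.compile(r"^\s*(hi|hello|hey)[\s!.]*$", re.IGNORECASE), "Hello! How can I help?"),
    (re.compile(r"^\s*(thanks|thank you)[\s!.]*$", re.IGNORECASE), "You're welcome!"),
]


def prefilter(message: str) -> Optional[str]:
    """Return a canned reply for low-value messages, or None to forward to the LLM."""
    if not message.strip():
        return "Could you share a bit more detail?"
    for pattern, reply in RULES:
        if pattern.match(message):
            return reply
    return None


def cost_per_interaction(records: List[Tuple[str, float]]) -> Dict[str, float]:
    """Average cost per interaction for each workflow, from (workflow, cost_usd) records."""
    totals: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for workflow, cost in records:
        totals[workflow] += cost
        counts[workflow] += 1
    return {workflow: totals[workflow] / counts[workflow] for workflow in totals}


print(prefilter("Hello!"))                                # Hello! How can I help?
print(prefilter("Hi, can you compare these contracts?"))  # None
print(cost_per_interaction([("support", 0.02), ("support", 0.04), ("reports", 0.30)]))
```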

The Balance: Autonomy vs. Cloud Costs

The goal is not to reduce autonomy but to make it cost-aware. Businesses should design agents that:

- Make independent decisions.

- Optimize requests intelligently.

- Balance task accuracy with economic efficiency.

The organizations that succeed will build agents that are both smart and sustainable: intelligent systems that deliver long-term value without unpredictable cloud bills.

Conclusion

AI agents are powerful tools, but autonomy without cost-awareness can become a liability.




FAQs

1. Why do autonomous AI agents cost more than static chatbots?

ANS: – Autonomous agents take independent actions, triggering multiple API calls, memory lookups, and orchestration steps, whereas static chatbots follow pre-defined scripts.

2. What is the biggest driver of cost in LLM-based AI agents?

ANS: – The largest cost typically comes from model inference, especially if the agent uses long prompts, large context windows, or repeatedly invokes expensive foundation models.

3. How can I reduce token usage without losing context?

ANS: – Use summarization, embeddings, and vector search to shorten context and pass only relevant information to the model.

WRITTEN BY Modi Shubham Rajeshbhai

Shubham Modi is working as a Research Associate - Data and AI/ML at CloudThat. He is a focused and enthusiastic person, keen to learn new things in Data Science on the Cloud. He has worked on AWS, Azure, Machine Learning, and many more technologies.


September 22, 2025
