
Overview

AWS has announced that OpenAI’s open weight models, including gpt-oss-120b and gpt-oss-20b, are now available through Amazon Bedrock and Amazon SageMaker JumpStart. These models mark a significant expansion of AWS’s foundation model (FM) offerings, providing developers and organizations with new opportunities to build robust, transparent, and customizable AI applications tailored to real business needs.


Introduction

Artificial intelligence is reshaping how businesses operate, solve problems, and deliver customer experiences. A key driver of this transformation is the availability of advanced foundation models (FMs), which power applications ranging from natural language processing to automated reasoning.

The Evolution of Foundation Models on AWS

AWS’s commitment to democratizing AI technology is evident in its continuous efforts to expand its FM portfolio. By partnering with leading AI innovators, AWS ensures its users have seamless access to the most recent advancements. This strategy enables developers to experiment, innovate, and deploy AI solutions without the hassle of switching platforms or rewriting code.

Today, businesses need models that offer flexibility, transparency, and performance. OpenAI’s open weight models on AWS are designed to meet these needs, supporting text generation, reasoning, coding, scientific analysis, and mathematical computation at a level competitive with leading alternatives.

Deep Dive: OpenAI gpt-oss-120b and gpt-oss-20b

The newly launched OpenAI models come with open weights, a major advantage for organizations seeking fine-grained control over their infrastructure and data. Designed for text generation and advanced reasoning, these models excel in areas like:

- Coding assistance: Generate, complete, and debug code snippets.

- Scientific analysis: Process and interpret complex data or research findings.

- Mathematical reasoning: Solve equations, show logical chains of thought, and explain computations.

Key technical features include:

- 128K context window for handling context-rich tasks and lengthy documents.

- Adjustable reasoning levels (low/medium/high) for tuning model behavior to match specific needs.

- Support for agentic workflows through frameworks such as Strands Agents, suited to tasks that require multi-step reasoning or external tool integration.

- Tool use, so the model can call external tools and APIs to extend its capabilities.

- Chain-of-thought output for deep interpretability and validation of the model’s reasoning.
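The adjustable reasoning levels listed above are, according to OpenAI’s gpt-oss model card, selected through the system prompt (for example, `Reasoning: high`). The sketch below builds OpenAI-style chat messages that way. Whether a particular Amazon Bedrock or SageMaker endpoint honors this convention, or exposes a separate parameter instead, is an assumption to verify against the service documentation.

```python
# Reasoning levels described for gpt-oss in OpenAI's model card.
REASONING_LEVELS = ("low", "medium", "high")


def build_messages(user_prompt: str, level: str = "medium") -> list[dict]:
    """Return chat messages that request a reasoning level via the system prompt.

    Assumes the "Reasoning: <level>" system-prompt convention described for
    gpt-oss; check how your serving endpoint handles it.
    """
    if level not in REASONING_LEVELS:
        raise ValueError(f"level must be one of {REASONING_LEVELS}, got {level!r}")
    return [
        {"role": "system", "content": f"Reasoning: {level}"},
        {"role": "user", "content": user_prompt},
    ]
```

Low suits quick, general-purpose answers where latency matters; high trades extra output tokens and latency for deeper, more detailed analysis.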

These models are available via:

- Amazon Bedrock provides an OpenAI-compatible endpoint for use with the OpenAI SDKs, as well as its native InvokeModel and Converse APIs.

- Amazon SageMaker JumpStart offers evaluation, comparison, customization, and deployment within SageMaker Studio or using the Python SDK.
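A minimal sketch of calling the Converse API with boto3 follows. It assumes the model ID `openai.gpt-oss-20b-1:0` and that the model’s chain-of-thought arrives as `reasoningContent` blocks alongside ordinary `text` blocks; verify both against the Amazon Bedrock documentation. The client is passed in, so any object with a boto3-style `converse()` method works.

```python
# Assumed Bedrock model ID for gpt-oss-20b; confirm it in the Bedrock console.
MODEL_ID = "openai.gpt-oss-20b-1:0"


def build_converse_request(prompt: str, max_tokens: int = 512) -> dict:
    """Keyword arguments for the bedrock-runtime client's converse() call."""
    return {
        "modelId": MODEL_ID,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens},
    }


def split_reasoning_and_answer(response: dict) -> tuple[str, str]:
    """Separate reasoningContent blocks from text blocks in a Converse response."""
    reasoning, answer = [], []
    for block in response["output"]["message"]["content"]:
        if "reasoningContent" in block:
            text = block["reasoningContent"].get("reasoningText", {}).get("text", "")
            reasoning.append(text)
        elif "text" in block:
            answer.append(block["text"])
    return "\n".join(reasoning), "\n".join(answer)


def converse_once(client, prompt: str) -> tuple[str, str]:
    """Send one prompt through Converse and return (reasoning, answer)."""
    response = client.converse(**build_converse_request(prompt))
    return split_reasoning_and_answer(response)
```

With AWS credentials configured, `converse_once(boto3.client("bedrock-runtime", region_name="us-west-2"), "Explain a 128K context window.")` returns both parts. Keeping the request builder and parser separate from the network call makes them easy to test against a canned response.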

Getting Started with OpenAI Models on Amazon Bedrock

Accessing and using these models is straightforward:

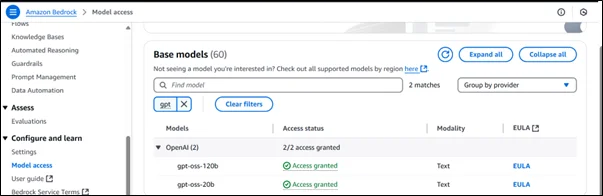

- Request Access: Navigate to Amazon Bedrock’s “Model access” section and request access to gpt-oss-120b and gpt-oss-20b.

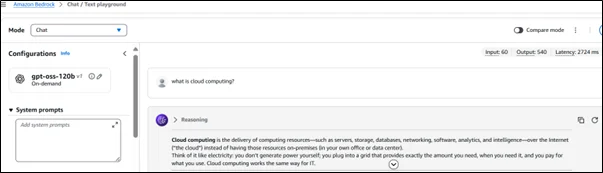

- Test in Playground: Use the Chat/Test playground to submit prompts and observe model outputs, including chain-of-thought reasoning.

- SDK Integration: Point the OpenAI SDK’s base URL at the Amazon Bedrock Runtime endpoint for your Region, authenticate with an Amazon Bedrock API key, and invoke the models programmatically.
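The SDK integration step might look like the following sketch. It assumes the OpenAI-compatible endpoint follows the pattern `https://bedrock-runtime.<region>.amazonaws.com/openai/v1`, that an Amazon Bedrock API key is stored in an environment variable named `BEDROCK_API_KEY` (a hypothetical name chosen for this example), and the model ID `openai.gpt-oss-120b-1:0`. Confirm all three in the Bedrock console before use.

```python
import os


def bedrock_openai_base_url(region: str) -> str:
    """Assumed URL pattern of Bedrock's OpenAI-compatible endpoint."""
    return f"https://bedrock-runtime.{region}.amazonaws.com/openai/v1"


def ask(prompt: str, region: str = "us-west-2",
        model: str = "openai.gpt-oss-120b-1:0") -> str:
    """Send one chat completion request to a gpt-oss model on Bedrock."""
    from openai import OpenAI  # pip install openai

    client = OpenAI(
        base_url=bedrock_openai_base_url(region),
        api_key=os.environ["BEDROCK_API_KEY"],  # hypothetical variable name
    )
    completion = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
    )
    return completion.choices[0].message.content
```

Because only `base_url` and `api_key` differ from a standard OpenAI setup, existing code written against the OpenAI SDK can usually be redirected to Bedrock with few other changes.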

Developers can use any supported framework (such as Strands Agents) to build AI agents, then deploy them to production with Amazon Bedrock AgentCore, which provides a scalable, secure runtime and identity management.
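Agent frameworks such as Strands Agents automate a loop that can be sketched directly against the Converse API: the model requests a tool, the application runs it and returns the result, and the model continues until it produces a final answer. In this sketch, `get_order_status` is a hypothetical tool standing in for a real business API, and `client` is any object with a boto3-style `converse()` method.

```python
from typing import Optional


def get_order_status(order_id: str) -> dict:
    """Hypothetical tool; replace with a call to a real system."""
    return {"order_id": order_id, "status": "shipped"}


TOOLS = {"get_order_status": get_order_status}

# Converse toolConfig describing the tool and its JSON input schema.
TOOL_CONFIG = {
    "tools": [{
        "toolSpec": {
            "name": "get_order_status",
            "description": "Look up the shipping status of an order.",
            "inputSchema": {"json": {
                "type": "object",
                "properties": {"order_id": {"type": "string"}},
                "required": ["order_id"],
            }},
        }
    }]
}


def run_tool_calls(assistant_message: dict) -> Optional[dict]:
    """Run every toolUse block; return a user message with the results, or None."""
    results = []
    for block in assistant_message["content"]:
        if "toolUse" in block:
            call = block["toolUse"]
            output = TOOLS[call["name"]](**call["input"])
            results.append({"toolResult": {
                "toolUseId": call["toolUseId"],
                "content": [{"json": output}],
            }})
    return {"role": "user", "content": results} if results else None


def agent_loop(client, model_id: str, prompt: str, max_turns: int = 5) -> str:
    """Call Converse until the model stops requesting tools; return its final text."""
    messages = [{"role": "user", "content": [{"text": prompt}]}]
    for _ in range(max_turns):
        response = client.converse(
            modelId=model_id, messages=messages, toolConfig=TOOL_CONFIG
        )
        message = response["output"]["message"]
        messages.append(message)
        tool_results = run_tool_calls(message)
        if tool_results is None:
            # No tool requested: join the plain text blocks as the answer.
            return "".join(b.get("text", "") for b in message["content"])
        messages.append(tool_results)
    raise RuntimeError("Agent did not finish within max_turns")
```

A framework such as Strands Agents takes over this bookkeeping; the loop here only illustrates the message flow between model and tools.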

Deploying OpenAI Models Through Amazon SageMaker JumpStart

On Amazon SageMaker Studio, users can:

- Set Up an Amazon SageMaker Domain: Configure it for a single user or for an organization.

- Model Selection: Choose either gpt-oss-120b or gpt-oss-20b for deployment.

- Customize & Deploy: Select instance type, configure deployment options, and create an endpoint accessible via SageMaker Studio or AWS SDKs.

This workflow lets users evaluate and tailor models to their needs before moving them into production.
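A programmatic version of the JumpStart workflow, using the SageMaker Python SDK’s `JumpStartModel` class, might look like the sketch below. The model ID `openai-reasoning-gpt-oss-20b` and the instance type are placeholders to replace with the values shown in SageMaker Studio, and the request body schema is an assumption that depends on the serving container. Some JumpStart models also require accepting an end-user license agreement at deploy time.

```python
# Placeholders: look up the exact JumpStart model ID and a supported
# instance type in SageMaker Studio before running.
JUMPSTART_MODEL_ID = "openai-reasoning-gpt-oss-20b"  # assumption
INSTANCE_TYPE = "ml.g6e.12xlarge"  # placeholder


def build_payload(prompt: str, max_tokens: int = 512) -> dict:
    """Chat-style request body; the exact schema depends on the serving container."""
    return {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }


def deploy_and_query(prompt: str):
    """Deploy the model to a real-time endpoint, send one request, then clean up."""
    from sagemaker.jumpstart.model import JumpStartModel  # pip install sagemaker

    model = JumpStartModel(model_id=JUMPSTART_MODEL_ID)
    predictor = model.deploy(instance_type=INSTANCE_TYPE)  # creates a billable endpoint
    try:
        return predictor.predict(build_payload(prompt))
    finally:
        # Remove the endpoint and model so a quick test does not keep incurring charges.
        predictor.delete_endpoint()
        predictor.delete_model()
```

For a long-lived deployment, keep the predictor and call `predict()` repeatedly instead of deleting the endpoint after each request.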

Key Benefits and Flexibility

- Strategic Model Choice: Through unified APIs such as the Converse API, Amazon Bedrock lets you switch between models and providers largely by changing the model ID rather than rewriting application code. This accelerates innovation and keeps AI strategies agile.

- Transparency: Chain-of-thought output exposes the model’s intermediate reasoning, which is valuable in mission-critical or regulated industries.

- Customizability: Modify, adapt, and fine-tune models for proprietary data, workflows, or industry-specific requirements.

- Security and Safety: The models have gone through safety evaluations before release, and they are compatible with OpenAI’s standard GPT-4 tokenizer.

- Regional Availability: In Amazon Bedrock, the models are available in the US West (Oregon) Region. In Amazon SageMaker JumpStart, they are available in US East (Ohio, N. Virginia) and Asia Pacific (Mumbai, Tokyo).
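The model-switching benefit can be illustrated with a sketch that sends the same Converse request to several model IDs and collects the replies; only the `modelId` string changes between calls. `client` is any object with a boto3-style `converse()` method, and the model IDs in the usage note are assumed values to check in the console.

```python
def compare_models(client, model_ids: list[str], prompt: str) -> dict[str, str]:
    """Send the same Converse request to each model and collect the text replies."""
    replies = {}
    for model_id in model_ids:
        response = client.converse(
            modelId=model_id,
            messages=[{"role": "user", "content": [{"text": prompt}]}],
        )
        blocks = response["output"]["message"]["content"]
        # Keep only plain text blocks; reasoning blocks are skipped here.
        replies[model_id] = "".join(b.get("text", "") for b in blocks)
    return replies
```

For example, `compare_models(boto3.client("bedrock-runtime", region_name="us-west-2"), ["openai.gpt-oss-20b-1:0", "openai.gpt-oss-120b-1:0"], "What is 17 * 23?")` returns one reply per model, which makes side-by-side evaluation straightforward.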

Things to Consider

- Pricing: Check Amazon Bedrock and Amazon SageMaker AI pricing pages for up-to-date costs, as usage and region can affect pricing.

- Model Access: Confirm that you are working in a Region where the models are offered and that model access has been granted for your account.

- Use Cases: Chain-of-thought output is particularly valuable for domains requiring explainability, such as finance, law, healthcare, and research.
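Because pricing depends on the model, the Region, and token usage, a rough per-request estimate can be computed from the `usage` field that the Converse API returns (`inputTokens`, `outputTokens`). The sketch takes per-1,000-token prices as arguments rather than hard-coding them; the prices in the usage note are hypothetical, so substitute current figures from the pricing page.

```python
def estimate_cost(usage: dict, price_in_per_1k: float, price_out_per_1k: float) -> float:
    """Estimate the USD cost of one Converse call from its token usage.

    usage: the "usage" dict from a Converse response.
    price_*_per_1k: prices per 1,000 tokens, taken from the pricing page.
    """
    input_cost = usage["inputTokens"] / 1000 * price_in_per_1k
    output_cost = usage["outputTokens"] / 1000 * price_out_per_1k
    return input_cost + output_cost
```

With hypothetical prices of $0.50 and $1.00 per 1,000 input and output tokens, `estimate_cost({"inputTokens": 2000, "outputTokens": 1000}, 0.50, 1.00)` returns 2.0. Higher reasoning levels tend to generate more output tokens, so they also tend to raise per-request cost.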

How to Access OpenAI Models in Amazon Bedrock

Enable model access

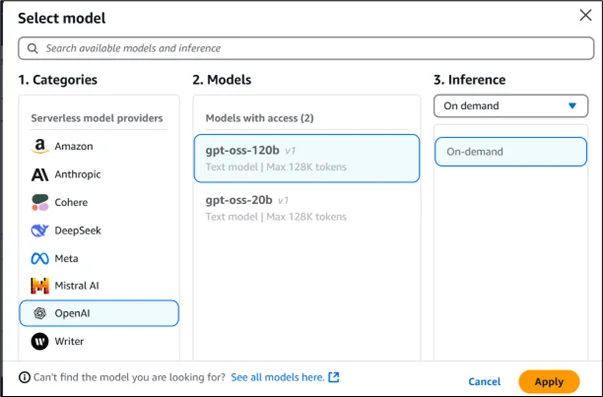

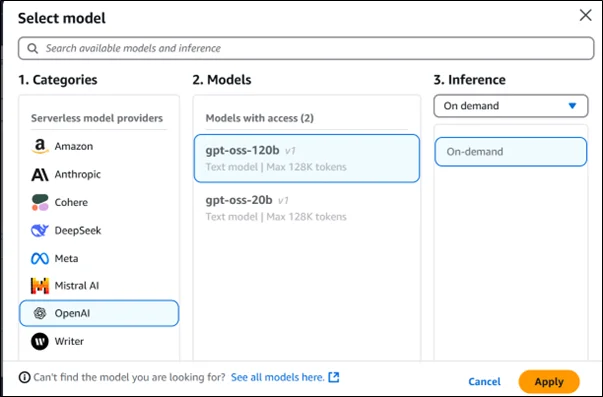
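Alongside the console steps, the Amazon Bedrock control-plane API can list the OpenAI models offered in a Region. The sketch filters the `modelSummaries` returned by `ListFoundationModels` on the provider name. A model appearing in this list does not by itself confirm that access has been granted to your account.

```python
def openai_model_ids(summaries: list[dict]) -> list[str]:
    """Return the sorted model IDs whose provider is OpenAI."""
    return sorted(
        s["modelId"]
        for s in summaries
        if s.get("providerName", "").lower() == "openai"
    )


def list_openai_models(bedrock_client) -> list[str]:
    """Query ListFoundationModels (boto3 "bedrock" client) and keep OpenAI models."""
    response = bedrock_client.list_foundation_models()
    return openai_model_ids(response["modelSummaries"])
```

For example, `list_openai_models(boto3.client("bedrock", region_name="us-west-2"))` returns the IDs to use as `modelId` in later runtime calls.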

In the playground, select OpenAI as the model provider.

Response:

Conclusion

Launching OpenAI’s open weight models on AWS is a significant step for AI developers and enterprises. With strong text generation and reasoning capabilities, visible chain-of-thought output, and weights that can be customized, these models offer practical advantages over closed solutions. By making cutting-edge AI accessible through trusted platforms like Amazon Bedrock and Amazon SageMaker JumpStart, AWS continues to drive innovation and empower customers to realize the full potential of artificial intelligence.

Drop a query if you have any questions regarding OpenAI and we will get back to you quickly.


About CloudThat

CloudThat is an award-winning company and the first in India to offer cloud training and consulting services worldwide. As a Microsoft Solutions Partner, AWS Advanced Tier Training Partner, and Google Cloud Platform Partner, CloudThat has empowered over 850,000 professionals through 600+ cloud certifications, winning global recognition for its training excellence, including 20 MCT Trainers in Microsoft’s Global Top 100 and 12 awards in the last 8 years. CloudThat specializes in Cloud Migration, Data Platforms, DevOps, IoT, and cutting-edge technologies like Gen AI & AI/ML. It has delivered over 500 consulting projects for 250+ organizations in 30+ countries as it continues to empower professionals and enterprises to thrive in the digital-first world.

FAQs

1. What are open weight models, and why do they matter?

ANS: – Open weight models publish their trained parameters (weights), so organizations can run, inspect, and fine-tune them on infrastructure they control. This lets them adapt and validate model behavior to fit specific business needs, strengthen data security, and meet regulatory requirements.

2. How do I access OpenAI open weight models on AWS?

ANS: – Use Amazon Bedrock to request access and experiment with the models, or use Amazon SageMaker JumpStart for model evaluation, customization, and deployment. Amazon Bedrock works with the OpenAI SDKs and the AWS SDKs, while SageMaker JumpStart works through SageMaker Studio and the SageMaker Python SDK, so onboarding is quick on either platform.

3. Can I deploy these models for production use?

ANS: – Yes. Amazon Bedrock and Amazon SageMaker JumpStart offer streamlined workflows for deploying OpenAI open weight models into production. You can start with a simple evaluation, then build, customize, and scale as needed with tools designed for enterprise-level reliability and security.

WRITTEN BY Yerraballi Suresh Kumar Reddy

Suresh is a highly skilled and results-driven Generative AI Engineer with over three years of experience and a proven track record in architecting, developing, and deploying end-to-end LLM-powered applications. His expertise covers the full project lifecycle, from foundational research and model fine-tuning to building scalable, production-grade RAG pipelines and enterprise-level GenAI platforms. Adept at leveraging state-of-the-art models, frameworks, and cloud technologies, Suresh specializes in creating innovative solutions to address complex business challenges.


September 22, 2025
