Overview

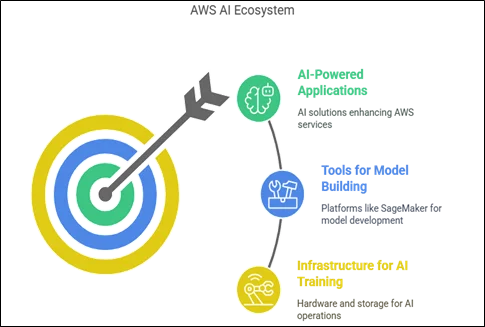

As artificial intelligence continues to reshape the digital landscape, Amazon Web Services (AWS) remains determined to secure its leadership position in AI. Central to AWS’s AI vision is its three-layer strategy, which spans infrastructure, development tools, and applications. This layered structure is intended to help organizations of all sizes use AI, whether they are training large foundation models (FMs), building custom solutions, or simply consuming AI through ready-to-use applications.

But how well is AWS delivering on this ambitious framework? With increased competition from Google Cloud and Microsoft Azure, and with NVIDIA’s growing dominance in AI chips and software, AWS is under pressure to show real innovation, not just marketing flair. Let’s dig into each layer and see how AWS is performing.

The Bottom Layer: Infrastructure for AI Training

AWS’s Custom Silicon: Inferentia and Trainium

AWS has invested heavily in custom hardware to support AI training and inference. The two standout chips in this domain are Inferentia (for inference workloads) and Trainium (for training deep learning models). Both are purpose-built to offer better price-performance than general-purpose GPUs.
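"Better price-performance" comes down to a simple ratio: how much work an instance completes per dollar spent, i.e. throughput (samples per second) × 3600 ÷ hourly price. The sketch below compares candidate instances on that metric. The instance labels and every number in it are hypothetical placeholders, not AWS benchmarks or prices; substitute throughput you have measured on your own model and the prices you actually pay.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class InstanceOption:
    """A candidate instance with caller-supplied throughput and price.

    Nothing here is real AWS pricing or benchmark data."""
    name: str
    samples_per_second: float  # throughput measured on your own workload
    usd_per_hour: float        # hourly price you pay for the instance

    def samples_per_dollar(self) -> float:
        # One hour of runtime processes samples_per_second * 3600 samples.
        return self.samples_per_second * 3600 / self.usd_per_hour


def best_value(options: List[InstanceOption]) -> InstanceOption:
    """Return the option that processes the most samples per dollar."""
    return max(options, key=lambda option: option.samples_per_dollar())


if __name__ == "__main__":
    # Hypothetical placeholder values, for illustration only.
    candidates = [
        InstanceOption("gpu-instance", samples_per_second=1000.0, usd_per_hour=10.0),
        InstanceOption("trainium-instance", samples_per_second=800.0, usd_per_hour=6.0),
    ]
    for candidate in candidates:
        print(f"{candidate.name}: {candidate.samples_per_dollar():,.0f} samples per dollar")
    print("best value:", best_value(candidates).name)
```

With these made-up numbers, the slower option still wins on cost (480,000 vs. 360,000 samples per dollar), which is the kind of trade-off the custom chips are designed around. Whether it holds for a real model depends on measured throughput, including any porting effort.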

However, the real-world adoption of these chips has been slow. Most machine learning practitioners and organizations still rely on NVIDIA’s powerful and mature GPU ecosystem. Tools like CUDA, cuDNN, and TensorRT are deeply entrenched in AI development workflows, and AWS’s silicon, while technically impressive, has yet to convince the majority to switch.

Moreover, using AWS Trainium or AWS Inferentia typically means adopting the AWS Neuron SDK and adapting the existing development stack to it. For many teams, this friction outweighs the performance benefits, especially when NVIDIA continues to innovate on both hardware and software fronts.

Compute and Storage Still Rule

Despite the flashy chip announcements, AWS’s true strength in the bottom layer lies in its broad range of compute instances (GPU-based EC2 P4d, as well as Inferentia-based Inf2 and Trainium-based Trn1) and scalable storage options (such as Amazon S3 and Amazon EBS). These foundational services are essential for training models at scale and remain widely used.

AWS also offers tight integration with container orchestration (Amazon ECS, Amazon EKS), CI/CD pipelines, and networking services. Even without widespread adoption of its custom silicon, AWS remains a strong infrastructure choice.

The Middle Layer: Tools for Model Building

Amazon SageMaker

At the center of AWS’s middle layer is Amazon SageMaker, a fully managed service that provides tools to build, train, and deploy machine learning models. Amazon SageMaker offers built-in algorithms, managed notebooks, auto-scaling clusters, and MLOps capabilities. It’s a comprehensive platform, but that breadth comes with complexity.

Amazon SageMaker has seen steady improvements, including Amazon SageMaker Studio, JumpStart (for prebuilt models), and Data Wrangler (for data preparation). These additions make it more approachable, but there is still a learning curve, and competing platforms such as Google Vertex AI and Azure Machine Learning are often considered more intuitive.

Model Context Protocol and AI Tooling

Recently, AWS has taken a significant step by embracing the Model Context Protocol (MCP), an open standard introduced by Anthropic, in its developer tooling. MCP allows large language models to interact with external data sources and services through a common interface. AWS’s announcement of MCP support in the Amazon Q CLI and the launch of AWS MCP Servers strongly signal that it wants to be a serious player in the next generation of agentic AI development.

By connecting AI assistants directly to AWS best practices, documentation, and service APIs, AWS enables developers to write better infrastructure code and build cloud-native AI applications faster. This could be a game-changer if adopted broadly.
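To make "interacting with external services" concrete: MCP messages are JSON-RPC 2.0, and a client discovers a server's tools with a `tools/list` request and invokes one with `tools/call`. The minimal sketch below only builds and parses those messages. The tool name `search_documentation` and its `query` argument are placeholders chosen for illustration (check a real server's `tools/list` output for its actual tools), and the transport (stdio or HTTP) and the required `initialize` handshake are deliberately left out.

```python
import json
from typing import Any, Dict, Optional


class McpRequestBuilder:
    """Builds MCP client requests as JSON-RPC 2.0 messages.

    Transport and the initialize handshake are omitted; this shows message shapes only."""

    def __init__(self) -> None:
        self._next_id = 1

    def _request(self, method: str, params: Optional[Dict[str, Any]] = None) -> str:
        message: Dict[str, Any] = {"jsonrpc": "2.0", "id": self._next_id, "method": method}
        if params is not None:
            message["params"] = params
        self._next_id += 1  # unique ids let the client match responses to requests
        return json.dumps(message)

    def list_tools(self) -> str:
        # Ask the server which tools it exposes (names, descriptions, input schemas).
        return self._request("tools/list")

    def call_tool(self, name: str, arguments: Dict[str, Any]) -> str:
        # Invoke one tool by name with arguments matching its input schema.
        return self._request("tools/call", {"name": name, "arguments": arguments})


def extract_text(response_json: str) -> str:
    """Join the text items of a tools/call result, raising on JSON-RPC errors."""
    response = json.loads(response_json)
    if "error" in response:
        raise RuntimeError(response["error"].get("message", "unknown JSON-RPC error"))
    content = response["result"].get("content", [])
    return "\n".join(item["text"] for item in content if item.get("type") == "text")


if __name__ == "__main__":
    builder = McpRequestBuilder()
    print(builder.list_tools())
    # Hypothetical tool name and argument, for illustration only.
    print(builder.call_tool("search_documentation", {"query": "S3 bucket encryption"}))
    # A canned, hypothetical server response used only to exercise extract_text.
    canned = json.dumps({
        "jsonrpc": "2.0",
        "id": 2,
        "result": {"content": [{"type": "text", "text": "Enable default encryption."}]},
    })
    print(extract_text(canned))
```

In practice an MCP client library handles this framing; the point is that an assistant such as Amazon Q only needs a tool's name and argument schema to call into AWS documentation or service APIs.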

The Top Layer: AI-Powered Applications

Amazon Q and Amazon Bedrock

At the top layer, AWS is betting on Amazon Q, its AI assistant integrated with developer tools and enterprise apps. Amazon Q can answer questions, write code, and help troubleshoot AWS configurations using natural language. With MCP integration, Q becomes more context-aware and intelligent, potentially transforming cloud development.

In addition, Amazon Bedrock allows users to consume foundation models from providers like Anthropic, Meta, and Stability AI via an API. Amazon Bedrock removes the complexity of managing model infrastructure and supports customization through techniques like RAG (retrieval-augmented generation) and fine-tuning.
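A minimal sketch of the RAG pattern on top of Bedrock's Converse API: retrieve a few relevant passages, place them in the prompt, and build the request. The keyword-overlap `retrieve` function is a toy stand-in for an embedding-based vector search, `example-model-id` is a placeholder rather than a real model ID, and the code only builds the request dictionary, so it runs without AWS credentials.

```python
import re
from typing import Any, Dict, List, Set


def tokenize(text: str) -> Set[str]:
    # Lowercase word tokens; a real system would compare embeddings instead.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return up to k documents sharing the most words with the query (toy retriever)."""
    query_tokens = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(query_tokens & tokenize(d)), reverse=True)
    # Drop documents with no overlap so irrelevant text never reaches the prompt.
    return [d for d in ranked[:k] if query_tokens & tokenize(d)]


def build_converse_request(model_id: str, question: str, context: List[str],
                           max_tokens: int = 512, temperature: float = 0.2) -> Dict[str, Any]:
    """Build keyword arguments for the Bedrock Runtime Converse API."""
    context_block = "\n".join(f"- {passage}" for passage in context)
    prompt = (
        "Answer using only the context below.\n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {question}"
    )
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": temperature},
    }


if __name__ == "__main__":
    docs = [
        "Amazon S3 stores objects in buckets.",
        "Trainium is built for training deep learning models.",
        "Inferentia targets inference workloads.",
    ]
    question = "Which chip is built for training?"
    request = build_converse_request("example-model-id", question, retrieve(question, docs, k=1))
    print(request["messages"][0]["content"][0]["text"])
```

With credentials and model access configured, the dictionary could be sent with `boto3.client("bedrock-runtime").converse(**request)`, and the reply text read from `response["output"]["message"]["content"][0]["text"]`. Keeping retrieval separate from the request builder makes it easy to swap the toy retriever for a real vector store.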

Application Ecosystem

Beyond Amazon Bedrock and Amazon Q, AWS continues to embed AI across its service portfolio. Services like Amazon Connect, AWS Lambda, and Amazon Comprehend are now being enhanced with AI features such as voice analytics, intelligent routing, and sentiment analysis. While many of these updates feel incremental, the broader vision is to make every AWS service smarter and more autonomous.

Conclusion

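AWS has developed a comprehensive three-layer AI strategy, but execution varies across the stack. Its compute and storage foundation remains strong while its custom silicon has seen slow adoption; Amazon SageMaker is powerful but complex; and MCP support and Amazon Q show promise at the upper layers.

AWS’s AI vision still faces challenges. Usability, interoperability with popular tools, and developer adoption will be the real tests of its success. If AWS can lower barriers to entry and continue integrating intelligence across its services, it may indeed deliver on its layered AI strategy.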

About CloudThat

CloudThat is an award-winning company and the first in India to offer cloud training and consulting services worldwide. As a Microsoft Solutions Partner, AWS Advanced Tier Training Partner, and Google Cloud Platform Partner, CloudThat has empowered over 850,000 professionals through 600+ cloud certifications, winning global recognition for its training excellence, including 20 MCT Trainers in Microsoft’s Global Top 100 and 12 awards in the last 8 years. CloudThat specializes in Cloud Migration, Data Platforms, DevOps, IoT, and cutting-edge technologies like Gen AI & AI/ML. It has delivered over 500 consulting projects for 250+ organizations in 30+ countries as it continues to empower professionals and enterprises to thrive in the digital-first world.

FAQs

1. What are the three layers of AWS’s AI strategy?

ANS: – AWS categorizes its AI strategy into:

- Bottom Layer: Infrastructure for training and running AI models (e.g., AWS Trainium, Amazon EC2, Amazon S3).

- Middle Layer: Tools for building, training, and managing models (e.g., Amazon SageMaker, AWS MCP Servers).

- Top Layer: Applications and services that leverage AI (e.g., Amazon Q, Amazon Bedrock).

2. What is Trainium, and why does it matter?

ANS: – Trainium is a custom AI chip built by AWS for training deep learning models. It aims to offer higher performance at lower cost than GPUs, but it requires adopting AWS-specific toolchains such as the AWS Neuron SDK.

WRITTEN BY Shubham Namdev Save

Shubham Save is a Research Associate at CloudThat, specializing in a wide range of AWS services with hands-on experience in Amazon EMR, Redshift, Aurora, DynamoDB, and more. Known for his punctuality and dedication, he is committed to delivering high-quality work. Passionate about continuous learning, Shubham actively explores emerging technologies and cloud innovations in his free time, ensuring he stays ahead in the ever-evolving tech landscape.

April 23, 2025